The "Best" of Big Media

Large publishers who lobbied Google hard for a ranking boost got it when Panda launched:

“A private understanding was reached between the OPA and Google,” an office assistant with e-mail evidence told Politically Illustrated. “The organization is responsible for coordinating legal and legislative matters that impact our members, and one of the issues was applying pressure to Google to get them to adjust their search algorithm to favor our members.”

At the same time, said "premium publishers" were backfilling their websites, padding them out with auto-generated junk created by companies like Daylife, where some of the pages offer Mahalo-inspired 100% recycled content.

My suspicion is that Google did not care about the auto-generated "news" garbage for a number of reasons:

- it helps subsidize the big media interests

- they don't want to hit big media & cause a backlash

- it is quite easy for Google to detect & demote whenever they want to

- it gives Google more flexibility going forward when deciding how to deal with issues (if everyone is a spammer then Google has more flexibility in deciding how to handle "spam" to maximize their returns.)

It is the exact same reason that Google says link buying is bad, while tolerating "sponsored features" sections on large newspapers.

Machine Generated Journalism

Where Google winds up in trouble on this front is when start ups that create machine generated content go mainstream. (Unless Google buys them, then it is more free content for Google!)

The leaders of Narrative Science emphasized that their technology would be primarily a low-cost tool for publications to expand and enrich coverage when editorial budgets are under pressure. The company, founded last year, has 20 customers so far. Several are still experimenting with the technology, and Stuart Frankel, the chief executive of Narrative Science, wouldn’t name them. They include newspaper chains seeking to offer automated summary articles for more extensive coverage of local youth sports and to generate articles about the quarterly financial results of local public companies.

Official sources using "automated journalism" is a perfect response to Google's brand-focused algorithms:

Last fall, the Big Ten Network began using Narrative Science for updates of football and basketball games. Those reports helped drive a surge in referrals to the Web site from Google’s search algorithm, which highly ranks new content on popular subjects, Mr. Calderon says. The network’s Web traffic for football games last season was 40 percent higher than in 2009.

How expensive (or, rather, how cheap) is that technology?

The above linked article states that "the cost is far less, by industry estimates, than the average cost per article of local online news ventures like AOL’s Patch or answer sites, like those run by Demand Media."

Once again, even the lowest paid humans are too expensive when compared against the cost of robots.

And the exposure earned by the machine-generated content will be much greater than Demand Media gets, since Demand Media was torched by the Panda update AND many of the sites using this "algorithmic journalism" were given a ranking boost by Google due to their brand strength.

The improved cost structure for firms employing "algorithmic journalism" will evoke Gresham's law. This starts off on niche market edges to legitimize the application, fund improvement of the technology & "extend journalism" but a couple years into the game a company that is about to go under bets the farm. When the strategy proves a winner for them, competing publishers either adopt the same or go under.

That is the future.

Across thousands of cities, millions of topics & billions of people.

Even More Corporate Boosts

Just because something is large does not mean it is great across the board. Businesses have strengths and weaknesses. Sure, I love shopping on eBay for vintage video games, but does that mean I want to buy books from eBay? Nope.

Likewise, Google's friend of a friend approach to social misses the mark. Do I care that someone I exchanged emails with is a fan of an athlete who promotes his own highlight reels? No, I do not.

In a world where machine generated journalism exists, I might LOVE one article from a publication while loathing auto-generated garbage published elsewhere on the same site.

Line Extension & "Merging Without Merging"

At Macworld in 2007 Eric Schmidt said "What I liked about the new device and the architecture of the Internet is you can merge without merging. Each company should do the absolutely best thing they can do every time, and I think he's shown that today."

If you don't have the ability to algorithmically generate content to test new markets then one of the best ways to "merge without merging" is to sell traffic to partners via an affiliate program.

Google has no problem promoting their own affiliate network, investing in other affiliate networks, or inserting themselves as the affiliate.

Google is also fine with Google scraping 3rd party data & creating a content farm that inserts themselves in the traffic stream. After they have damaged the ecosystem badly enough they can then buy out a 2nd or 3rd tier market player for pennies on the dollar & integrate them into a Google product featured front & center. (It is not hard to be better than the rest of the market after you have sucked the profits out of the vertical & destroyed the business models of competitors.)

Others don't have the ability to arbitrarily insert themselves into the traffic stream. They have to earn the exposure. But if other people want to play the affiliate game, they need to have "brand."

Affiliates Not Welcome in the Google AdWords Marketplace

At Affiliate Summit last year Google's Frederick Vallaeys basically stated that they appreciated the work of affiliates, but as the brands have moved in the independent affiliates have largely become unneeded duplication in the AdWords ad system. To quote him verbatim, "just an unnecessary step in the sales funnel."

In the free SEO tips we send new members, I recommend setting up AdWords and adCenter accounts to test traffic streams, so that you have the data needed to know what keywords to target. But affiliates need not apply:

Hello Aaron Wall,

I just signed up for the Get $75 of Free AdWords with Google Adwords. After receiving an e-mail stating that I was to call an 877 number of Google Adwords, I was told in my phone call that affiliate marketing accounts were not accepted. I guess I confused by this statement. Is this in error? Or am I not understanding the Tip #3 for setting up an account for Google Adwords for promoting a website?

Thank you in advance for your time.

Sincerely,

Carole

The same Google which allows itself to shamefully carry a "get rich quick" AdSense category considers affiliate marketing unacceptable.

Non-AdSense Affiliates Classified as Doorway Pages, Not Welcome in the Organic Search Results?

The exact same thing is happening in the organic search results right now. Maybe not on your keywords & maybe not today, but if you are an affiliate, the trend is not your friend. ;)

I have heard recently from multiple friends that some of their affiliate sites were penalized for being doorway & bridge pages. At the same time, another friend showed me some BeatThatQuote affiliates ranking thin websites.

What is worse is that in many instances Google considers networks of similar sites to be spam. Yet at the same time the quickly growing Google Ventures is investing in companies like Whaleshark Media - a roll up currently consisting of 7 *exceptionally* similar websites in the same vertical.

Larger companies like BankRate can run a half-dozen credit card affiliate websites & an affiliate network. And they can create risk-adjusted yield by buying out smaller competitors, largely because Google won't penalize a site owned by a Fortune 500 company. However, the independent affiliate is forced to sell out early due to the risk that Google can arbitrarily decide they are a doorway site at any time.

The absurd thing is that if independent webmasters don't include revenue generation in their website then they don't have the capital *required* to invest in brand & further improving their website. How do you compete against automated journalism when Google gives the automated content a ranking boost? And if you want to do higher quality than the machine generated content, how do you hire employees if you are not even allowed to monetize?

I suppose there is AdSense.

Even though AdSense publishers are Google's affiliates they are still welcome to participate in Google's ecosystem.

Risks to Small Businesses

Small businesses not only have to compete against algorithmic journalism, Google's algorithmic bias toward brands, arbitrary "doorway page" editorial judgements cast against them by engineers & significant algorithm changes, but they also have to deal with loopholes Google leaves in the system that allow them to be arbitrarily removed from the ecosystem.

Google showing you "closed in error" wouldn't be such a big deal if they didn't copy code, violate patents, deal in patents to spread their ecosystem, aggressively bundle & advertise, engage in price dumping, and behave in other anti-competitive ways to put their "incorrect facts" in front of billions of people.

The big issue Google is facing on the content quality front is the incentive structure. They have got that wrong for a long time now. They may think that these big changes are motivating people to improve quality, but realistically the lack of certainty is prohibiting investment in real quality while ramping investment in exploitation.

How can anyone invest deeply over the long term in a search ecosystem where Google...

Google would spin Performics out of DoubleClick, and sell it to holding firm Publicis.

Only one major force inside of Google hated the plan. Guess who? Larry Page.

According to our source, Larry tried to sell the rest of Google's executive team on keeping Performics.

"He wanted to see how those things work. He wanted to experiment."

The problem with that is that most honest economic innovation (that is, not just exploitation) comes from small businesses. Going into peak cheap oil where food riots are becoming more common & pensions are about to blow up, we need the kings of information to encourage innovation, rather than relying on doing whatever is easy & trusting established old leaders while retarding risk taking from (& investment in) startups.

In some markets being successful means staying small, building deeper into a niche, and continuing to add value until you have a strong position. However, some ecommerce sites that were not associated with big brands were torched by the Panda update.

Betting on Brand

As Google has tilted their algorithm toward brand, some ecommerce companies that focused on winning relevant niches are now watering down their competitive advantages by betting the company on brand:

CSN Stores is today consolidating its 200+ shopping sites into a single ecommerce website under one brand: Wayfair.com.

...

So why the change to Wayfair.com? Primarily for obvious branding reasons: the company has long been spending a huge amount of money on marketing a lot of separate websites, and now they can focus on advertising just one.

...

Other reasons for the consolidation of the separate shopping site are search engine optimization – which was apparently much needed after Google’s recent Panda update – and the fresh ability to make recommendations to shoppers based on their collective purchase history.

But, as some brands abuse Google the same way the content farms did, is that a good bet? I don't think it is.

What is so Bad About Content Farms?

- low quality

- headline over-promises, content under-delivers

- anonymously written

- written by people who are often ignorant of what they are writing about

- add nothing new to the ecosystem, just a dumbed-down rehash of what already exists

- done cheaply & in bulk, in a factory-line styled format

- contains frequent spelling and grammatical errors

- primarily focused on pulling in traffic from search engines

- exists primarily to promote something else (ads or the above-the-fold ecommerce product listings)

- etc. etc. etc.

Such behavior is *not* unique to the sites that were branded as content farms & is quickly spreading across Fortune 500 websites.

Big Brands Become Content Farms

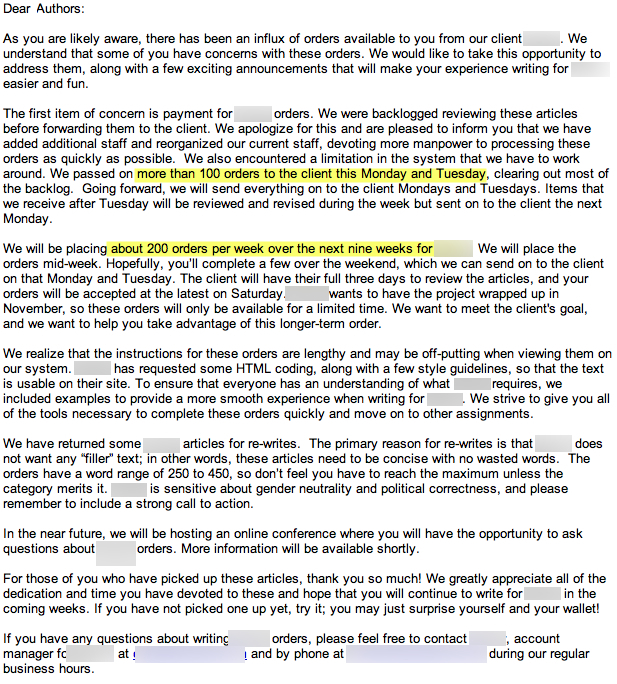

A friend sent me an email which highlighted how a well-known brand was ordering thousands of pieces of "content" in bulk for their branded site.

Here is the email, with blurring to protect the guilty.

The only difference between the "content farms" and the branded sites engaging in content farming is the logo up in the top-left corner of the page. The business process from how the content is created, to who it is created by, to what they are paid to create it, to the interface it is ordered through, on to how it is published is exactly the same.

Many of the same authors who had some of their eHow "articles" deleted are now writing dozens of "articles" for Fortune 500 websites.

When Panda happened & I saw corporate doorway pages (& recycled republished tweets) ranking I hinted that we could expect this problem. I thought it would start with parasitic hosting on branded sites & maybe a few opportunistic brand extensions.

Then I expected it would likely take a couple years to go mainstream.

But with the economy being so weak (and back in yet another undeclared recession or, honestly, never having left the last one) this shift only took 6 months to happen. At this point I expect it to spread quickly, especially as the economy gets worse. The above Fortune 500 company is one that got a strong boost from Panda & as their downstream traffic from Google picks up over the next month or two you can expect many of their competitors to copy the strategy.

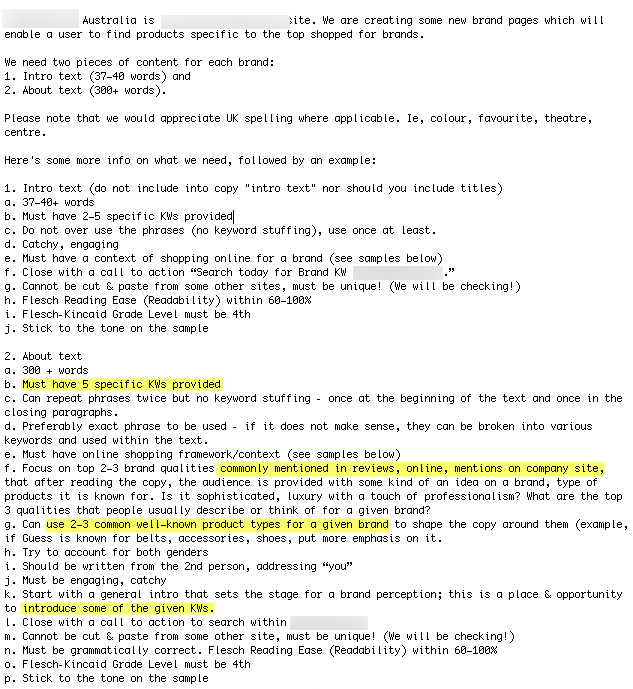

This isn't a US-only phenomena. A community member sent me the following, from another fortune 500 company.

Now that Fortune 500s are doing almost everything that smaller players could do (but with more capital, more scale, more algorithmic immunity, requiring smaller players to link to them to be listed & in some cases while replacing humans with algorithms) AND get the Google brand boost, the future is growing more uncertain for independent webmasters that lack brand, relationships, and community.

Big brands are basically pushed across the finish line while smaller webmasters must run uphill with an 80-pound backpack full of gear - in ice & snow, naked, while being shot at. What's worse is that brands are now being bought, sold & licensed - just one more tool in the marketer's toolbox (presuming you have the cash).

Disclaimer: I am not saying that all content farming is bad (I am fairly agnostic...if it works & people like it, then it works), but the above trend highlights the absurdity of Google's notion of whether something is spam based not on the offense, but rather who is doing it, especially as big brands just quietly turned into content farms.