There has been a lot of talk in the SEO community about Social Rank.

And some talk that it might die soon.

What Is "Social Rank"?

As far as SEO is concerned, social rank is the idea that Google and other search engines use social networking indicators in their ranking algorithms. If you get mentioned and linked to often from social media profiles, this helps your site rank in the search engines.

Check out Danny Sullivan's Q&A session on this topic with Google and Bing representatives.

Do you calculate whether a link should carry more weight depending on the person who tweets it?

Yes we do use this as a signal, especially in the “Top links” section [of Google Realtime Search]. Author authority is independent of PageRank, but it is currently only used in limited situations in ordinary web search

Google intimate it's tied in with PageRank, which Danny also discusses.

To some degree, “humans” on the web have pages that already represent their authority.

For example, my Twitter page has a Google PageRank score of 7 out of 10, which is an above average degree of authority in Google’s link counting world. Things I link to from that page — via my tweets — potentially get more credit than things someone whose Twitter page has a lower PageRank score. (NOTE: PageRank scores for Twitter pages are much different if you’re logged in and may show higher scores. This seems to be a result of the new Twitter interface that has been introduced. I’ll be checking with Google and Twitter more about this, but I’d trust the “logged out” scores more).

Google is a vote counting engine, so it isn't surprising they count votes from social network sites. It should also come as no surprise that Google uses Twitter to help determine interest in news events, as the Twitter platform lends itself to news. This will then flow through into their news ranking. There are also indirect benefits, i.e. the attention generates articles and commentary, which then link back to your site.
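To make the "weighted vote" idea concrete, here is a toy sketch. It is not how Google or Bing actually compute anything: the account names, the authority numbers, and the choice to simply add up authority per link are all assumptions made for illustration.

```python
from collections import defaultdict

def score_links(tweets, authority):
    """Sum the (assumed) authority of every account that shared each URL."""
    scores = defaultdict(float)
    for author, url in tweets:
        # Accounts with no known authority contribute nothing in this toy model.
        scores[url] += authority.get(author, 0.0)
    return dict(scores)

# Hypothetical authority scores, loosely in the spirit of a 0-10 scale.
authority = {"veteran_blogger": 7.0, "new_account": 1.0}

# Hypothetical (author, url) pairs standing in for tweeted links.
tweets = [
    ("veteran_blogger", "example.com/a"),
    ("new_account", "example.com/b"),
    ("new_account", "example.com/b"),
    ("new_account", "example.com/a"),
]

scores = score_links(tweets, authority)
```

In this sketch, one share from the high-authority account outweighs two shares from the low-authority one, which is the effect described in the quote above.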

All links are valuable, because attention - human, spider, or both - travels along them. Google will always be interested in who is paying the most attention to what. If people are using social networks to do that, then that is where Google needs to be.

Of course, like search, social media is open to abuse.

How To Do Blackhat Social Rank

Black or grey, here are a few of the more aggressive tactics in use:

- Fake Profiles - auto gen an entire network of friends

- Duplicate/Fake Content - plenty of auto-gen tools about that will make posts and requests on your behalf

- Pay Important People To Tweet Your Link As Editorial - or put your link on their profile page

- Buy Social Media Accounts

You might have spotted a few more.

The social services will, of course, combat any threat they deem detrimental to their business. Just like in search, the game will be never-ending, as the blackhats find holes in the system, and the engineers plug them. And just like times past in SEO, the ethical debate rears its head.

Is it morally "right" or "wrong" to use technique X, Y and Z?

All a bit silly, really. People will use a technique regardless of other people's ethical dilemmas, so long as it works. It's up to the social networks, and Google, to stop what they might consider abusive practices from working, or paying off.

And they will, although they've probably got their work cut out for them. It's one thing to look at a page about, say, fitness and determine the links running along the bottom for "ring tones", "bad credit loans" and "viagra" are likely dodgy, but another thing to look at profile activity and determine whether there is a human behind it.

Social media is evolving quickly, and it will take time to patch issues, both technically and culturally. So I'm sure the blackhats will be having fun for some time yet.

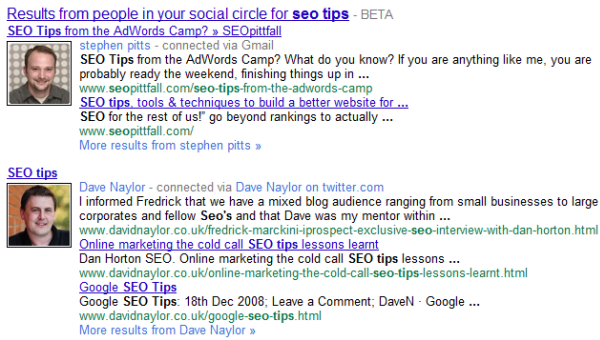

Personalized Social Recommendations

Google sometimes may list results from your "social circle" at the bottom of the organic search results. The good thing about these results is that most of the recommendations are fairly transparent & benign.

Bing is displaying Facebook "like" data & Blekko is pushing harder at integrating Facebook likes into their algorithm as well.

A "like" might have multiple meanings depending on who is doing it. Do the votes for this page "like" Google, PPCBlog, PPCBlog's explanation of Google, search in general, algorithms, SEO, infographics, technology, marketing, or ...?

In search there is a concept of stop-words, which are words that would not be counted much because they are so common they don't really tell you much about a piece of content. Some keywords (say "mesothelioma") have a higher discrimination value than others (say "the"). A "like" doesn't have a great discrimination value, largely because you don't know why someone liked something. The nuanced subtleties are lost without context. Something might be liked because it is clever, in-depth, correct, humorous, offensive, and incorrect - all at the same time! It all comes down to interpretation & perspective.
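One common way to put a number on "discrimination value" is inverse document frequency (IDF): the rarer a term is across a collection, the higher it scores. The sketch below is a minimal illustration using the plain log(N / df) form. The four sample documents are made up, returning 0.0 for a term that never appears is a convention chosen here, and real search engines use more elaborate weighting than this.

```python
import math

def inverse_document_frequency(term, documents):
    """Return log(N / df), where df is the number of documents containing term."""
    containing = sum(1 for doc in documents if term in doc.lower().split())
    if containing == 0:
        # Assumed convention for this sketch: unseen terms score 0.0.
        return 0.0
    return math.log(len(documents) / containing)

# Made-up mini collection for illustration only.
documents = [
    "the lawyer explained the mesothelioma claim",
    "the search engine ranks the page",
    "the like button sits on the page",
    "the marketer shared the link",
]

idf_the = inverse_document_frequency("the", documents)
idf_meso = inverse_document_frequency("mesothelioma", documents)
```

Here "the" appears in every document, so it tells you nothing about which document you are looking at, while "mesothelioma" appears in only one and scores much higher. A bare "like" behaves more like "the": it shows up everywhere and for every reason.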

Some people will offer tips on "scaling your social footprint" and such, but the trade-off is that on networks where relationships are reciprocal (like on Facebook) you can't add a friend without having that friend added to your account. Brands, on the other hand, can offer an endless array of discounts and promotions. If a search engine puts too much weight on likes then companies will simply run giveaways, contests, and pricing specials to collect votes.

"Likes" are so low effort they will be easily manipulated, even amongst real account holders. Over time these votes will be every bit as polluted as the link graph (or maybe moreso) because there are so many ways to influence people individually (click the below like button for $2 off your order, etc). Such offers might fall outside of the terms of service of some networks, but it is worth noting that when Google was promoting their reviews service they violated their own TOS.

In addition to likes being easy to manipulate, some flavors of social are heavily spammed because many people use the tools simply for reciprocal promotion. I likely have over 1,000 friends on Facebook & yet I have no idea who 90%+ of the people are. Am I recommending the stuff that some of those people recommend? An algorithm that assumes I am is likely leading people astray. And you might be friends with someone while knowing that their business life is quite shady when compared against their personal life (or the other way around). Are you endorsing everything a person does?

Further, anyone can invest in creating one piece of great content that scores tons of "likes" while operating in an exploitative manner elsewhere (and/or later). It is just like the wave of bulk unsolicited emails I get promoting 'non-profit' directories which one month later require 3 or 4 page scrolls to get past all the lead generation forms, while still claiming to be non-profit. :D

And social networks decay over time:

- Friendster lost out due to bad management, and MySpace the same.

- GeoCities closed last year. Delicious has had an upswing in spam, and Yahoo! has it scheduled for sunset soon.

- And even outside of those sorts of broad platform shifts, people change over time. Years ago I might have recommended working with someone like Patrick Gavin or Andy Hagans, but I wouldn't dare do so today. Likewise a particular tip or product might be exceptionally profitable for a period of time & then eventually decay into a near-certain money loser. Opportunities do not last forever. Marketers must change with the markets. Other products might have undesirable side effects that later come to the surface. Add in media based on more precise measurements & pageview chasing, and the conflicts between recommendations + media coverage will scare some folks into not participating. Associating recommendations with individuals will cause blowback as some of the seeds turn sour & people blame the person who recommended them to the person/product/service that screwed them over. The link graph allows those with undesirable reputations to slowly fade into obscurity, whereas old likes remain in place & can cause a social conflict years down the road.

Using Social Media For SEO Purposes

A link is a marker of attention.

Google will always want to count markers of attention. Blackhat trickery aside, in order to make social media work for you, and create side effects in terms of ranking, you should both build a presence in social media and craft messages that are likely to be spread by social media.

It's much like PR. Public Relations, as opposed to PageRank.

Start by defining your audience. Who do you know that talks to that audience? Try to get to know as many people as possible in your audience, especially the movers and shakers who already talk with them.

Get movers and shakers to spread your message. That may involve payment of some kind. Reciprocation, favor, cash, drugs, booze, hookers. Whatever works.

Joke.

Or - and this is probably the most effective path - craft a message so interesting, they'll find it hard not to spread.

Think about how you spin your message. Think in terms of benefit. How will the audience benefit from knowing this information? What is in it for them? What are they curious about? Feed their curiosity. Sometimes, it's not the message, but the way it is stated.

Plan ahead. Can you spin your message around a public event, like a holiday? Or a current event? Or a popular personality?

Get out and meet people face-to-face. People are much more likely to be receptive to your ideas if they really do know you.

But there is a danger in overthinking this stuff. A few well placed links to a site can still get you top ten in Google, even if you have no social media presence at all. Social media is just another string to the bow.