SEO For Designers & Programmers

In this guide, we'll introduce designers and developers to SEO, and recommend low-impact, highly effective strategies for integrating SEO into their workflow.

Background

What Is SEO?

Search Engine Optimization is the process of making a site more visible in search engine results. The higher the visibility, the more traffic a site should receive.

Why Bother With SEO?

Essentially, SEO is a marketing exercise.

If a site can't be found in search result listings, it is unlikely to be found by the 50% of all internet users who use a search engine every day (PDF).

Given Google's ubiquitous hold on the web, SEO can make or break a website.

Can't The Search Engines Just Work It Out?

The search engines are clever, but they're not perfect.

If you want to know the technical aspects of how a search engine works, check out "The Anatomy Of A Large-Scale Hypertextual Web Search Engine" written by Google's founders, Sergey Brin and Larry Page.

In short, a search engine is a database. The database is populated with data the search engine gathers by sending out spiders. A spider is a program that resembles a web browser, albeit a very basic one. It gathers the HTML code and sends it back to the database for sorting.
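To make the link-gathering step concrete, here is a minimal sketch of what a spider extracts from a page, written in Python with only the standard library. It parses an HTML string instead of fetching a live page. The class and function names are illustrative and are not taken from any real search engine.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin


class LinkCollector(HTMLParser):
    """Collects the href of every <a> tag, resolved against a base URL."""

    def __init__(self, base_url):
        super().__init__()
        self.base_url = base_url
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                # Resolve relative links to full URLs before queueing them for crawling.
                self.links.append(urljoin(self.base_url, href))


def extract_links(html, base_url):
    parser = LinkCollector(base_url)
    parser.feed(html)
    return parser.links


page = '<p>Welcome</p><a href="/about/">About</a> <a href="contact.htm">Contact</a>'
print(extract_links(page, "http://www.acme.com/"))
# ['http://www.acme.com/about/', 'http://www.acme.com/contact.htm']
```

A real spider would fetch each discovered URL, store the HTML, and repeat the process. This sketch covers only the parsing step.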

The main areas of concern are:

- Can the spider crawl the site?

- Will it rank the site pages above those of the competition?

SEO helps ensure both of those things happen.

What Does SEO Involve?

The two main elements of SEO are:

- On-site factors

- Inbound linking from external sites

The aim is to ensure the site pages can be crawled by search engine spiders, and increase the likelihood those pages will rank well. The exact formula by which a page ranks is a matter of guesswork. However, there are core aspects which, if integrated well, significantly increase the chances of ranking.

Firstly, pages must be crawlable by a search spider.

Secondly, the pages should be in a format likely to result in search traffic.

This typically involves aligning page content with keyword queries. For example, if a website owner wants to rank for the search term "web designers Seattle", they may include a page entitled "Web Designers Seattle" on the site, and that page will contain tightly focused information on that one topic. The ranking process is more complicated than this, but the important point to note is that searcher intent and site content should be closely aligned.

Thirdly, pages should have a number of links pointing to them from other sites. This aspect is largely out of the hands of designers and programmers, but if the content is not easy to link to, then problems can arise.

So, what aspects do you need to integrate into your process?

Let's start with designers*.

*There is a high degree of crossover between the tasks of designers and programmers, so you should familiarize yourself with both sets of guidelines below.

Notes For Designers

Failure to integrate SEO into the work process can lead to significant site redesign later on. However, integration is relatively painless if implemented early.

SEO is most effective when it forms part of the site brief and specification.

Separate Content From Presentation

The leaner the code, the faster a page will load. The faster a page loads, the more likely it is that the spider will be able to crawl the entire site. Bloated code can also cause problems with the weighting the search engine algorithms give to elements on the page. Style sheets, JavaScript and other code should be called from a separate file, rather than embedded.
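One quick way to find code that could move into external files is to count what is embedded in the page itself. The Python sketch below uses the standard library's `html.parser` to count inline `<script>` blocks (those without a `src`), `<style>` blocks, and `style` attributes. The function name and the choice of what to flag are illustrative assumptions, not an established audit.

```python
from html.parser import HTMLParser


class InlineCodeFinder(HTMLParser):
    """Counts script and style code embedded directly in the HTML."""

    def __init__(self):
        super().__init__()
        self.inline_scripts = 0
        self.inline_styles = 0
        self.style_attributes = 0

    def handle_starttag(self, tag, attrs):
        attr_map = dict(attrs)
        if tag == "script" and "src" not in attr_map:
            self.inline_scripts += 1  # code lives in the page, not an external .js file
        elif tag == "style":
            self.inline_styles += 1  # CSS embedded instead of linked via <link rel="stylesheet">
        if "style" in attr_map:
            self.style_attributes += 1  # presentation mixed into individual elements


def audit_inline_code(html):
    finder = InlineCodeFinder()
    finder.feed(html)
    return {
        "inline_scripts": finder.inline_scripts,
        "inline_styles": finder.inline_styles,
        "style_attributes": finder.style_attributes,
    }


lean = '<link rel="stylesheet" href="site.css"><script src="site.js"></script><p>Hello</p>'
bloated = '<style>p{color:red}</style><script>var x = 1;</script><p style="margin:0">Hello</p>'
print(audit_inline_code(lean))     # {'inline_scripts': 0, 'inline_styles': 0, 'style_attributes': 0}
print(audit_inline_code(bloated))  # {'inline_scripts': 1, 'inline_styles': 1, 'style_attributes': 1}
```

Some small inline snippets are harmless. The counts are a prompt for review, not a pass/fail test.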

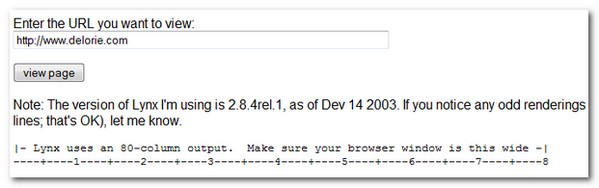

Navigate The Site With A Text Browser

A search engine spider is similar in function to a text-only browser. So, if you can navigate a site using a text-only browser, a search spider should be able to crawl it.

Whilst spiders are getting better at handling media content, they can have problems both crawling it and deciphering it.

If your site features a lot of media content, particularly in its navigation, make sure you have an alternative navigation option that a spider can follow. Spiders prefer HTML links that comply with W3C coding standards.
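Before running a page through a text browser, you can automatically catch one common problem: navigation links whose destination exists only in JavaScript. This Python sketch treats any `<a>` without a real URL (an empty href, `#`, or a `javascript:` href) as suspect. That rule is a simplifying heuristic and is not how any particular search engine decides what to follow.

```python
from html.parser import HTMLParser


class NavLinkAudit(HTMLParser):
    """Sorts <a> tags into plain links and links that rely on script."""

    def __init__(self):
        super().__init__()
        self.crawlable = []
        self.suspect = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        href = (dict(attrs).get("href") or "").strip()
        if not href or href == "#" or href.lower().startswith("javascript:"):
            # The destination depends on script (e.g. an onclick handler), not a plain URL.
            self.suspect.append(href or "(no href)")
        else:
            self.crawlable.append(href)


def audit_navigation(html):
    audit = NavLinkAudit()
    audit.feed(html)
    return audit.crawlable, audit.suspect


nav = (
    '<a href="/products/">Products</a>'
    '<a href="#" onclick="go(\'services\')">Services</a>'
    '<a href="javascript:openMenu()">Menu</a>'
)
crawlable, suspect = audit_navigation(nav)
print(crawlable)  # ['/products/']
print(suspect)    # ['#', 'javascript:openMenu()']
```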

Here's a text-only browser simulator

If your pages feature a lot of graphical or video content, use body content to describe it.

Flash

It's a myth that you can't use Flash.

The key to using Flash lies in the method of integration. Avoid using Flash as the main method of navigation and content delivery. Instead, embed Flash within HTML pages that contain other HTML content and links.

The search engines are getting better at crawling Flash, but they don't handle it as well as they handle HTML. If a search engine experiences problems indexing a site, it is likely to back out, leaving other parts of the site unindexed.

It can also be difficult, and sometimes impossible, to link to individual Flash pages. Pages that aren't well linked are less likely to show up in search results.

Adobe has a solution that involves an API and the Flash Player run-time, which allows search engines to read the content of SWF files. You can find out more in "The Black Magic Of Flash SEO".

If a site must remain all Flash, create a "printer friendly" version of the site that contains all the same text data as the Flash site. If this version of the site is well linked, the search engines will crawl it.

Keep in mind these are workarounds and are not ideal. If your competitors' sites make it easy for the search engine by not using Flash, they are likely to rank ahead of you, all else being equal.

Keep Site Architecture Simple

The basic rules of usability also apply to SEO.

Keep your important pages within easy reach. That typically means close to the top of the hierarchy.

If possible, try to incorporate a site map and make sure it is linked to from every page. That way, the spider is less likely to miss crawling content.
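You can see the effect of a site map on reachability by modelling the site as a link graph and counting clicks from the home page. The Python sketch below runs a breadth-first search over a hypothetical link graph. In practice, the graph would come from a crawl of the live site.

```python
from collections import deque


def click_depths(links, home):
    """Breadth-first search from the home page; returns the clicks needed to reach each page."""
    depths = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


# Hypothetical internal link graph: page -> pages it links to.
site = {
    "/": ["/products/", "/about/", "/sitemap/"],
    "/products/": ["/products/widgets/"],
    "/products/widgets/": ["/products/widgets/blue/"],
    "/sitemap/": ["/products/", "/about/", "/products/widgets/blue/"],
    "/orphan/": [],
}

depths = click_depths(site, "/")
print(depths["/products/widgets/blue/"])  # 2 (via the site map, instead of 3)
print("/orphan/" in depths)               # False: nothing links to it
```

Pages that never appear in the result are orphans, and a spider following links from the home page would never find them.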

Use Text Headings

Headings should use HTML text, as opposed to graphics. Search engines place importance on keyword terms contained within links and heading tags.

If you need to use graphic headings for some pages, ensure the alt attributes are populated, and/or that the page degrades to a text default if graphics are turned off.
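A simple check can catch missing alt text before it reaches production. This Python sketch lists every `<img>` whose `alt` attribute is missing or empty. An empty `alt` is legitimate for purely decorative images such as spacers, so treat the output as a list to review rather than a list of errors.

```python
from html.parser import HTMLParser


class AltChecker(HTMLParser):
    """Records the src of every <img> whose alt attribute is missing or empty."""

    def __init__(self):
        super().__init__()
        self.missing_alt = []

    def handle_starttag(self, tag, attrs):
        if tag == "img":
            attr_map = dict(attrs)
            if not (attr_map.get("alt") or "").strip():
                self.missing_alt.append(attr_map.get("src", "(no src)"))


def images_missing_alt(html):
    checker = AltChecker()
    checker.feed(html)
    return checker.missing_alt


page = (
    '<h1><img src="heading-web-design.png" alt="Web Designers Seattle"></h1>'
    '<img src="logo.png"><img src="spacer.gif" alt="">'
)
print(images_missing_alt(page))  # ['logo.png', 'spacer.gif']
```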

It is also highly recommended that you repeat the heading in HTML text somewhere else on the page, or in the link text of any page pointing to that page.

Mark-Up The Code

The following tags should be populated with keyword data. The SEO will want to alter the content of these fields.

- Title

- Alt

- Meta Description

Other tags often manipulated by SEOs include:

- H1, H2, H3 etc

- Bold or Strong

- Hyperlinks - particularly the link text

- Body text
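To review these fields page by page, it helps to pull them out of the HTML. The Python sketch below uses only the standard library to extract the title, meta description, and `<h1>` text. The class name and output format are assumptions. A fuller version would also collect lower-level headings, alt text, and link text.

```python
from html.parser import HTMLParser


class OnPageExtractor(HTMLParser):
    """Pulls out the on-page fields an SEO is most likely to edit."""

    def __init__(self):
        super().__init__()
        self.fields = {"title": "", "meta_description": "", "h1": []}
        self._current = None  # the tag whose text is currently being captured

    def handle_starttag(self, tag, attrs):
        attr_map = dict(attrs)
        if tag == "meta" and (attr_map.get("name") or "").lower() == "description":
            self.fields["meta_description"] = attr_map.get("content") or ""
        elif tag in ("title", "h1"):
            self._current = tag
            if tag == "h1":
                self.fields["h1"].append("")

    def handle_endtag(self, tag):
        if tag == self._current:
            self._current = None

    def handle_data(self, data):
        # Text inside nested tags (e.g. <strong> within <h1>) still counts toward the heading.
        if self._current == "title":
            self.fields["title"] += data
        elif self._current == "h1":
            self.fields["h1"][-1] += data


page = """<html><head>
<title>Web Designers Seattle | Acme</title>
<meta name="description" content="Acme builds fast, search-friendly sites in Seattle.">
</head><body><h1>Web Designers <strong>Seattle</strong></h1></body></html>"""

extractor = OnPageExtractor()
extractor.feed(page)
print(extractor.fields)
```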

Frames

Where possible, avoid using frames.

If the site isn't coded correctly, the search engine may simply index the master frame, or the search visitor may arrive at a page that is impossible to navigate, because the page is viewed out of context.

If you do use frames, use a simulation spider to highlight any problem areas. You could also use the noframes tag, although the search engines aren't overly fond of data contained in this tag due to abuse, i.e., showing one thing to viewers and something different to spiders.

For more specific information on frames, check out Search Engines And Frames.

Notes For Developers

Use Descriptive File Names

The search engines look for keywords in file names in order to help make sense of a page. Where possible, use descriptive and meaningful file names, such as acme.com/hello-world.htm, as opposed to numbers, such as acme.com/1234567.php.

Likewise, directories should also be descriptive and be clearly organized:

Good: cars.com/manufacturer/ferrari/

Poor: cars.com/dir/manf/fer/2/
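If page names are generated from titles, a small helper can produce descriptive file and directory names automatically. This Python sketch shows one common approach, often called a "slug". The exact rules, such as folding accents to ASCII and collapsing punctuation into hyphens, are choices you may want to adjust for your site.

```python
import re
import unicodedata


def slugify(text):
    """Turn a page title into a lowercase, hyphen-separated URL segment."""
    # Fold accented characters to plain ASCII where possible (e.g. "é" -> "e").
    text = unicodedata.normalize("NFKD", text).encode("ascii", "ignore").decode("ascii")
    text = text.lower()
    # Replace every run of non-alphanumeric characters with a single hyphen.
    text = re.sub(r"[^a-z0-9]+", "-", text)
    return text.strip("-")


print(slugify("Hello World!"))               # hello-world
print(slugify("Ferrari 458 Italia (2010)"))  # ferrari-458-italia-2010
print("cars.com/manufacturer/" + slugify("Ferrari") + "/")  # cars.com/manufacturer/ferrari/
```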

If you need to use coded URLs, a suitable workaround is to use URL rewriting. Here's a tutorial about URL redirection, and other useful URL techniques.
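URL rewriting is usually configured in the web server (Apache's mod_rewrite is a common example). The idea is that visitors and spiders see a descriptive path, and the server maps it to the coded URL internally. The Python sketch below models that mapping with regular expressions. The rules and the `manf.php` script are hypothetical.

```python
import re

# Hypothetical rewrite rules: public, descriptive path pattern -> internal coded URL.
REWRITE_RULES = [
    (re.compile(r"^/manufacturer/([a-z0-9-]+)/$"), r"/dir/manf.php?name=\1"),
    (re.compile(r"^/manufacturer/([a-z0-9-]+)/(\d+)/$"), r"/dir/manf.php?name=\1&page=\2"),
]


def rewrite(path):
    """Return the internal URL for a public path, or the path unchanged if no rule matches."""
    for pattern, target in REWRITE_RULES:
        if pattern.match(path):
            return pattern.sub(target, path)
    return path


print(rewrite("/manufacturer/ferrari/"))    # /dir/manf.php?name=ferrari
print(rewrite("/manufacturer/ferrari/2/"))  # /dir/manf.php?name=ferrari&page=2
print(rewrite("/about/"))                   # /about/
```

Because the rewrite happens on the server, only the descriptive form ever needs to appear in links.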

URL Structure & Canonicalization

Try to avoid placing too many parameters in URLs. This will dilute the descriptive benefits of the file name, making it difficult for the search engine to derive meaning.

Good: cars.com/aboutus.php

Poor: cars.com/aboutus.php?2323234443434=34&data=12&tracking=569djf88377656
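Where parameters can't be avoided, one mitigation is to link only to a clean version of each URL. This Python sketch removes parameters that don't affect page content. Which parameters count as noise is a site-specific assumption, and the set below is illustrative.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Parameters assumed to carry no content meaning on this hypothetical site.
IGNORED_PARAMS = {"tracking", "sessionid", "utm_source", "utm_medium", "utm_campaign"}


def strip_noise_params(url):
    """Drop parameters that don't change page content, keeping the rest in order."""
    parts = urlsplit(url)
    kept = [(k, v) for k, v in parse_qsl(parts.query, keep_blank_values=True)
            if k.lower() not in IGNORED_PARAMS]
    return urlunsplit((parts.scheme, parts.netloc, parts.path, urlencode(kept), parts.fragment))


print(strip_noise_params("http://cars.com/aboutus.php?tracking=569djf88377656"))
# http://cars.com/aboutus.php
print(strip_noise_params("http://cars.com/list.php?make=ferrari&tracking=569djf"))
# http://cars.com/list.php?make=ferrari
```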

Canonicalization is also an issue for SEO.

Canonicalization is the process by which URLs are standardized. For example, www.acme.com and www.acme.com/ are treated as the same page, even though the syntax of the URL is different. Problems can occur when the search engine doesn't normalize URLs properly. For example, a search engine might see http://www.acme.com and http://acme.com as different pages. In this instance, the search engine has the host names confused.
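On your own side, you can reduce the problem by always generating links in one canonical form. This Python sketch normalizes a few common variants for a hypothetical site that has chosen `www.acme.com` as its preferred host. The rules are assumptions to adapt, not a complete normalization scheme: lowercase the host, add a trailing slash on the root, drop index file names, and drop fragments.

```python
from urllib.parse import urlsplit, urlunsplit

PREFERRED_HOST = "www.acme.com"  # assumption: the site has standardized on the www form
INDEX_FILES = ("index.htm", "index.html", "index.php")


def canonicalize(url):
    """Map equivalent spellings of an absolute URL on this site to one preferred form."""
    parts = urlsplit(url)
    scheme = parts.scheme.lower() or "http"
    host = parts.netloc.lower()  # host names are case-insensitive; paths are not
    if host in ("acme.com", "www.acme.com"):
        host = PREFERRED_HOST
    path = parts.path or "/"  # "http://www.acme.com" and "http://www.acme.com/" are the same page
    for index in INDEX_FILES:
        if path.endswith("/" + index):
            path = path[: -len(index)]  # link to the folder, not the file name
            break
    return urlunsplit((scheme, host, path, parts.query, ""))  # fragment dropped


print(canonicalize("http://acme.com"))                            # http://www.acme.com/
print(canonicalize("http://WWW.ACME.COM/index.php"))              # http://www.acme.com/
print(canonicalize("http://www.acme.com/folder/index.html#top"))  # http://www.acme.com/folder/
```

Generating every internal link through one function like this also helps if the CMS changes later, because the preferred form lives in one place.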

We've written a detailed post on Canonicalization entitled "URL Canonicalization: The Missing Manual".

It is preferable to link to a folder URL, e.g. site.com/folder/, as opposed to a URL which specifies the file name. The link structures SEOs set up are sensitive to changes in the way the document is specified. For example, inbound links may be lost, which may lead to a loss in ranking.

Major switches later on - say, a change in CMS - can cause headaches and a considerable amount of reworking if all the URLs are specified explicitly.

Links

Internal links should follow W3C guidelines. Search engines place value on keywords contained in standard text links.

It is best to avoid coded, graphical or scripted links. If using these types of links, duplicate the linking structure elsewhere on the page. In the footer, for example.

There should be no more than 100 links per page, and ideally a lot less.
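Counting links is easy to automate across a site. This Python sketch counts `<a>` elements that have an `href` and compares the total to the 100-link ceiling mentioned above. The helper names are hypothetical, and the generated page exists only to exercise the check.

```python
from html.parser import HTMLParser

LINK_LIMIT = 100  # the per-page ceiling suggested above


class LinkCounter(HTMLParser):
    """Counts <a> elements that carry an href."""

    def __init__(self):
        super().__init__()
        self.count = 0

    def handle_starttag(self, tag, attrs):
        if tag == "a" and dict(attrs).get("href"):
            self.count += 1


def too_many_links(html, limit=LINK_LIMIT):
    """Return (link_count, over_limit) for one page of HTML."""
    counter = LinkCounter()
    counter.feed(html)
    return counter.count, counter.count > limit


page = "".join(f'<a href="/item/{i}/">Item {i}</a>' for i in range(120))
print(too_many_links(page))  # (120, True)
```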

Create A Google SiteMap

A Google Site Map is an XML file used to help Google crawl websites. Here are the Site Map guidelines and instructions.

Sitemaps are easy to create, and provide an elegant and painless workaround if your internal linking structure is not optimized.

By including a Site Map, you also provide a fallback for any crawl-related issues you may not be aware of.
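A site map in this sense follows the Sitemaps protocol (sitemaps.org). The file is a `urlset` element containing one `url` entry per page, and each entry has a required `loc` and optional fields such as `lastmod`. The Python sketch below builds one with the standard library. The page list is hypothetical and would normally come from your CMS or a crawl.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages):
    """Build sitemap XML from (url, last_modified) pairs; last_modified may be None."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in pages:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc  # ElementTree escapes characters such as &
        if lastmod:
            ET.SubElement(url, "lastmod").text = lastmod  # W3C date format, e.g. 2009-05-01
    return ET.tostring(urlset, encoding="unicode")


xml_body = build_sitemap([
    ("http://www.acme.com/", "2009-05-01"),
    ("http://www.acme.com/web-designers-seattle/", None),
])
print('<?xml version="1.0" encoding="UTF-8"?>\n' + xml_body)
```

The printed document can be saved as sitemap.xml at the site root and submitted through Google's webmaster tools.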

Custom 404

If a search engine spider encounters a 404 page, it may back out if there are no links to follow off that page.

Create a customized 404 page that links to parts of the site that contain links and aren't likely to be removed, i.e., the home page and site map.
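Most sites set up a custom 404 page in their web server configuration (for example, Apache's `ErrorDocument 404` directive). The Python sketch below shows the same idea with the standard library's `http.server`. It builds a "not found" page with links to stable pages and keeps the real 404 status code. The link list is hypothetical, and for illustration the handler answers every request with the 404 page.

```python
from http.server import BaseHTTPRequestHandler, HTTPServer

# Hypothetical stable pages that should always exist on the site.
FALLBACK_LINKS = [("/", "Home page"), ("/sitemap/", "Site map")]


def render_404():
    """Build a "not found" page that gives visitors and spiders somewhere to go."""
    items = "".join(f'<li><a href="{href}">{label}</a></li>' for href, label in FALLBACK_LINKS)
    return (
        "<html><head><title>Page not found</title></head><body>"
        "<h1>Sorry, we couldn't find that page</h1>"
        f"<p>Try one of these instead:</p><ul>{items}</ul>"
        "</body></html>"
    )


class NotFoundHandler(BaseHTTPRequestHandler):
    def do_GET(self):
        body = render_404().encode("utf-8")
        # Keep the 404 status: a friendly page served with 200 can be
        # indexed as if it were genuine content.
        self.send_response(404)
        self.send_header("Content-Type", "text/html; charset=utf-8")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)


# To try it locally:
# HTTPServer(("localhost", 8000), NotFoundHandler).serve_forever()
```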

Robots.txt

The robots.txt file is the first file a spider reads. In it, you can specify any areas you don't want the search engine to index. Not all search engines will obey the directives specified in robots.txt, so don't use it as your sole means of keeping data out of the public domain.
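Python's standard library includes a robots.txt parser, `urllib.robotparser`. It lets you check how a compliant spider should interpret your rules before you publish them. The file contents below are a hypothetical example. The check only shows what well-behaved crawlers are expected to do and does not keep anything private.

```python
from urllib.robotparser import RobotFileParser

# A hypothetical robots.txt that keeps spiders out of two areas of the site.
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Disallow: /search

Sitemap: http://www.acme.com/sitemap.xml
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

for url in ("http://www.acme.com/products/",
            "http://www.acme.com/admin/login.php",
            "http://www.acme.com/search?q=ferrari"):
    print(url, parser.can_fetch("*", url))
# http://www.acme.com/products/ True
# http://www.acme.com/admin/login.php False
# http://www.acme.com/search?q=ferrari False
```

`Disallow` rules match by prefix, so `Disallow: /search` also blocks paths such as /search-results/. Add a trailing slash if you only mean the folder.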

You can find out more about the robots.txt file here.

Points Of Conflict Between SEO & Design/Development, And How To Resolve Them

A lot of conflict can be resolved simply by involving the SEO early in the site development process. Doing so means far less pain for designers and developers than if SEO is bolted on as an afterthought. It's like building a house, then deciding you need to relocate the kitchen.

SEO should be part of the design and development specification.

But what if you have a legacy site?

Here are some requirements you're likely to hear from SEOs:

1. The Site Design Needs To Be Changed

SEO is mostly about providing the search engine with text data. If a site doesn't have much in the way of text, the SEO will look to add text to existing pages, and beef out the site with additional text content.

There was a time when SEOs only needed to add metadata; however, search engines no longer pay much attention to metadata. They look at two areas: visible site content and link structures.

The biggest impact to design is likely to be on the home page. The home page usually carries the most weight with search engines.

Here are some suggestions for preserving design and integrating SEO:

- Add text below the fold

- Replace graphical headings with text headings

- Separate form from content

- Include a printer-friendly version of the site

2. We Need To Provide Link-Worthy Content

Search engines place a lot of value on links from external sites. If your site doesn't contain much in the way of notable content, it will be difficult to get links.

Consider integrating a publishing model. For example, you could add a blog, news feed or forum.

Do you have a lot of offline content that could be published online? Whilst such content may not be considered important in terms of your web presence, it can be used to cast a wide net. The more content you have, the more pages you'll likely see in the index, and the more search visitors you'll receive. This content can be relegated in the hierarchy so that non-search visitors aren't distracted by it, and your main site design isn't affected.

Such content is especially useful if it is informational, as opposed to commercial, in nature. It is often difficult to get links to purely commercial content.

Tools & Techniques For Monitoring, Diagnosing & Rectifying SEO Problems

If a site can't be crawled, then it won't appear in search engine result pages. Use the following tools to detect and fix problems.

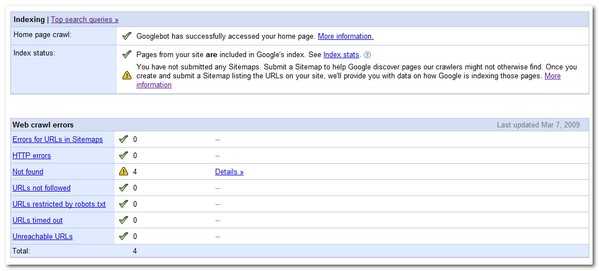

Google Webmaster Central

Google Webmaster Central provides a host of diagnostic information and is available free from Google. The service will tell you what pages couldn't be crawled, what the errors were, how many duplicate tags you have, and visitor information.

You can also build and submit your XML site map, which can solve a number of crawl issues.
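Webmaster Central reports duplicate tags, and you can run a similar check on your own crawl data between reports. This Python sketch assumes you already have a mapping from each URL to its title text, for example gathered with an HTML parser during a crawl. It groups pages that share a title. It is an illustration only and is not how Google computes its report.

```python
from collections import defaultdict


def duplicate_titles(pages):
    """Group URLs that share the same title text (ignoring case and extra whitespace)."""
    by_title = defaultdict(list)
    for url, title in pages.items():
        key = " ".join(title.split()).lower()
        by_title[key].append(url)
    return {title: urls for title, urls in by_title.items() if len(urls) > 1}


# Hypothetical crawl results: URL -> <title> text.
crawl = {
    "http://www.acme.com/": "Acme Web Design",
    "http://www.acme.com/about/": "Acme  web design",
    "http://www.acme.com/web-designers-seattle/": "Web Designers Seattle | Acme",
}
print(duplicate_titles(crawl))
# {'acme web design': ['http://www.acme.com/', 'http://www.acme.com/about/']}
```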

Text Browser

If you can successfully navigate around your site using a text browser, then your site can be crawled by a spider.

Pay careful attention to any pages that present navigation problems, and resolve them by placing text links on/to these pages.

SEOBook Toolbar

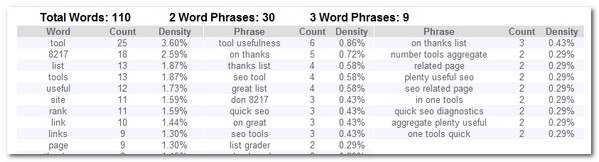

The SEOBook Toolbar provides details about page popularity, the level of competition, and on-page diagnostics.

One way you can use this tool is to determine if the search engine knows what your page is about by looking at the frequency of words on the page. If those words are mostly off-topic, you may need to include more keyword phrases throughout your copy.
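You can also approximate this check yourself: count the most frequent words in your page copy and see whether your target keywords are near the top. This Python sketch is a rough stand-in for the toolbar's word-frequency view, not a reproduction of it. The short stop-word list is an assumption, and real tools use much longer ones.

```python
import re
from collections import Counter

# A deliberately tiny stop-word list for illustration.
STOP_WORDS = {"a", "an", "and", "the", "of", "to", "in", "for", "we", "our", "is", "are", "with"}


def top_terms(text, n=5):
    """Return the n most frequent non-stop-words in a block of page copy."""
    words = re.findall(r"[a-z0-9']+", text.lower())
    return Counter(w for w in words if w not in STOP_WORDS).most_common(n)


copy = """We are web designers in Seattle. Our Seattle web design team builds
fast sites for Seattle businesses, and we love coffee."""
print(top_terms(copy, 2))  # [('seattle', 3), ('web', 2)]
```

If words like "coffee" dominated the list instead of "seattle" and "web", the page would probably need more on-topic copy.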

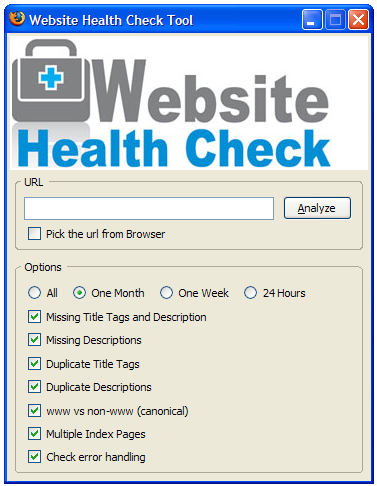

SEOBook Health Check

The SEOBook Health Check tool also provides a wealth of troubleshooting data. You can determine if you've got error-handling issues, duplicate content, and canonical URL issues.

SEO Resources For Designers & Developers

- SEO Glossary

- Baking SEO Into The Company Workflow

- SEO As A Change Process

- SEO Guide For Designers

- How To Determine The Effectiveness Of Your Internal Link Structure

- On-Page Optimization

- Site Architecture

- Web Developers Cheat Sheet

- Robots Control

- Separate content from presentation with XSLT, SimpleXML, and PHP 5
