Review of Jim Boykin's Free Broken Link Tool

Jim Boykin recently released a free but powerful tool that can help you check for broken links and redirects, in addition to helping you generate a Google Sitemap.

Since it's a free, web-based tool, you might think it's a bit lightweight, but you'd be wrong :) It can crawl up to 10,000 internal pages, with up to 5 runs per day per user.

In addition to the features mentioned above, the tool offers other helpful data points, the ability to export the data to CSV/Excel or HTML, and the ability to generate a Google XML Sitemap.

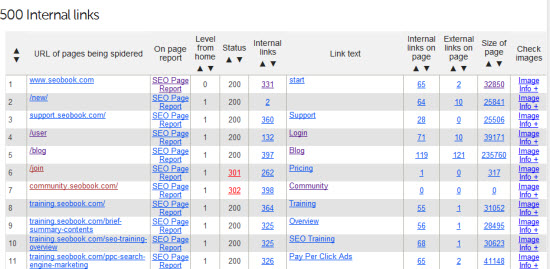

The other data points available to you are:

- URL of the page spidered

- Link to an On-Page SEO report for that URL

- Link depth from the home page

- HTTP status code

- Internal links to the page (with the ability to get a report off the in-links themselves)

- External links on the page (a one-click report is available to see the outlinks)

- Overall size of the page, with a link to Google's PageSpeed tool (cool!)

- Link to their Image check tool (size, alt text, header check of the page)

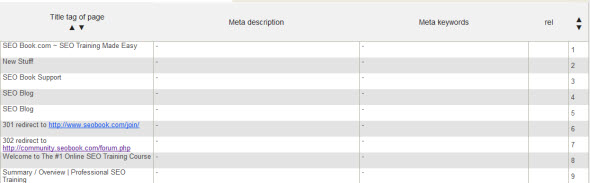

- Rows for Title Tag, Meta Description, and Meta Keywords

- Canonical tag field

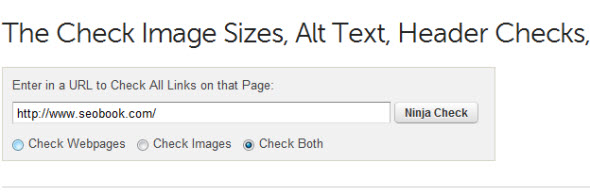
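Both the link depth and the internal-link counts in the list above can be derived from the site's internal link graph. The sketch below is not the tool's own code. It assumes the crawl has already produced a hypothetical `graph` dictionary that maps each page to the internal URLs it links to. Depth comes from a breadth-first search starting at the home page, because the first time BFS reaches a page is along a shortest click path.

```python
from collections import deque


def depth_and_inlinks(link_graph: dict[str, list[str]], home: str):
    """Return (depth, inlinks) for an internal link graph.

    depth:   minimum number of clicks from `home` (BFS distance)
    inlinks: number of distinct internal pages linking to each page
    """
    depth = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in link_graph.get(page, []):
            if target not in depth:  # first visit in BFS = shortest path
                depth[target] = depth[page] + 1
                queue.append(target)

    inlinks: dict[str, int] = {}
    for source, targets in link_graph.items():
        for target in set(targets):  # count each linking page once
            if target != source:     # ignore self-links
                inlinks[target] = inlinks.get(target, 0) + 1
    return depth, inlinks


# Hypothetical three-level site
graph = {
    "/": ["/blog", "/about"],
    "/blog": ["/blog/post-1", "/"],
    "/about": ["/blog"],
}
depth, inlinks = depth_and_inlinks(graph, "/")
print(depth)             # {'/': 0, '/blog': 1, '/about': 1, '/blog/post-1': 2}
print(inlinks["/blog"])  # 2
```

Counting each linking page only once (through `set(targets)`) is a design choice. A report could just as reasonably count every individual link.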

Using the Tool

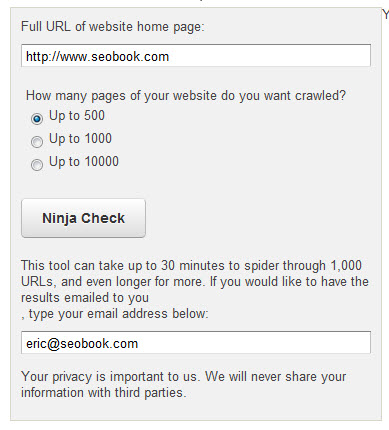

The tool is really easy to use: just enter the domain, the crawl depth, and your email address if you'd rather not watch the magic happen live :)

For larger crawls, entering your email makes a lot of sense, as big crawls can take a while.

Click Ninja Check and off you go!

Working With The Data

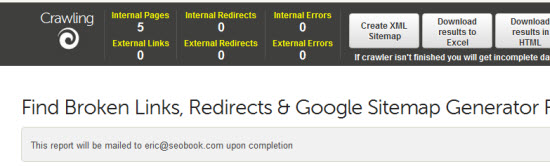

The top of the results page auto-updates and shows you:

- Status of the report

- Internal pages crawled

- External links found

- Internal redirects found

- External redirects found

- Internal and External errors
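Here is a minimal sketch of how counters like these could be tallied. It rests on three assumptions: each crawled URL has been reduced to a hypothetical `(url, is_internal, status)` tuple, 3xx codes count as redirects, and 4xx/5xx codes or a failed request (`None`) count as errors. This post doesn't document the tool's actual rules.

```python
from collections import Counter


def summarize(results):
    """Tally (url, is_internal, status) tuples into summary counters.

    status is None when the request failed outright (e.g. DNS error, timeout).
    """
    counts = Counter()
    for _url, is_internal, status in results:
        scope = "internal" if is_internal else "external"
        counts[f"{scope}_urls"] += 1
        if status is not None and 300 <= status < 400:
            counts[f"{scope}_redirects"] += 1
        elif status is None or status >= 400:
            counts[f"{scope}_errors"] += 1
    return counts


crawl = [
    ("https://example.com/", True, 200),
    ("https://example.com/old-page", True, 301),
    ("https://example.com/missing", True, 404),
    ("https://other.example.org/", False, 200),
    ("https://gone.example.net/", False, None),
]
summary = summarize(crawl)
print(summary["internal_errors"], summary["external_errors"])  # 1 1
```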

Clicking any of the yellow text links takes you to that specific report table (these tables sit below the main results, which I'll cover next).

This is also where you can export the XML sitemap and download the results to Excel or HTML.
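For reference, a Google XML Sitemap follows the sitemaps.org protocol. It has a `urlset` root in the `http://www.sitemaps.org/schemas/sitemap/0.9` namespace, with one `<url><loc>` entry per page. The sketch below builds one with Python's standard `xml.etree.ElementTree`. The `build_sitemap` name is hypothetical. Including only URLs that returned 200 is an assumption about what belongs in a sitemap, not a description of how this tool decides.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages: list[tuple[str, int]]) -> str:
    """Return sitemap XML for the (url, status) pairs that returned 200."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    seen = set()
    for url, status in pages:
        if status != 200 or url in seen:  # skip redirects, errors, duplicates
            continue
        seen.add(url)
        url_el = ET.SubElement(urlset, "url")
        ET.SubElement(url_el, "loc").text = url  # ElementTree escapes "&"
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


xml = build_sitemap([
    ("https://example.com/", 200),
    ("https://example.com/old", 301),
    ("https://example.com/?a=1&b=2", 200),
    ("https://example.com/", 200),
])
print(xml)
```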

The results pane (broken up into 2 images given the horizontal length of the table) looks like:

More to the right is:

The On Page Report

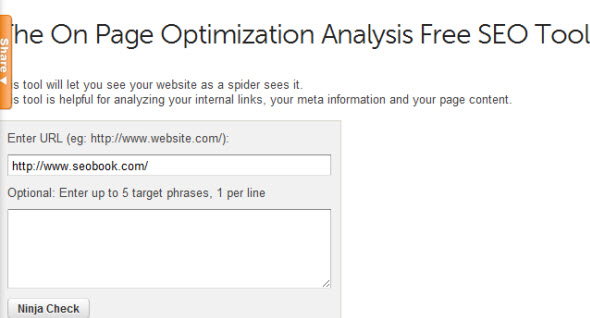

Clicking the On Page Report link in the first table brings you to their free On-Page Optimization Analysis tool, where you enter the URL and 5 targeted phrases.

Their tool does the following:

- Metadata tool: Displays text in title tags and meta elements

- Keyword density tool: Reveals statistics for linked and unlinked content

- Keyword optimization tool: Shows the number of words used in the content, including anchor text of internal and external links

- Link Accounting tool: Displays the number and types of links used

- Header check tool: Shows HTTP Status Response codes for links

- Source code tool: Provides quick access to on-page HTML source code
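The metadata view and the main crawl table both show the title tag, meta description, meta keywords and canonical URL. Extracting those can be sketched with the standard library's `html.parser`. The class and function names are hypothetical. The parser only reads the HTML it is given and does not fetch anything.

```python
from html.parser import HTMLParser


class MetadataParser(HTMLParser):
    """Collect <title>, meta description/keywords and rel="canonical"."""

    def __init__(self):
        super().__init__()
        self.meta = {"title": "", "description": None,
                     "keywords": None, "canonical": None}
        self._in_title = False

    def handle_starttag(self, tag, attrs):
        attrs = {k: (v or "") for k, v in attrs}  # valueless attrs become ""
        name = attrs.get("name", "").lower()
        if tag == "title":
            self._in_title = True
        elif tag == "meta" and name in ("description", "keywords"):
            self.meta[name] = attrs.get("content", "")
        elif tag == "link" and "canonical" in attrs.get("rel", "").lower().split():
            self.meta["canonical"] = attrs.get("href")

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        if self._in_title:
            self.meta["title"] += data


def extract_metadata(html: str) -> dict:
    parser = MetadataParser()
    parser.feed(html)
    parser.close()
    parser.meta["title"] = parser.meta["title"].strip()
    return parser.meta


page = ('<html><head><title> Blue Widgets </title>'
        '<meta name="Description" content="Cheap blue widgets">'
        '<link rel="canonical" href="https://example.com/widgets/"></head></html>')
print(extract_metadata(page))
```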

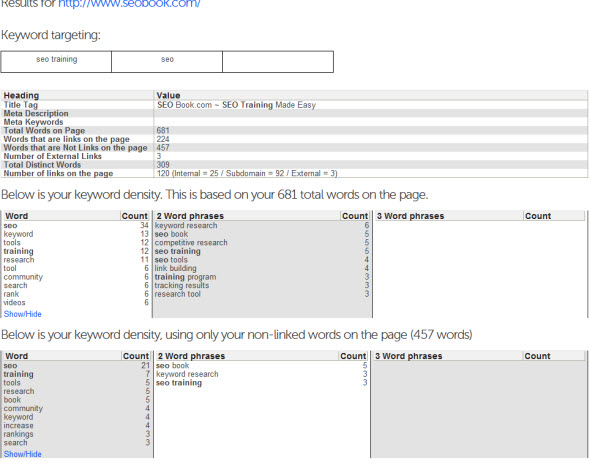

The data is presented in the same table form as the original crawl. This first section shows the selected domain and keywords in addition to on-page items like your title tag, meta description, meta keywords, external links on the page, and words on the page (linked and non-linked text).

You can also see the density of all words on the page, as well as the density of only the words that are not part of links.
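One way to compute both densities is to split the page's visible text into words inside `<a>` elements and words outside them. This sketch rests on several assumptions. The word regex and the lowercasing are illustrative choices. Density is defined here as keyword occurrences divided by total words, times 100, which is not the tool's documented formula. Only single-word keywords are handled. The same split also gives the total, linked, non-linked and unique word counts.

```python
import re
from html.parser import HTMLParser

WORD_RE = re.compile(r"[a-z0-9']+")  # assumed definition of a "word"


class TextSplitter(HTMLParser):
    """Split visible text into linked (inside <a>) and non-linked words."""

    def __init__(self):
        super().__init__()
        self.linked, self.unlinked = [], []
        self._in_link = 0  # nesting depth of <a> elements
        self._skip = 0     # inside <script>/<style>, i.e. not visible text

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            self._in_link += 1
        elif tag in ("script", "style"):
            self._skip += 1

    def handle_endtag(self, tag):
        if tag == "a" and self._in_link:
            self._in_link -= 1
        elif tag in ("script", "style") and self._skip:
            self._skip -= 1

    def handle_data(self, data):
        if not self._skip:
            words = WORD_RE.findall(data.lower())
            (self.linked if self._in_link else self.unlinked).extend(words)


def density(words, keyword):
    """Percentage of `words` equal to a single-word `keyword`."""
    return 100 * words.count(keyword) / len(words) if words else 0.0


p = TextSplitter()
p.feed('<p>Blue widgets are great. <a href="/blue">Blue widgets</a> ship free.</p>')
p.close()
all_words = p.linked + p.unlinked
print(density(all_words, "blue"))   # 25.0 (2 of 8 words)
print(density(p.unlinked, "blue"))  # 1 of 6 non-linked words
# total, linked, non-linked, unique
print(len(all_words), len(p.linked), len(p.unlinked), len(set(all_words)))  # 8 2 6 6
```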

Next up is a word breakdown as well as the internal links on the page (with titles, link text, and response codes).

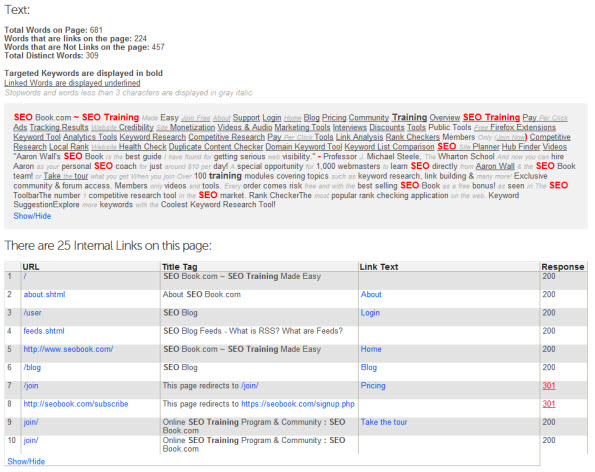

The word cloud displays targeted keywords in red, linked words underlined, and non-linked words as regular text.

You'll see a total word count, non-linked word count, linked word count, and total unique words on the page.

This can be helpful for digging deep into on-page optimization factors, as well as your internal link layout, on a per-page basis:

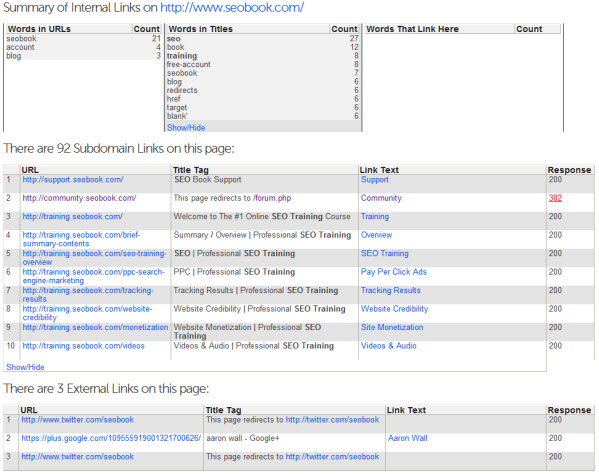

Next, you'll get a nice breakdown of internal links, including their anchor text, titles, and the words in the URL.

You can also see any links to subdomains, as well as external links (with anchor text and response codes).

Each section has a show/hide option where you can see all the data or just a snippet.
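Splitting links into internal, subdomain and external comes down to comparing hostnames. Here is a minimal sketch using `urllib.parse`. It assumes that a leading `www.` is ignored and that any host ending in `.<site host>` counts as a subdomain. The `link_scope` helper is hypothetical.

```python
from urllib.parse import urljoin, urlsplit


def link_scope(href: str, page_url: str) -> str:
    """Classify a link found on `page_url` as internal, subdomain or external."""
    absolute = urljoin(page_url, href)  # resolve relative links like "/about"
    host = (urlsplit(absolute).hostname or "").removeprefix("www.")
    site = (urlsplit(page_url).hostname or "").removeprefix("www.")
    if host == site:
        return "internal"
    if host.endswith("." + site):
        return "subdomain"
    return "external"


page = "https://www.example.com/blog/"
for href in ["/about", "post-1", "https://shop.example.com/", "https://example.org/"]:
    print(href, link_scope(href, page))
```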

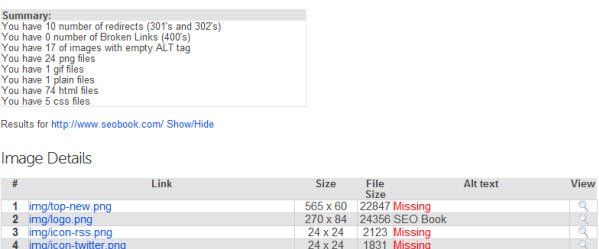

Another report you get access to is the image checker (accessible from the "Check Image Info" option in the main report).

Here you'll get a report that shows a breakdown of the files and redirects on the page, along with the image link, image dimensions, file size, alt text, and a spot to click to view the image.
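A basic alt-text check can be sketched by collecting the `src` and `alt` of every `<img>` tag. This hypothetical helper treats an absent or blank `alt` as missing. Keep in mind that decorative images may legitimately use `alt=""`. Reporting dimensions and file size would require fetching each image, which this sketch does not do.

```python
from html.parser import HTMLParser


class ImageCollector(HTMLParser):
    """Record the src and alt attributes of every <img> tag on a page."""

    def __init__(self):
        super().__init__()
        self.images = []

    def handle_starttag(self, tag, attrs):
        if tag == "img":
            attrs = dict(attrs)
            self.images.append({"src": attrs.get("src"), "alt": attrs.get("alt")})


def images_missing_alt(html: str) -> list:
    """Return the src of each image whose alt is absent or only whitespace."""
    parser = ImageCollector()
    parser.feed(html)
    parser.close()
    return [img["src"] for img in parser.images if not (img["alt"] or "").strip()]


html = '<img src="/a.png" alt="Logo"><img src="/b.png"><img src="/c.png" alt="  ">'
print(images_missing_alt(html))  # ['/b.png', '/c.png']
```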

After that comes the link section, which shows the actual link, the file type (HTML, CSS, etc.), the status code, and a link check result (broken, redirect, OK, and so on).
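The file-type and link-check columns can be approximated from the URL and the status code alone. In this sketch the file type is guessed from the path extension, defaulting to `html` when there is none, and status codes are bucketed by range. Both rules are assumptions, because the tool's exact labels aren't documented here. The `link_check` name is hypothetical.

```python
import posixpath
from typing import Optional
from urllib.parse import urlsplit


def link_check(url: str, status: Optional[int]) -> tuple[str, str]:
    """Return (file_type, verdict) for a link and its HTTP status code."""
    ext = posixpath.splitext(urlsplit(url).path)[1].lstrip(".").lower()
    file_type = ext or "html"  # assume extensionless paths are pages
    if status is None:
        verdict = "no response"
    elif 200 <= status < 300:
        verdict = "ok"
    elif 300 <= status < 400:
        verdict = "redirect"
    elif 400 <= status < 600:
        verdict = "broken"  # client (4xx) and server (5xx) errors
    else:
        verdict = "unknown"
    return file_type, verdict


print(link_check("https://example.com/css/site.css", 200))  # ('css', 'ok')
print(link_check("https://example.com/about/", 301))        # ('html', 'redirect')
```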

Additional Reports

The main report referenced at the beginning of this post is the Internal Page Report. There are five additional reports:

- External Links

- Internal Redirects

- External Redirects

- Internal Errors

- External Errors

External Links

This report will show you:

- HTTP Status

- Internal links to the external link

- Actual link URL

- Link anchor text

- Where the link was first found on the domain

Internal and External Redirects

- HTTP Status

- Internal links to the redirecting URL

- Actual link URL

- Link anchor text

- Page the URL redirects to
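Filling in "page the URL redirects to" means following the `Location` header until a response is not a redirect. To keep the logic runnable without network access, this sketch reads from a hypothetical `responses` dictionary that stands in for real HTTP requests. It resolves relative `Location` values with `urljoin` and stops on a redirect loop or after a hop limit.

```python
from urllib.parse import urljoin


def final_destination(url: str, responses: dict, max_hops: int = 10):
    """Follow a redirect chain through pre-fetched responses.

    `responses` maps URL -> (status, Location header or None).
    Returns (chain, final_status); final_status is None for an unknown URL,
    a redirect loop, or more than `max_hops` redirects.
    """
    chain = [url]
    for _ in range(max_hops):
        status, location = responses.get(url, (None, None))
        if status is None or not (300 <= status < 400) or not location:
            return chain, status
        url = urljoin(url, location)  # Location may be relative
        seen_before = url in chain
        chain.append(url)
        if seen_before:
            return chain, None  # redirect loop
    return chain, None  # too many hops


responses = {
    "http://example.com/old": (301, "https://example.com/old"),
    "https://example.com/old": (302, "/new"),
    "https://example.com/new": (200, None),
    "https://example.com/a": (301, "/b"),
    "https://example.com/b": (301, "/a"),
}
print(final_destination("http://example.com/old", responses))
```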

Internal and External Errors

Give it a Spin

It's free, but more importantly it's quite useful. I find a lot of value in this tool in a variety of ways, but mostly in its ability to home in on your (or your competitor's) internal site and linking structure.

There are certainly a few on-page tools on the market, but I found this tool easy to use and full of helpful information, especially for internal structure and link data.

Try it. :)

Comments

Good to see people are still making free web tools!

Hey friends, i this tool... it is very good for SEO, checking for broken pages, crawling errors and so on....!!!

So how do we go about blocking this bot from our sites? I emailed Jim Boykin and asked and didn't get a reply.

I can't see any reason at all that you would want to allow other people to run this on your site... it allows them to burn up your bandwidth while compiling data to use against you.

I think services like Majestic are fair game to run on other people as you aren't using their own resources but surely something like this you need permission for? My website t&c's are very clear on the fact I am only allowing human visitors to use the site, outside of what I have explicitly allowed via robots.txt. I don't want this service used on my website, unless it is by me.

...services like Majestic (or Ahrefs or OSE) use resources of sites to crawl their data and create a database of links. Each new user might not create a new crawl, but all the link data sources that do crawl the web are of course using bandwidth of hosts to build their databases.

Stilettolimited,

sorry, I never got your email.

First, we do honor robots.txt like any other spider. We limit the crawl to 1000 pages unless you prove you own the site by adding this to your robots.txt:

```
User-agent: NinjaBot
Allow: /
```

We also limit the tool to run only 5 times per day.

so yes, someone could run this tool on your site for 5000 pages per day...but this is the limit.

How would you stop any crawling tool like Screaming Frog or Xenu Link Sleuth?...you can't... If we didn't allow it, there are certainly other places one could go to crawl your site (with no limits at all).

...but, I tell ya what... I'll add in a new feature...if you don't want our tool to crawl your site, add:

```
User-agent: NinjaBot
Disallow: /
```

(give us until the end of the week to implement this feature)

That will stop our tool from spidering your site, but it won't stop a competitor from running something like Xenu Link Sleuth on your site (which will give them the same results).
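The two robots.txt rules Jim describes can be checked with Python's standard `urllib.robotparser`, which shows how a crawler that honors robots.txt would read them. The `ninjabot_allowed` helper is hypothetical. It only demonstrates the parsing and says nothing about how NinjaBot itself is implemented.

```python
from urllib.robotparser import RobotFileParser


def ninjabot_allowed(robots_txt: str, url: str) -> bool:
    """Check whether a crawler identifying as "NinjaBot" may fetch `url`."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch("NinjaBot", url)


block_rules = "User-agent: NinjaBot\nDisallow: /\n"
allow_rules = "User-agent: NinjaBot\nAllow: /\n\nUser-agent: *\nDisallow: /private/\n"

print(ninjabot_allowed(block_rules, "https://example.com/page"))          # False
print(ninjabot_allowed(allow_rules, "https://example.com/private/page"))  # True
```

In the second file the NinjaBot-specific group takes precedence over the `*` group, so the `/private/` rule does not apply to it.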
