Majestic SEO - Interview of Alex Chudnovsky

I have been fond of the depth of Majestic SEO's data and the speed with which you can download millions of backlinks for a website. While not as hyped as similar offerings, Majestic SEO is a cool SEO service worth trying out, and their credit-based system lets you try it out pretty cheaply, since the credits depend on the number of inbound linking domains (unless you are trying to get all the backlinks for a site as big as Wikipedia).

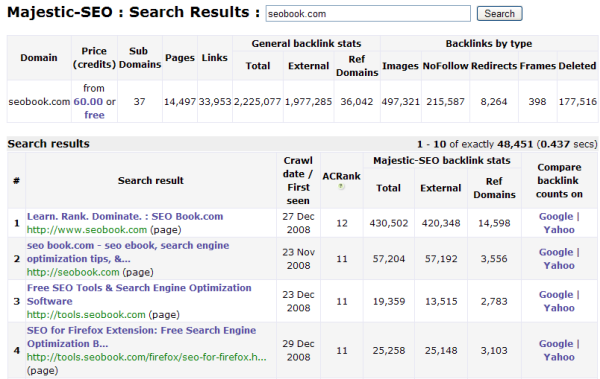

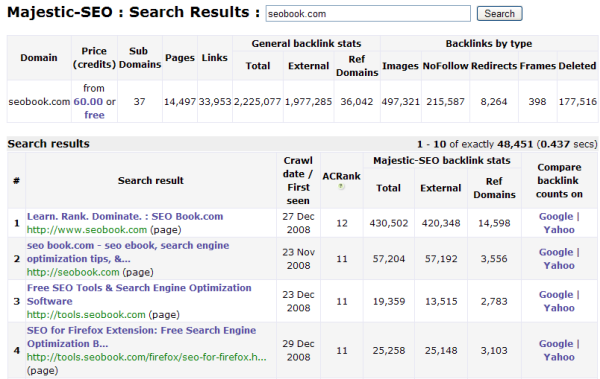

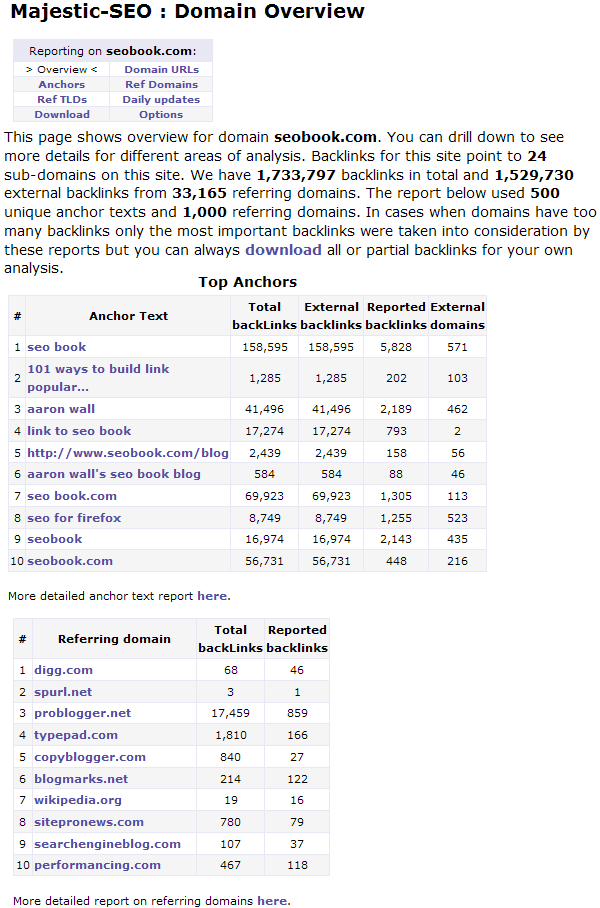

They give you data on your own domain for free, and share a nice amount of data about third party sites for free. For instance, anyone can look up the most well linked to pages on SEO Book free of charge.

What made you decide to create Majestic SEO?

We arrived at it naturally - our main aim with the Majestic-12 Distributed Search Engine project is to create a viable competitor to Google. We use volunteers around the world to help us crawl and index web data. The project was started in late 2004, and about 2 years later it became clear that we needed to be as relevant as Google, and that in order to do that we had to master the power of backlinks and anchor text. As time went on and many hundreds of terabytes of data were crawled, it also became clear that we needed to earn money in order to sustain our project. It took well over a year to reach a level that we felt confident enough with to release it publicly in early 2008.

What were the hardest parts about getting it up and running?

The most difficult part was to avoid the temptation to simplify the problem and focus on a small subset of data that is much smaller than that indexed by Google. It was felt that it would be a mistake as you can't really be sure that you have the same view of the web unless you are close to Google's scale.

Once it was decided to follow the hard path, a lot of technical scalability problems had to be solved as well, and then we had to deal with the financial aspect of storing an insane amount of data using a sane amount of hardware.

You use a distributed crawl, much like Grub did. What were some of the key points to get people to want to contribute to the project? How many servers are you running?

The people that joined our project did so because they felt that Google was quickly becoming a monopoly (this was back in 2004) and a viable alternative was necessary. We have over 100 regulars in our project who run the distributed crawler and analyser on well over 150 distributed clients: all this allows us to crawl at a sustained rate of around 500 Mbit/s.

Since we recently moved closer to the commercial world with Majestic-SEO, it was decided that our project participants will benefit from our success by virtue of share ownership - essentially project members are partners. It needs to be stressed here that our members did not join the project for financial reasons.

How often do you crawl? How often do you crawl pages that have not been updated recently?

We crawl around 200 million URLs every day. At the moment our main focus is to grow our database in order to catch up with Google (see analysis here). However, we have dedicated some of our capacity to recrawls; in fact, in February we should release a new version of automatic recrawls of important pages (those with high ACRank), and this will make it possible to see competitor backlink building activity pretty quickly. Our beta daily updates feature shows new backlinks found in the previous day for registered or purchased domains, which gives a chance to see new backlinks before we do a full index update (around every 2 months).

What is AC Rank? How does it compare to Google's PageRank?

ACRank is a very simple measure of how important a web page is based on number of unique domains linking to it. More information can be found here: http://www.majesticseo.com/glossary.php#ACRank

This measure is not as good as PageRank because it does not yet "flow" between pages. We are going to have a much improved version of ACRank released soon.
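To make the "number of unique domains" idea concrete, here is a minimal sketch that counts distinct referring hosts per target page. It is not Majestic's implementation: the function name `referring_domain_counts` and the sample URLs are hypothetical, the hostname is used as a stand-in for the domain, and the way a raw count maps onto an ACRank score is not described in the interview, so it is left out.

```python
from collections import defaultdict
from urllib.parse import urlparse


def referring_domain_counts(links):
    """Count unique referring hosts for each target URL.

    `links` is an iterable of (source_url, target_url) pairs.
    Assumption: the hostname stands in for the linking "domain".
    """
    hosts_by_target = defaultdict(set)
    for source, target in links:
        host = urlparse(source).hostname
        if host:
            hosts_by_target[target].add(host)
    return {target: len(hosts) for target, hosts in hosts_by_target.items()}


# Hypothetical sample data: two pages on a.example count only once.
links = [
    ("http://a.example/page1", "http://target.example/"),
    ("http://a.example/page2", "http://target.example/"),
    ("http://b.example/", "http://target.example/"),
    ("http://b.example/", "http://target.example/about"),
]
counts = referring_domain_counts(links)
```

Because many links from one site collapse into a single count, a measure like this rewards breadth of linking sites rather than raw link volume, but, as noted above, nothing "flows" from one page to the next.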

Do you have any new features planned?

Can't stop thinking about them ;)

You allow people to export an amazing amount of data, but mostly in spreadsheet form on a per-site basis. Have you thought about creating a web based or desktop interface where people can do advanced analysis?

We offer a web based interface to all this data with ability to quickly export it using CSV format.

For example, what if I wanted to know pages (or sites) that were linking to SearchEngineWatch.com AND SearchEngineLand.com but NOT linking to SeoBook.com AND have a minimum AC rank of 3 AND are not using nofollow? Doing something like that would be quite powerful, and given that you have already done the complex crawl I imagine adding a couple more filters on top should be doable. Another such feature that would be cool would be adding an Optilink-like anchor text analysis feature which allows users to break down the anchor text percentages.

We do have powerful options that enable our customers to slice and dice data in many ways, such as excluding backlinks marked as nofollow or only showing such backlinks. This applies only to single domain analysis for now, but something like the interdomain linking in your example will be possible soon.
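The kind of cross-domain query described in the question can be sketched with plain set operations over exported backlink rows. This is only an illustration under stated assumptions: the `Backlink` record, its field names, and the sample rows are hypothetical and do not reflect Majestic SEO's export format or any feature it ships.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Backlink:
    source: str          # linking site (hypothetical field)
    target_domain: str   # domain being linked to
    ac_rank: int         # ACRank of the linking page
    nofollow: bool       # True if the link carries rel="nofollow"


def filter_sources(backlinks, must_link_to, must_not_link_to, min_ac_rank):
    """Return sources with qualifying links to every required domain
    and no link at all (followed or not) to any excluded domain."""
    qualifying = {}   # source -> targets reached by followed, high-rank links
    all_targets = {}  # source -> every target the source links to
    for link in backlinks:
        all_targets.setdefault(link.source, set()).add(link.target_domain)
        if not link.nofollow and link.ac_rank >= min_ac_rank:
            qualifying.setdefault(link.source, set()).add(link.target_domain)
    required, excluded = set(must_link_to), set(must_not_link_to)
    return sorted(
        source
        for source, targets in qualifying.items()
        if required <= targets and not (all_targets[source] & excluded)
    )


rows = [
    Backlink("blog-a.example", "searchenginewatch.com", 4, False),
    Backlink("blog-a.example", "searchengineland.com", 4, False),
    Backlink("blog-b.example", "searchenginewatch.com", 5, False),
    Backlink("blog-b.example", "searchengineland.com", 5, False),
    Backlink("blog-b.example", "seobook.com", 5, False),
    Backlink("blog-c.example", "searchenginewatch.com", 2, False),
    Backlink("blog-c.example", "searchengineland.com", 2, False),
    Backlink("blog-d.example", "searchenginewatch.com", 6, True),
    Backlink("blog-d.example", "searchengineland.com", 6, False),
]
result = filter_sources(
    rows,
    must_link_to=["searchenginewatch.com", "searchengineland.com"],
    must_not_link_to=["seobook.com"],
    min_ac_rank=3,
)
```

In this sample only `blog-a.example` survives: `blog-b.example` also links to the excluded domain, `blog-c.example` falls below the minimum rank, and one of the links from `blog-d.example` is nofollow.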

Have customers shared with you creative ways to use Majestic SEO that you have not yet thought of?

We get good customer feedback and often implement customer requested features to make data analysis easier. As for new creative ways our customers prefer to keep them to themselves, but once you look at the data you might see one or two good strategies on how to use it. ;)

How big of an issue is duplicate content while crawling the web? How do you detect and filter duplicate content?

It is a very big issue for search engines (and thus us) as many pages are duplicate or near duplicate of each other, with very small changes that make it hard to detect them. We do not currently detect such pages (we crawl pretty much everything) though we have a good idea how to do it and will implement it soon. Our reports tend to show data ordered by importance of the backlink, so often it is not an issue though it depends on backlinking profile of a particular site.
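For readers unfamiliar with near-duplicate detection, one common textbook approach is to compare pages by their overlapping word sequences ("shingles") using Jaccard similarity. The sketch below illustrates that general technique only; the interview does not say which method Majestic has in mind, and the function names, the shingle size, and the 0.7 threshold are arbitrary assumptions.

```python
def shingles(text, k=3):
    """Return the set of k-word shingles in `text` (lowercased)."""
    words = text.lower().split()
    if len(words) < k:
        return {tuple(words)} if words else set()
    return {tuple(words[i:i + k]) for i in range(len(words) - k + 1)}


def jaccard(a, b):
    """Jaccard similarity of two sets: |A & B| / |A | B|."""
    if not a and not b:
        return 1.0
    return len(a & b) / len(a | b)


def is_near_duplicate(text_a, text_b, threshold=0.7, k=3):
    """Treat two texts as near duplicates if shingle overlap is high.
    The threshold is an illustrative assumption, not a recommended value."""
    return jaccard(shingles(text_a, k), shingles(text_b, k)) >= threshold


page_a = "the quick brown fox jumps over the lazy dog"
page_b = "the quick brown fox jumps over the lazy cat"
similarity = jaccard(shingles(page_a), shingles(page_b))  # 6 shared of 8 total
```

Comparing every pair of pages this way does not scale to a web-sized crawl; large systems typically replace the exact set comparison with compact fingerprints, which is beyond this sketch.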

A lot of links are rented/bought, and many of these sources get filtered by Google. Does your link graph take into account any such editorial actions? If not, what advice would you give Majestic SEO users when describing desirable links vs undesirable ones?

At the moment our tools report factual information on where backlinks were found; we do not currently flag links as paid or not. This is something that humans are good at and computer algorithms aren't - that's why Google hates such paid links so much. We do have some ideas, however, on how to detect topically relevant backlinks (paid ones would usually come from irrelevant sites) - it's coming soon and might actually turn out to be a groundbreaking feature!

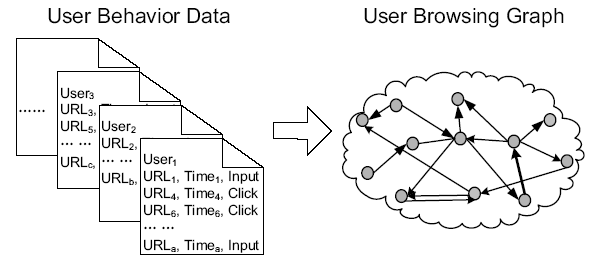

Microsoft has done research on BrowseRank, which is a system of using usage data to augment or replace link data. Do you feel such a system is viable? If search engines incorporate usage data will links still be the backbone of relevancy algorithms?

BrowseRank is a very interesting concept, though we are yet to see a practical implementation on a large scale web search engine. I don't think such a system makes link data obsolete at all. In fact it is based on link data just like PageRank, only it makes it possible to detect the most relevant outgoing links on a page; essentially such votes should be given more weight in PageRank-like analysis. For example, imagine that this very interview page is analysed using BrowseRank and it finds that the following cleverly crafted link to Majestic-SEO homepage is clicked a lot; then such a link could be judged as the real vote that this page gives out!

This approach would help identify the more important parts of on-page content as well, so that keyword matches within such a content block could get a higher score in ranking algorithms. So I actually think there is a lot of mileage in the BrowseRank concept, but it would be a mistake to think that it will completely replace the need for link data analysis. I am pretty sure Google uses something like this already - Google Toolbar stats would give them all they need to know.

The great irony in my view is that Microsoft lacks good web graph data to apply their browsing concept to. This is probably why they are so desperate to buy Yahoo's search operations, who are much better when it comes to backlink analysis, though Google are the real masters. Majestic-SEO is trying to slot itself just behind Google, and who knows what happens after that ;)

I look up a competing site and see that a competitor has 150,000 more links than I do and feel that it would take years to catch up. Would you suggest I look into other keywords & markets, or what tricks and ideas do you have for how to compete using fewer links, or what strategies do you find effective for building bulk links?

First of all: don't panic! :)

Secondly, use the SEO Toolbar, which will query our Majestic-SEO database to show the number of referring domains - it may well be very few.

Thirdly, consider investing in the detailed stats we have on this domain: this will tell you the anchor text used and the actual backlinks, which you can analyse by their importance (we measure it using ACRank). Once you see real data a lot of things can become clear: for example, you can see whether your competitor's backlinks point just to the homepage or are spread around the site. Seeing actual anchor text is really an eye opener - it can show which keywords the site was optimised for, and this will allow you to make a good decision on whether you can catch up or not. Chances are you may find that your competitor is weak for some keywords; this is where a keyword research tool like Wordtracker is invaluable.

And finally consider that a few good relevant backlinks are likely to be worth more than many irrelevant ones: it is those backlinks that you want to get and knowing where your competitor got them should help you create a well targeted strategy.
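The anchor text breakdown mentioned above is easy to approximate once backlink data is in hand. The following is a minimal sketch, assuming the anchor texts have already been pulled out of an export into a list of strings; the function name and the sample anchors are hypothetical.

```python
from collections import Counter


def anchor_text_shares(anchors):
    """Return (anchor, count, percent) tuples, most common first.

    Anchors are normalised by trimming whitespace and lowercasing,
    and empty anchors are ignored.
    """
    normalized = [a.strip().lower() for a in anchors if a.strip()]
    total = len(normalized)
    counts = Counter(normalized)
    return [
        (text, count, 100.0 * count / total)
        for text, count in counts.most_common()
    ]


# Hypothetical anchors taken from a backlink report.
anchors = ["SEO Book", "seo book", "SEO tools", "click here"]
shares = anchor_text_shares(anchors)
```

A breakdown like this makes it quick to see which phrases dominate a competitor's link profile and which keywords they have barely targeted.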

You allow people to download a free link report for their site. How does this report compare to other link sources (Yahoo! Site Explorer, Alexa links, Google link:, and links in Google Webmaster Central)?

We give free access to verified websites. This is a great way to try our system, and you might see backlinks that you won't find elsewhere, because our index is so large and we show you all the backlinks that we've got (rather than the top 1000). This will include backlinks from "bad neighbourhoods" that you may not be shown in other sources (these are not yet automatically marked by our system, but visual human analysis wins the day here).

We believe that our free access reports are the best in class. Since it's free, why not find out for yourself?

For analyzing third party sites you have a credit based system. How much does it cost to analyze an average site?

The price depends on how large (in terms of external referring domains) a particular website is. We have some sites that have hundreds of millions of backlinks, so the average would be very different depending on what you are really after. The best option is just to run searches on our website for the domains that you are interested in; this will give you very interesting free information as well as the price for full data access.

For a domain like Wikipedia I might only want the links to a specific page. Are you thinking about offering page level reports?

Yep, I am thinking of it - I have actually had requests like this, funnily enough with Wikipedia being the main object of interest.

What is the maximum number of links we can download in 1 report?

Our web reporting system tries to focus on the most valuable backlinks to avoid information overload. However, we allow a complete dataset download that will include all backlinks - some of our clients have retrieved data on domains with well over 100 million backlinks! Using our powerful analysis options you can focus on backlinks for particular URLs coming from particular pages and retrieve all qualifying data.

------

Thanks Alex. For more information on Majestic SEO please visit their site and look up your domain.

Comments

Very nice pick, Aaron, and thanks to Alex too.

I feel MajesticSEO doesn't get quite the exposure in the SEO community that it deserves. I have one strong suggestion for them. While I do realize they have got huge costs to cover, their current offering of purchasing credits seems more targeted towards individual webmasters. I mean if I am competing with a Wikipedia page, I would perhaps buy credits to see its backlink profile.

But some professional SEO would usually want to conduct many many backlink count reports for multiple sites, some for his existing clients, some for his potential clients, and some for just research and analysis of how much weight is being given by Google currently.

So if MajesticSEO can perhaps introduce a monthly subscription that would allow members to conduct unlimited (or a great number of) detailed backlink analysis, that may bring them more money to cover their costs. I am not much technical but if they already have to crawl and make a database, and if their cost of generating a single backlink report by pulling that data is trivial, then this may make sense for them.

I agree...which is a big part of why I wanted to make sure I gave them some good coverage. I think in part it comes down to them not being very aggressive at marketing, and part of it comes down to the site design not being as remarkable as the underlying data is.

I agree - I tried to plug Majestic SEO as much as possible during PubCon as it's kind of overshadowed by Linkscape. One of the coolest experiences I've had with Majestic was when it gave me data on a competitor who was cloaking links by generating duplicate content sites that had the robots "noindex,follow" tag. Each site linked to their core domain but the links only appeared if the UA was G. Linkscape never picked up these links nor did YSE as the UA must be Googlebot for them to be displayed. M12 picked em up tho!
