I'd like to take a look at an area often overlooked in SEO.

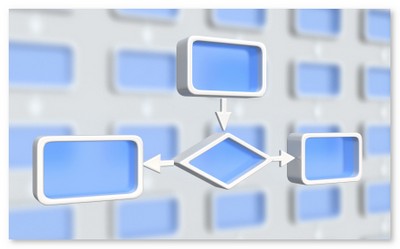

Site architecture.

Site architecture is important for SEO for three main reasons:

- To focus on the most important keyword terms

- To control the flow of link equity around the site

- To ensure spiders can crawl the site

Simple, eh? Yet many webmasters get it wrong.

Let's take a look at how to do it properly.

Evaluate The Competition

Once you've decided on your message, and your plan, the next step is to lay out your site structure.

Start by evaluating your competition. Grab your list of keyword terms, and search for the most popular sites listed under those terms. Take a look at their navigation. What topic areas do they use for their main navigation scheme? Do they use secondary navigation? Are there similarities in topic areas across competitor sites?

Open a spreadsheet, and list their categories, and title tags, and look for keyword patterns. You'll soon see similarities. By evaluating the navigation used by your competition, you'll get a good feel for the tried-n-true "money" topics.
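If you'd rather script the pattern-spotting than eyeball a spreadsheet, here is a minimal sketch. The competitor domains and navigation labels are made up for illustration; you would paste in the labels you collected by hand.

```python
from collections import Counter

# Hypothetical navigation labels copied by hand from three competitor sites.
competitor_nav = {
    "competitor-a.example": ["Dark Chocolate", "Milk Chocolate", "Gift Baskets", "Truffles"],
    "competitor-b.example": ["Chocolate Gifts", "Truffles", "Dark Chocolate", "Sugar Free"],
    "competitor-c.example": ["Truffles", "Gift Baskets", "Dark Chocolate", "Recipes"],
}


def category_counts(nav_by_site):
    """Count how many sites use each navigation label (case-insensitive)."""
    counts = Counter()
    for labels in nav_by_site.values():
        # A set ensures one site cannot count the same label twice.
        counts.update({label.lower() for label in labels})
    return counts


counts = category_counts(competitor_nav)
for label, n in counts.most_common():
    print(f"{n} of {len(competitor_nav)} sites: {label}")
```

Labels that show up on most of the sites are candidates for your own core navigation.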

You can then run these sites through metrics sites like Compete.com.

Use the most common, heavily trafficked areas as your core navigation sections.

The Home Page Advantage

Those who know how PageRank functions can skip this section.

Your home page will almost certainly have the highest level of authority.

While there are a lot of debates about the merits of PageRank when it comes to ranking, it is fair to say that PageRank is a rough indicator of a page's level of authority. Pages with more authority are spidered more frequently and enjoy higher ranking than pages with lower authority. The home page is often the page with the most links pointing to it, so the home page typically has the highest level of authority. Authority passes from one page to the next.

For each link off a page, the authority level will be split.

For example - and I'm simplifying* greatly for the purposes of illustration - if you have a home page with ten units of link juice, two links to two sub-pages would see each sub-page receive five units of link juice. If each sub-page has two links, each sub-sub-page would receive two and a half units of link juice, and so on.

The important point to understand is that the further your pages are away from the home page, generally the less link juice those pages will have, unless they are linked from external pages. This is why you need to think carefully about site structure.
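To see the even-split illustration in numbers, here is a rough sketch. It models only the simplified split described above, not the real PageRank calculation, and it assumes the site is a simple tree with no links back up. The page names are hypothetical.

```python
def split_authority(links, start, units):
    """Pass authority down a tree of pages, dividing it evenly at each link.

    This is the simplified even-split model from the illustration,
    not the actual PageRank calculation. It assumes no page links back
    to a page above it.
    """
    authority = {start: units}
    queue = [start]
    while queue:
        page = queue.pop(0)
        children = links.get(page, [])
        for child in children:
            authority[child] = authority[page] / len(children)
            queue.append(child)
    return authority


# Hypothetical site: a home page, two sub-pages, two pages under each.
site = {
    "home": ["a", "b"],
    "a": ["a1", "a2"],
    "b": ["b1", "b2"],
}

authority = split_authority(site, "home", 10)
for page, units in authority.items():
    print(page, units)
```

With ten units at the home page, each sub-page gets five and each page below that gets two and a half. Every extra level of depth halves the share again in this model.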

For SEO purposes, try to keep your money areas close to the home page.

*Note: Those who know how PageRank functions will realise my explanation above is not technically correct. The way PageRank splits is more sophisticated than that given in my illustration. For those who want a more technical breakdown of the PageRank calculations, check out Phil's post at WebWorkshop.

How Deep Do I Go?

Keeping your site structure shallow is a good rule of thumb. So long as your main page is linked well, all your internal pages will have sufficient authority to be crawled regularly. You also achieve clarity and focus.

A shallow site structure is not just about facilitating crawling. After all, you could just create a Google Site Map and achieve the same goal. Site structure is also about selectively passing authority to your money pages, and not wasting it on pages less deserving. This is straightforward with a small site, but the problem gets more challenging as your site grows.
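One way to check how shallow a site really is: measure how many clicks each page sits from the home page. Here is a minimal sketch using a breadth-first search over a link map. The page names and the link map itself are hypothetical; in practice you would build the map from a crawl of your own site.

```python
from collections import deque


def click_depth(links, home):
    """Return the minimum number of clicks from home to each reachable page."""
    depth = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depth:  # first visit is the shortest path
                depth[target] = depth[page] + 1
                queue.append(target)
    return depth


# Hypothetical internal link map: page -> pages it links to.
site = {
    "home": ["widgets", "gadgets", "about"],
    "widgets": ["blue-widgets", "red-widgets"],
    "blue-widgets": ["blue-widget-archive"],
    "gadgets": ["home"],
}

depths = click_depth(site, "home")
for page, clicks in sorted(depths.items(), key=lambda item: item[1]):
    print(clicks, page)
```

Money pages that turn up three or more clicks deep are candidates for a link from higher up.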

One way to manage scale is by grouping your keyword terms into primary and secondary navigation.

Main & Secondary Navigation

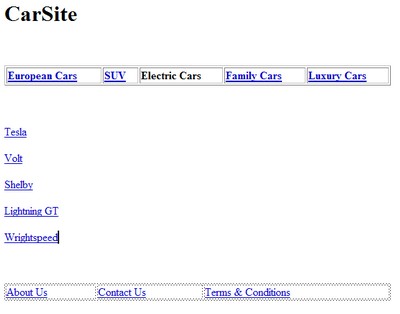

Main navigation is where you place your core topics, i.e. the most common, highly trafficked topics you found when you performed your competitive analysis. Typically, people use tabs across the top, or a list down the left-hand side of the screen. Main navigation appears on all other pages.

Secondary navigation consists of all other links, such as latest post, related articles, etc. Secondary navigation does not appear on every page, but is related to the core page upon which it appears.

One way to split navigation is to organize your core areas into the main navigation tabs across the top, and provide secondary navigation down the side.

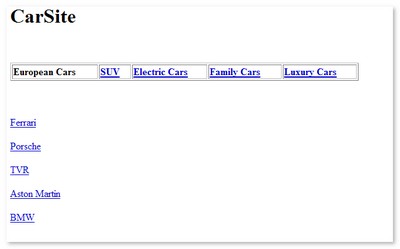

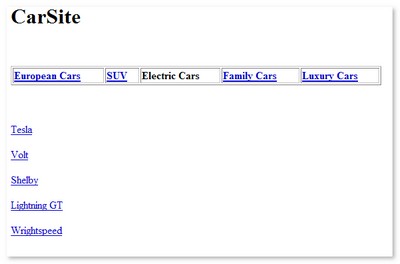

For example, let's say your main navigation layout looked like this:

Each time I click a main navigation term, the secondary navigation down the left-hand side changes. The secondary navigation items are keywords related to the core area.

For those of you who are members, Aaron has an in-depth video demonstration on Site Architecture And Internal Linking, as well as instruction on how to integrate and manage keywords.

Make Navigation Usable

Various studies indicate that humans are easily confused when presented with more than seven choices. Keep this in mind when creating your core navigation areas.

If you offer more than seven choices, find ways to break things down further. For example, by year, manufacturer, model, classification, etc.

You can also break these areas down with an "eye break" between each. Here's a good example of this technique on Chocolate.com:

Search spiders, on the other hand, aren't confused by multiple choices. Secondary navigation, which includes links within the body copy, provides plenty of opportunity to place keywords in links. Good for usability, too.

As your site grows, new content is linked to by secondary navigation. The key is to continually monitor what content produces the most money/visitor response. Elevate successful topics higher up your navigation tree, and relegate loss-making topics.

Use your analytics package to do this. In most packages, you can get breakdowns of the most popular, and least popular, pages. Organise this list by "most popular". Your most popular pages should be at the top of your navigation tree. You also need to consider your business objectives. Your money pages might not be the same pages as your most popular pages, so it's also a good idea to set up funnel tracking to ensure the pages you're elevating also align with your business goals.
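As a rough sketch of that comparison, suppose you've exported visits and revenue per page from your analytics package. The figures, URLs, and field names below are invented for illustration.

```python
# Hypothetical export from an analytics package.
pages = [
    {"url": "/truffles/", "visits": 1200, "revenue": 300.0},
    {"url": "/recipes/", "visits": 5000, "revenue": 50.0},
    {"url": "/gift-baskets/", "visits": 800, "revenue": 960.0},
    {"url": "/history-of-cocoa/", "visits": 150, "revenue": 0.0},
]


def revenue_per_visit(page):
    """Revenue earned per visit, guarding against pages with no visits."""
    return page["revenue"] / page["visits"] if page["visits"] else 0.0


most_popular = sorted(pages, key=lambda p: p["visits"], reverse=True)
best_earning = sorted(pages, key=revenue_per_visit, reverse=True)

print("Most popular:", [p["url"] for p in most_popular])
print("Best earning:", [p["url"] for p in best_earning])
```

In this made-up data the most visited page earns almost nothing per visit, while a less visited page is the real money page. That is the kind of mismatch to look for before you reshuffle your navigation.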

If a page is ranking well for a term, and that page is getting good results, you might want to consider adding a second page targeting the same term. Google may then group the pages together, effectively giving you listings #1 and #2.

Subject Themeing

A variant on Main & Secondary Navigation is subject themeing.

Themeing is a controversial topic in SEO. The assumption is that the search engines will try and determine the general theme of your site, therefore you should keep all your pages based around a central theme.

The theory goes that you can find out what words Google places in the same "theme" by using the tilde ~ command in Google. For example, if you search on ~ cars, you'll see "automobile", "auto", "bmw" and other related terms highlighted in the SERP results. You use these terms as headings for pages in your site.

However, many people feel that themes do not work, because search engines return individual pages, not sites. Therefore, it follows that the topics of other pages on the site don't directly contribute to the ranking of an individual page.

Without getting into a debate about the existence or non-existence of theme evaluation in the algorithm, themeing is a great way to conceptually organize your site and research keywords.

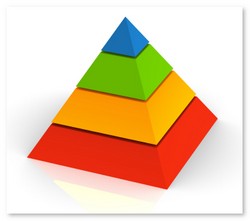

Establish a central theme, then create a list of sub-topics made up of related (~) terms. Make sub-topics of sub-topics. Eventually, your site resembles a pyramid structure. Each sub-topic is organized into a directory folder, which naturally "loads" keywords into URL strings, breadcrumb trails, etc. The entire site is made up of keywords related to the main theme.
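Here is a minimal sketch of how a theme pyramid "loads" keywords into URLs and breadcrumb trails. The theme tree, the `slug` helper, and the `walk_theme` function are all hypothetical names made up for this example.

```python
def slug(text):
    """Turn a topic name into a folder name, e.g. 'Used Cars' -> 'used-cars'."""
    return text.lower().replace(" ", "-")


def walk_theme(tree, path=()):
    """Yield (url, breadcrumb) for every topic in a nested theme dict."""
    for topic, subtopics in tree.items():
        trail = path + (topic,)
        url = "/" + "/".join(slug(t) for t in trail) + "/"
        yield url, " > ".join(trail)
        yield from walk_theme(subtopics, trail)


# Hypothetical pyramid: a central theme, sub-topics, and sub-sub-topics.
theme = {
    "Cars": {
        "Used Cars": {"BMW": {}},
        "Auto Parts": {},
    }
}

structure = list(walk_theme(theme))
for url, breadcrumb in structure:
    print(url, "|", breadcrumb)
```

Each level of the pyramid adds a related keyword to both the URL and the breadcrumb trail without any extra effort.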

Bruce Clay provides a good overview of Subject Themeing.

Bleeding PageRank?

You might also wish to balance the number of outgoing links with the number of internal links. Some people are concerned about this aspect, i.e. so-called "bleeding PageRank". A page doesn't lose PageRank because you link out, but linking does affect the level of PageRank available to pass to other pages. This is also known as link equity.

It is good to be aware of this, but not let it dictate your course of action too much. Remember, outbound linking is a potential advertisement for your site, in the form of referral data in someone else's logs. A good rule of thumb is to balance the number of internal links with the number of external links. Personally, I ignore this aspect of SEO site construction and instead focus on providing visitor value.

Link Equity & No Follow

Another way to control the link equity that flows around your site is to use the nofollow attribute (rel="nofollow") on links. For example, check out the navigational links at the bottom of the page:

As these target pages aren't important in terms of ranking, you could no-follow the links to these pages to ensure your main links have more link equity to pass to other pages.
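If your templates are generated by code, a small helper can add the attribute for you. This is only a sketch: `render_link` and the footer pages are hypothetical, and the one real piece is the `rel="nofollow"` attribute on the anchor.

```python
from html import escape


def render_link(href, text, nofollow=False):
    """Render an HTML anchor, optionally marked rel="nofollow"."""
    rel = ' rel="nofollow"' if nofollow else ""
    return f'<a href="{escape(href, quote=True)}"{rel}>{escape(text)}</a>'


# Hypothetical footer links that don't need to rank.
footer = [("/privacy/", "Privacy Policy"), ("/terms/", "Terms & Conditions")]
footer_html = [render_link(href, text, nofollow=True) for href, text in footer]

# A money page linked normally.
main_html = render_link("/blue-widgets/", "Blue Widgets")

print("\n".join(footer_html))
print(main_html)
```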

Re-Focus On The Most Important Content

This might sound like sacrilege, but it can often pay not to let search engines display all the pages in your site.

Let's say you have twenty pages, all titled "Acme". Links containing the keyword term "Acme" point to various pages. What does the algorithm do when faced with these pages? It doesn't display all of them for the keyword term "Acme". It chooses the one page it considers most worthy, and displays that.

Rather than leave it all to the algorithm, it often pays to pick the single most relevant page you want to rank, and 301 all the other similarly-themed pages to point to it. Here are some instructions on how to 301 pages.

By doing this, you focus link equity on the most important page, rather than splitting it across multiple pages.
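As a sketch of what that looks like in practice, assuming your site runs on Apache with mod_alias enabled, you could generate the `Redirect 301` lines for your configuration from a list of duplicate pages. The paths, the host name, and the `redirect_rules` helper are all made up for illustration.

```python
# Hypothetical canonical page and the similar pages that should point to it.
CANONICAL = "/acme/"
DUPLICATES = ["/acme-products/", "/about-acme/", "/acme-home/"]


def redirect_rules(duplicates, canonical, host="https://www.example.com"):
    """Build one Apache mod_alias 'Redirect 301' line per duplicate page.

    Assumes Apache with mod_alias; other servers use different syntax.
    """
    return [f"Redirect 301 {path} {host}{canonical}" for path in duplicates]


rules = redirect_rules(DUPLICATES, CANONICAL)
print("\n".join(rules))
```

Check the generated lines before you deploy them. A page that redirects to itself, or two pages that redirect to each other, will loop.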

Create Cross Referenced Navigational Structures

Aaron has a good tip regarding cross-referencing within the secondary page body text. I'll repeat it here for good measure:

This idea may sound a bit complex until you visualize it as a keyword chart with an x and y axis.

Imagine that a, b, c, ... z are all good keywords.

Imagine that 1, 2, 3, ... 10 are all good keywords.

If you have a page on each subject, consider placing the navigation for a through z in the sidebar while using links and brief descriptions for 1 through 10 as the content of the page. If people search for d7, or b9, that cross-referencing page will be relevant for it, and if it is done well it does not look too spammy. Since these types of pages can spread link equity across so many pages of different categories, make sure they are linked to well high up in the site's structure. These pages work especially well for categorized content cross-referenced by locations.
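Here is one way to picture the grid, as a rough sketch. The categories play the part of a through z and the locations play the part of 1 through 10. All the names, URLs, and the `cross_reference_page` helper are hypothetical.

```python
def slug(text):
    """Turn a name into a URL segment, e.g. 'New Plymouth' -> 'new-plymouth'."""
    return text.lower().replace(" ", "-")


def cross_reference_page(category, categories, locations):
    """Describe one category page: all categories in the sidebar,
    and one body link per location for this category."""
    return {
        "url": f"/{slug(category)}/",
        "sidebar": [f"/{slug(c)}/" for c in categories],
        "body": [
            {
                "anchor": f"{category} in {location}",
                "href": f"/{slug(category)}/{slug(location)}/",
            }
            for location in locations
        ],
    }


# Hypothetical keyword axes: categories (a..z) and locations (1..10).
categories = ["Plumbers", "Electricians", "Roofers"]
locations = ["Auckland", "Wellington", "Christchurch"]

pages = {c: cross_reference_page(c, categories, locations) for c in categories}
for link in pages["Roofers"]["body"]:
    print(link["anchor"], "->", link["href"])
```

Each category page carries every category in its sidebar and every category-plus-location pair in its body, which is how one page ends up relevant for combined searches.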

Related Reading: