Alexa Site Audit Review

Alexa, a free and well-known website information tool, recently released a paid service.

For $199 per site Alexa will audit your site (up to 10,000 pages) and return a variety of different on-page reports relating to your SEO efforts.

It has a few off-page data points but it focuses mostly on your on-page optimization.

You can access Alexa's Site Audit Report here:

http://www.alexa.com/siteaudit

Report Sections

Alexa's Site Audit Report breaks the information down into 6 sections (some of which have sub-sections as well):

- Overview

- Crawl Coverage

- Reputation

- Page Optimization

- Keywords

- Stats

The sections break down as follows:

So we ran Seobook.com through the tool to test it out :)

Generally these reports take about a day or two. Ours hit some type of processing error, so it took about a week.

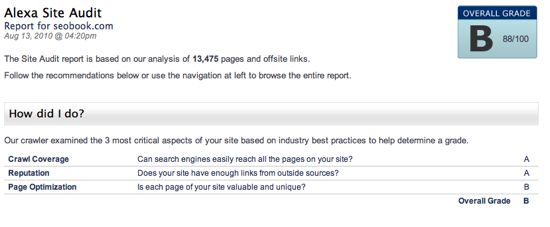

Overview

The first section you'll see is the number of pages crawled, followed by 3 "critical" aspects of the site (Crawl Coverage, Reputation, and Page Optimization). All three have their own report sections as well. Looks like we got an 88. Excuse me, but shouldn't that be a B+? :)

So it looks like we did just fine on Crawl Coverage and Reputation, but have some work to do with Page Optimization.

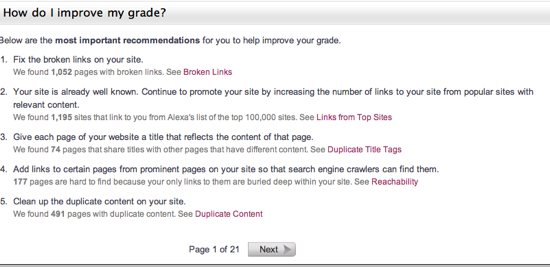

The next section on the overview page is 5 recommendations on how to improve your site, with links to those specific report sections as well. At the bottom you can scroll to the next page or use the side navigation. We'll investigate these report sections individually, but I think the overview page is a helpful high-level summary of what's going on with the site.

Crawl Coverage

This measures the "crawl-ability" of the site, internal links, your robots.txt file, as well as any redirects or server errors.

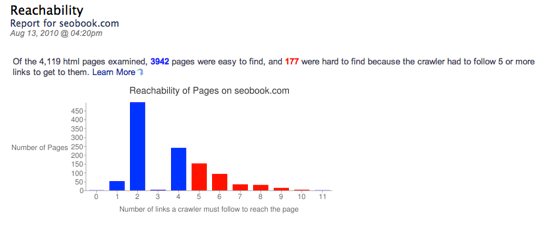

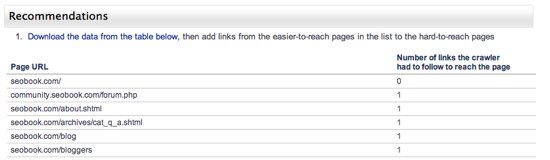

Reachability

The Reachability report shows you a breakdown of which HTML pages were easy to reach versus which ones were not so easy to reach. Essentially, for our site, the breakdown is:

- Easy to find - 4 or fewer links a crawler must follow to get to a page

- Hard to find - more than 4 links a crawler must follow to get to a page

The calculation is based on the following method used by Alexa in determining the path length specific to your site:

Our calculation of the optimal path length is based on the total number of pages on your site and a consideration of the number of clicks required to reach each page. Because optimally available sites tend to have a fan-out factor of at least ten unique links per page, our calculation is based on that model. When your site falls short of that minimum fan-out factor, crawlers will be less likely to index all of the pages on your site.
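The quoted description stops short of an exact formula, but the fan-out idea is easy to sketch. The Python below is a rough illustration, not Alexa's method: it assumes every page links to `fan_out` new pages (ten by default, per the quote) and finds the smallest number of clicks at which a site of a given size could fit. The function name and the simple tree model are our own assumptions.

```python
def min_click_depth(total_pages: int, fan_out: int = 10) -> int:
    """Smallest depth at which an idealized tree can hold total_pages.

    Assumption: depth 0 is the home page, and every page links to
    fan_out pages that have not been linked from anywhere else.
    """
    if total_pages < 1 or fan_out < 2:
        raise ValueError("need at least one page and a fan-out of two or more")
    depth = 0
    level_size = 1   # pages sitting exactly at the current depth
    reachable = 1    # pages reachable within `depth` clicks
    while reachable < total_pages:
        depth += 1
        level_size *= fan_out
        reachable += level_size
    return depth


if __name__ == "__main__":
    for pages in (11, 500, 10_000):
        print(pages, "pages ->", min_click_depth(pages), "clicks")
```

Under these assumptions a 10,000-page site (the audit's crawl cap) fits within 4 clicks of the home page, which at least lines up with the 4-link threshold the report used for our site.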

A neat feature in this report is the ability to download your URLs, plus the number of links the crawler had to follow to find each page, in CSV format.

This is a useful feature for mid-large scale sites. You can get a decent handle on some internal linking issues you may have which could be affecting how relevant a search engine feels a particular page might be. Also, this report can spot some weaknesses in your site's linking architecture from a usability standpoint.
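If you would rather compute click depth yourself, a breadth-first walk over your internal link graph should give the same kind of number. A minimal sketch, assuming you already have a mapping of each page to the pages it links to (the `links` dict, the function names, and the sample URLs are all hypothetical):

```python
from collections import deque


def click_depths(links, start):
    """Breadth-first search: fewest links to follow from start to each page."""
    depths = {start: 0}
    queue = deque([start])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:  # first visit is the shortest path
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


def hard_to_find(depths, max_links=4):
    """Pages needing more than max_links clicks (the report's threshold for our site)."""
    return sorted(url for url, depth in depths.items() if depth > max_links)


if __name__ == "__main__":
    # Made-up site: a chain of archive pages buried behind "next" links.
    sample_links = {
        "/": ["/blog", "/tools"],
        "/blog": ["/blog/page2"],
        "/blog/page2": ["/blog/page3"],
        "/blog/page3": ["/blog/page4"],
        "/blog/page4": ["/blog/old-post"],
    }
    print(hard_to_find(click_depths(sample_links, "/")))
```

Pages that never appear in the result of `click_depths` are not reachable from the start page at all, which is worth checking separately.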

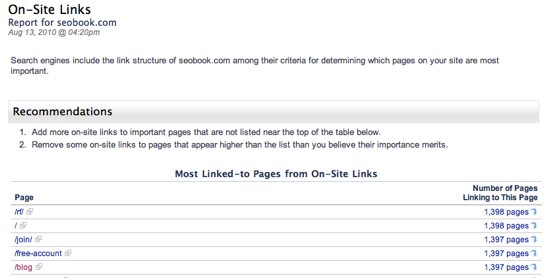

On-Site Links

While getting external links from unique domains is typically a stronger component to ranking a site it is important to have a strong internal linking plan as well. Internal links are important in a few ways:

- The only links where you can 100% control the anchor text (outside of your own sites of course, or sites owned by your friends)

- They can help you flow link equity to pages on your site that need an extra bit of juice to rank

- Users will appreciate a logical, clear internal navigation structure and you can use internal linking to get them to where you want them to go

Alexa will show you your top linked to (from internal links) pages:

You can also click the link to the right to expand and see the top ten pages that link to that page:

So if you are having problems trying to rank some sub-pages for core keywords or long-tail keywords, you can check the internal link counts (and see the top 10 linked from pages) and see if something is amiss with respect to your internal linking structure for a particular page.
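The same inbound-link tally can be approximated from any crawl that records which page links to which. This sketch assumes a list of (source, target) pairs for internal links only. How Alexa picks its "top ten" linking pages is not stated, so the version here simply lists up to ten sources alphabetically; the function name and data shape are hypothetical.

```python
from collections import defaultdict


def inbound_link_report(edges, top_n=10):
    """Rank pages by how many distinct internal pages link to them.

    edges: iterable of (source_url, target_url) pairs.
    Returns a list of (target, inbound_count, sample_of_sources).
    """
    sources = defaultdict(set)
    for source, target in edges:
        if source != target:          # ignore self-links
            sources[target].add(source)
    ranked = sorted(sources.items(), key=lambda item: (-len(item[1]), item[0]))
    return [(target, len(srcs), sorted(srcs)[:top_n]) for target, srcs in ranked]


if __name__ == "__main__":
    crawl_edges = [
        ("/", "/tools"), ("/blog", "/tools"), ("/about", "/tools"),
        ("/", "/blog"), ("/tools", "/blog"),
        ("/blog", "/blog"),
    ]
    for target, count, linked_from in inbound_link_report(crawl_edges):
        print(target, count, linked_from)
```

A page you want to rank that shows up near the bottom of this list is a candidate for a few more internal links.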

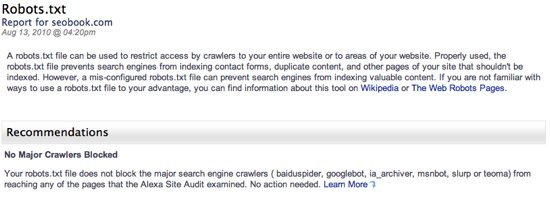

Robots.txt

Here you'll see if you've restricted access to these search engine crawlers:

- ia_archiver (Alexa)

- googlebot (Google)

- teoma (Ask)

- msnbot (Bing)

- slurp (Yahoo)

- baiduspider (Baidu)

If you block out registration areas or other areas that are normally restricted, then the report will say that you are not blocking major crawlers but will show you the URLs you are blocking under that part of the report.
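You can run a similar check locally with Python's standard `urllib.robotparser`. The sketch below parses a robots.txt body you supply (the `SAMPLE` rules and example.com URLs are made up for illustration) and lists which of the six crawlers above would be blocked from a given URL. The standard-library parser's matching may differ in small ways from how each engine actually reads the file.

```python
from urllib.robotparser import RobotFileParser

# User-agent tokens from the list above.
CRAWLERS = ["ia_archiver", "googlebot", "teoma", "msnbot", "slurp", "baiduspider"]

# Hypothetical robots.txt: everyone is kept out of /register/,
# and Alexa's crawler is kept out of the whole site.
SAMPLE = """\
User-agent: *
Disallow: /register/

User-agent: ia_archiver
Disallow: /
"""


def blocked_crawlers(robots_txt, url):
    """Return the crawlers from CRAWLERS that may not fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [agent for agent in CRAWLERS if not parser.can_fetch(agent, url)]


if __name__ == "__main__":
    print(blocked_crawlers(SAMPLE, "http://example.com/"))
    print(blocked_crawlers(SAMPLE, "http://example.com/register/"))
```

With the sample rules, only `ia_archiver` is blocked from the home page, while all six are blocked from the registration area.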

There is not much that is groundbreaking with robots.txt checks, but it's another part of a site that you should check when doing an SEO review, so it is a helpful piece of information.

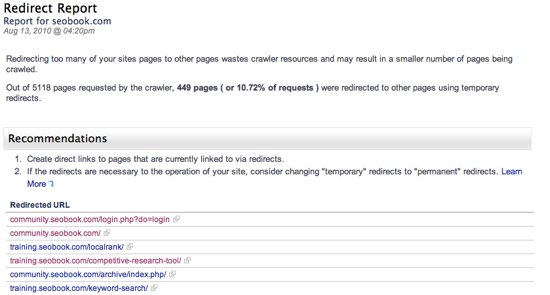

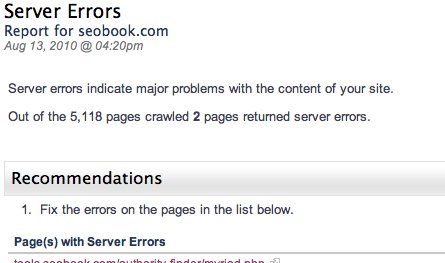

Redirects

We all know what happens when redirects go bad on a mid-large sized site :)

This report will show you what percentage of your crawled pages are being redirected to other pages with temporary redirects.

The thing with temporary redirects, like 302s, is that unlike 301s they do not pass any link juice, so you should pay attention to this part of the report and see if any key pages are being redirected improperly.
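As a rough illustration of what this percentage means, the snippet below takes (URL, status code) pairs from a crawl and reports the share answered with a temporary redirect. The input format and function name are assumptions; treating 302, 303, and 307 as temporary and 301 and 308 as permanent follows the HTTP status code definitions.

```python
TEMPORARY_REDIRECTS = {302, 303, 307}
PERMANENT_REDIRECTS = {301, 308}


def redirect_summary(crawl):
    """Summarize redirects in a crawl given as (url, status_code) pairs."""
    crawl = list(crawl)
    temporary = [url for url, status in crawl if status in TEMPORARY_REDIRECTS]
    permanent = [url for url, status in crawl if status in PERMANENT_REDIRECTS]
    # Share of all crawled URLs that answered with a temporary redirect.
    temporary_pct = 100.0 * len(temporary) / len(crawl) if crawl else 0.0
    return {
        "temporary": temporary,
        "permanent": permanent,
        "temporary_pct": temporary_pct,
    }


if __name__ == "__main__":
    sample_crawl = [
        ("/", 200),
        ("/old-tools", 302),   # probably meant to be a 301
        ("/old-blog", 301),
        ("/about", 200),
    ]
    print(redirect_summary(sample_crawl))
```

Any key page that lands in the `temporary` list is worth a second look to confirm the redirect really is meant to be temporary.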

Server Errors

This section of the report will show you any pages which have server errors.

Making sure your server is handling errors correctly (such as a 404) is certainly worthy of your attention.

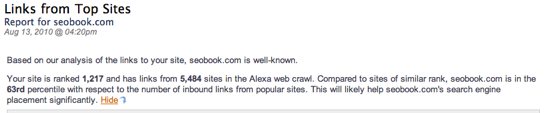

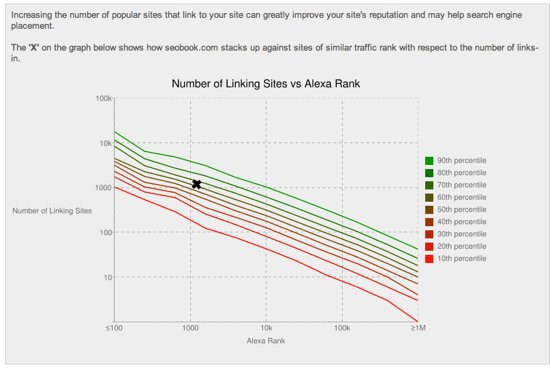

Reputation

This module has only one part: external links from authoritative sites, and where your site ranks against "similar sites" with respect to the number of sites linking to each.

Links from Top Sites

The analysis is given based on the aforementioned formula:

Then you are shown a chart of your site and related sites (according to Alexa), plus the total links pointing at each site, which places each site in a specific percentile based on links and Alexa Rank.

Since Alexa is heavily biased towards webmaster type sites based on their user base, these Alexa Ranks are probably higher than they should be, but it's all relative since all sites are being judged on this measure.

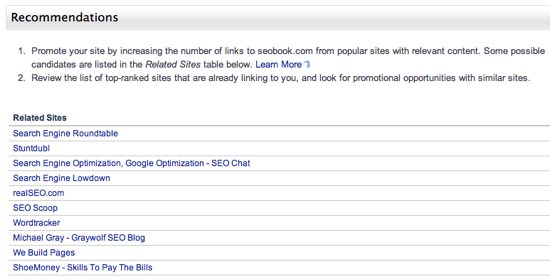

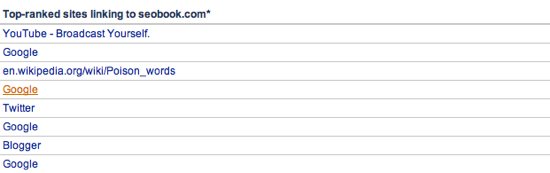

The Related Sites area is located below the chart:

Followed by the Top Ranked sites linking to your site:

I do not find this incredibly useful as a standalone measure of reputation. As mentioned, Alexa Rank can be off and I'd rather know where competing sites (and my site or sites) are ranking in terms of co-occurring keywords, unique domains linking, strength of the overall link profile, and so on as a measure of true relevance.

It is, however, another data point you can use in conjunction with other tools and methods to get a broader idea of how your site and related sites compare.

Page Optimization

Checking the on-page aspects of a mid-large sized site can be pretty time consuming. Our Website Health Check Tool covers some of the major components (like duplicate/missing title tags, duplicate/missing meta descriptions, canonical issues, error handling responses, and multiple index page issues) but this module does some other things too.

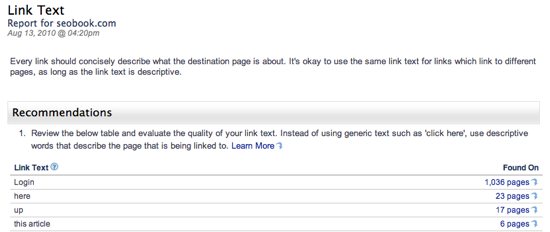

Link Text

The Link Text report shows a break down of your internal anchor text:

Click on the pages link and see the top pages using that anchor text to link to a page (shows the page the text is on as well as the page it links to):

The report is based on the pages it crawled so if you have a very large site or lots and lots of blog posts you might find this report lacking a bit in terms of breadth of coverage on your internal anchor text counts.

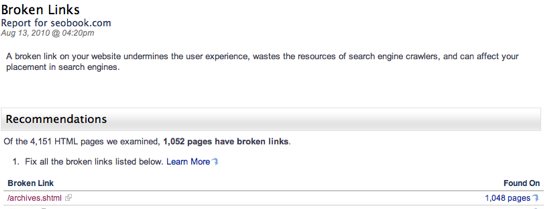

Broken Links

Checks broken links (internal and external) and groups them by page, which is an expandable option similar to the other reports:

Xenu is more comprehensive as a standalone tool for this kind of report (and for some of their other link reports as well).

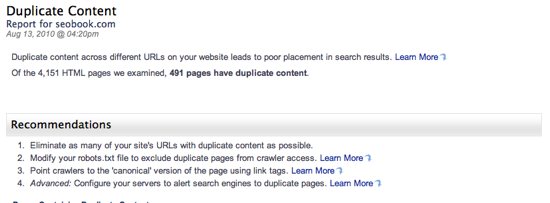

Duplicate Content

The Duplicate Content report groups all the pages that have the same content together and gives you some recommendations on things you can do to help with duplicate content like:

- Working with robots.txt

- How to use canonical tags

- Using HTTP headers to thwart duplicate content issues

Here is how they group items together:

Anything that can give you some decent insight into potential duplicate content issues (especially if you use a CMS) is a useful tool.
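We don't know how Alexa decides two pages are duplicates. One simple approach, shown here purely as an assumption, is to hash each page's text after lowercasing and collapsing whitespace, then group URLs that share a hash. This only catches exact matches; near-duplicates would need something fuzzier.

```python
import hashlib
from collections import defaultdict


def duplicate_groups(pages):
    """Group URLs whose normalized text is identical.

    pages: dict mapping URL -> extracted page text.
    Returns a sorted list of URL groups with two or more members.
    """
    groups = defaultdict(list)
    for url, text in pages.items():
        normalized = " ".join(text.lower().split())   # case and whitespace insensitive
        digest = hashlib.sha256(normalized.encode("utf-8")).hexdigest()
        groups[digest].append(url)
    return sorted(sorted(urls) for urls in groups.values() if len(urls) > 1)


if __name__ == "__main__":
    sample_pages = {
        "/post": "Ten tips for better titles",
        "/post?print=1": "Ten tips   for better titles\n",
        "/about": "About this site",
    }
    print(duplicate_groups(sample_pages))
```

A CMS that serves the same post at a print URL, a tag URL, and a dated archive URL will show up here as one group with several members.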

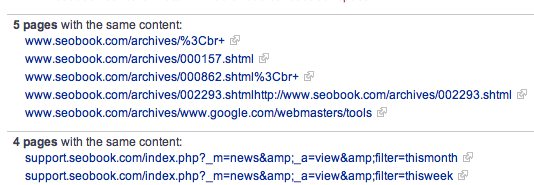

Duplicate Meta Descriptions

No duplicate meta descriptions here!

Fairly self-explanatory, and while a meta description isn't incredibly powerful as a standalone metric, it does pay to make sure you have unique ones for your pages as every little bit helps!

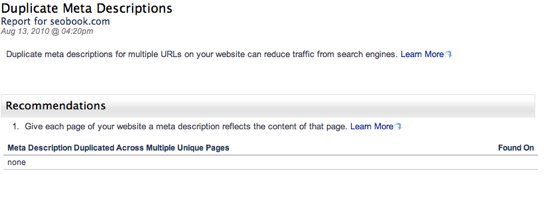

Duplicate Title Tags

You'll want to make sure you are using your title tags properly and not attacking the same keyword or keywords in multiple title tags on separate pages. Much like the other reports here, Alexa will group the duplicates together:

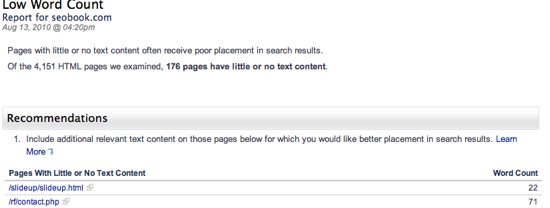

Low Word Count

Having a good amount of text on a page is a good way to work in your core keywords as well as to help in ranking for longer tail keywords (which tend to drive lots of traffic to most sites). This report kicks out pages which have (in looking at the stats) fewer than 150 words or so on the page:

There's no real magic bullet for the number of words you "should" have on a page. You want to have the right balance of word counts, images, and overall presentation components to make your site:

- Linkable

- Textually relevant for your core and related keywords

- Readable for humans
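For a quick look at word counts on your own pages, something like the following works with only the Python standard library. It is a sketch: the 150-word threshold is just our reading of the report's stats, and the counting rule (whitespace-separated tokens outside `script` and `style` tags) is an assumption, not Alexa's definition.

```python
from html.parser import HTMLParser


class _WordCounter(HTMLParser):
    """Counts whitespace-separated words, skipping script and style content."""

    def __init__(self):
        super().__init__()
        self.words = 0
        self._skip_depth = 0

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        if not self._skip_depth:
            self.words += len(data.split())


def word_count(html):
    counter = _WordCounter()
    counter.feed(html)
    counter.close()
    return counter.words


def low_word_count_pages(pages, threshold=150):
    """pages: dict of URL -> HTML. Returns URLs with fewer than threshold words."""
    return sorted(url for url, html in pages.items() if word_count(html) < threshold)


if __name__ == "__main__":
    print(word_count("<p>one two <b>three</b></p><script>var x = 1;</script>"))
```

Treat the output as a list of pages to eyeball rather than a list of pages to pad with text.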

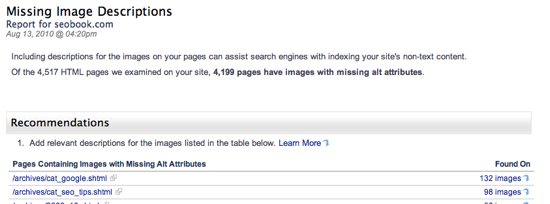

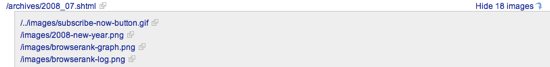

Image Descriptions

Continuing on with the "every little bit helps" mantra, you can see pages that have images with missing ALT attributes:

Alexa groups the images per page, so just click the link to the right to expand the list:

Like meta descriptions, this is not a mega-important item as a standalone metric but it helps a bit and helps with image search.
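Finding images without an ALT attribute is easy to script. A minimal sketch using Python's built-in HTML parser follows; the helper name is hypothetical, and it treats an empty `alt=""` as present, which may or may not match Alexa's check.

```python
from html.parser import HTMLParser


class _ImageAudit(HTMLParser):
    """Collects the src of every <img> tag that has no alt attribute at all."""

    def __init__(self):
        super().__init__()
        self.missing = []

    def handle_starttag(self, tag, attrs):
        if tag == "img":
            attributes = dict(attrs)
            if "alt" not in attributes:
                self.missing.append(attributes.get("src") or "")


def images_missing_alt(html):
    audit = _ImageAudit()
    audit.feed(html)
    audit.close()
    return audit.missing


if __name__ == "__main__":
    page = '<img src="chart.png"><img src="logo.png" alt="Site logo">'
    print(images_missing_alt(page))
```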

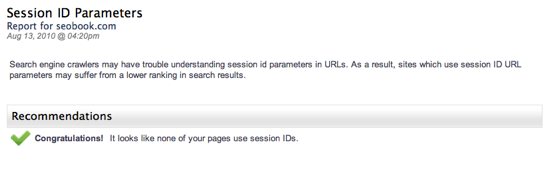

Session IDs

This report will show you any issues your site is having due to the use of session IDs.

If you have issues with session IDs and/or other URL parameters here, you should take a look at using canonical tags or Google's parameter handling (mostly to increase the efficiency of your site's crawl by Googlebot, as Google will typically skip the crawling of pages based on your parameter list).
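On the canonicalization side, the idea is to map every session-flavored URL back to one clean URL. A small sketch, assuming the session ID lives in a query parameter with one of a few common names (the `SESSION_PARAMS` set is a guess; adjust it to whatever your platform uses). Session IDs embedded in the path itself are not handled here.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Assumed parameter names; compared case-insensitively.
SESSION_PARAMS = {"sid", "sessionid", "phpsessid", "jsessionid"}


def strip_session_params(url):
    """Return url with any session-style query parameters removed."""
    parts = urlsplit(url)
    kept = [
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key.lower() not in SESSION_PARAMS
    ]
    return urlunsplit(
        (parts.scheme, parts.netloc, parts.path, urlencode(kept), parts.fragment)
    )


if __name__ == "__main__":
    print(strip_session_params("http://example.com/page?id=7&PHPSESSID=abc123"))
```

The cleaned URL is the one you would point a canonical tag at, so that every session variant of a page refers back to the same address.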

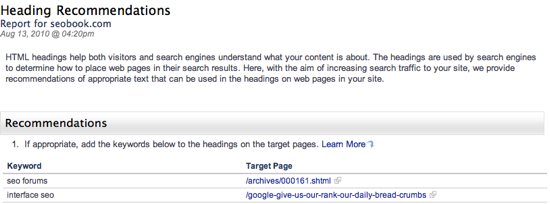

Heading Recommendations

Usually I cringe when I see automated SEO solutions. The headings section contains "recommended" headings for your pages. You can download the entire list in CSV format:

The second one listed, "interface seo", is on a page which talks about Google adding breadcrumbs to the search results. I do not think that is a good heading tag for this blog post. I suspect most of the automated tags are going to be average to less than average.

Keywords

Alexa's Keyword module offers recommended keywords to pursue as well as on-site recommendations in the following sub-categories:

- Search Engine Marketing (keywords)

- Link Recommendations (on-site link recommendations)

Search Engine Marketing

Based on your site's content Alexa offers up some keyword recommendations:

The metrics are defined as:

- Query - the proposed keyword

- Opportunity - (scales up to 1.0) based on expected search traffic to your site from keywords which have a low CPC. A higher value here typically means a higher query popularity and a low QCI. Essentially, the higher the number the better the relationship is between search volume, low CPC, and low ad competition.

- Query Popularity - (scales up to 100) based on the frequency of searches for that keyword

- QCI - (scales up to 100) based on how many ads are showing across major search engines for the keyword

For me, it's another keyword source. The custom metrics are ok to look at but what disappoints me about this report is that they do not align the keywords to relevant pages. It would be nice to see "XYZ keywords might be good plays for page ABC based on ABC's content".

Link Recommendations

This is kind of an interesting report. You've got 3 sets of data here. The first is the "source page": a listing of pages that, according to Alexa's crawl, appear to be important to search engines and are easily reached by crawlers:

These are pages Alexa feels should be pages you link from. The next 2 data sets are in the same table. They are "target pages" and keywords:

Some of the pages are similar but the attempt is to match up pages and predict the anchor text that should be used from the source page to the target page. It's a good idea but there's a bit of page overlap which detracts from the overall usefulness of the report IMO.

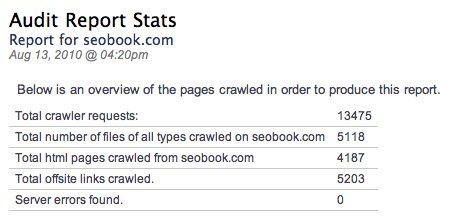

Stats

The Stats section offers 3 different reports:

- Report Stats - an overview of crawled pages

- Crawler Errors - errors Alexa encountered in crawling your site

- Unique Hosts Crawled - number of unique hosts (your domain and internal/external domains and sub-domains) Alexa encountered in crawling your site

Report Stats

An overview of crawl statistics:

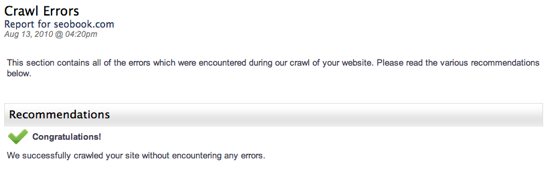

Crawler Errors

This is where Alexa would show what errors, if any, they encountered when crawling the site.

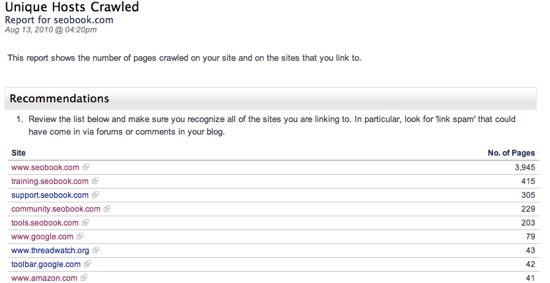

Unique Hosts Crawled

A report showing which sites you are linking to (as well as your own domain/subdomains).

Is it Worth $199?

Some of the report functionality is handled by free (in some cases) tools that are available to you. Xenu does a lot of what Alexa's link modules do and if you are a member here the Website Health Check Tool does some of the on-page stuff as well.

I would also like to see more export functionality, especially given the lack of white label reporting. The crawling features are kind of interesting and the price point is fairly affordable as a one-time fee.

The Alexa Site Audit Report does offer some benefit IMO and the price point isn't overly cost-prohibitive but I wasn't really wowed by the report. If you are ok with spending $199 to get a broad overview of things then I think it's an ok investment. For larger sites sometimes finding (and fixing) only 1 or 2 major issues can be worth thousands in additional traffic.

It left me wanting a bit more though, so I might prefer to spend that $199 on links since most of the tool's functionality is available to me without dropping down the fee. Further, the new SEOmoz app also covers a lot of these features & is available at a monthly $99 price-point, while allowing you to run reports on up to 5 sites at a time. The other big thing for improving the value of the Alexa application would be if they allowed you to run a before and after report as part of their package. That way in-house SEOs can not only show their boss what was wrong, but can also use that same 3rd party tool as verification that it has been fixed.

Comments

Hey,

Thanks for doing a test run on the Alexa site audit, for sharing an idea of what to expect and of your thoughts on what you got for your money.

Will help others shopping for a site audit and should help Alexa to consider improving their product, too.

Good stuff!

Take care.

Your conclusion was already the first thought that ran through my mind; SEOmoz' new web app is cheaper and offers way more for the money you give them.

I think they should face-palm themselves with this, Alexa that is.

I thought about getting an Alexa audit. If I can get the same things without dropping $199, then I would just put that money towards reputable SEO directories.

... $199 won't buy you too many listings. Some of the better known web directories charge $299 each (and in some cases that is a recurring annual cost). The lower down the cost you go (say like the $5 or $20 links) the greater the likelihood that the sites over-represented in its population (and thus your link co-citation if you list there) would be the types of sites which have accrued various Google penalties.

That could lead to a couple different ways to get penalized: either Google algorithmically determining which link sources most commonly link to poor quality sites & then either not counting those links or penalizing sites which get too high of a percent of their links from those sites, OR Google could use aggregate disavow data to create seed lists of sites to use to do the same sorts of things.
