Triple Your Google AdWords CTR Overnight by Doing Nothing, Guaranteed!

I have seen many ad studies that empirically proved that the person doing the study did not collect enough data to publish their findings as irrefutable facts. While recently split testing my new and old landing pages, I came across an example that collected far more data than many of these studies do:

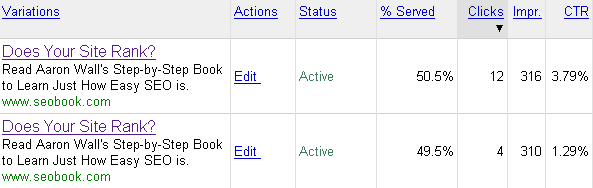

Same ad title. Same ad copy. Same display URL. Same keyword. And yet one has 3x the CTR of the other.

If you collect enough small data samples you can prove whatever point you want to. And you may even believe you are being honest when you do so. Numbers don't lie, but they don't always tell the truth, either.

Want to know when your data is accurate? Create another ad in the split group that is an exact copy of one of the ads being tested. Once the variance between the two clones goes away, the rest of the split test data might be a bit more believable.
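One way to see why the clone check works is to simulate it. The sketch below is a minimal A/A simulation under assumed conditions: both clones share one true CTR, each gets the same number of impressions, and every impression is an independent click/no-click event. The function name `aa_test_spread` and the example CTR and impression counts are hypothetical, not taken from the ads in the screenshot.

```python
import random


def aa_test_spread(true_ctr, impressions_per_ad, trials, ratio=3.0, seed=0):
    """Simulate `trials` A/A tests of two identical ads.

    Both clones share the same true CTR, so any gap in observed CTR is
    pure sampling noise. Returns the fraction of trials in which one
    clone's observed CTR is at least `ratio` times the other's.
    Trials where either clone got zero clicks are skipped, because the
    ratio is undefined there.
    """
    rng = random.Random(seed)  # fixed seed so runs are repeatable
    big_gaps = 0
    counted = 0
    for _ in range(trials):
        # Each impression is a click with probability true_ctr.
        clicks_a = sum(rng.random() < true_ctr for _ in range(impressions_per_ad))
        clicks_b = sum(rng.random() < true_ctr for _ in range(impressions_per_ad))
        if clicks_a == 0 or clicks_b == 0:
            continue
        counted += 1
        # Equal impressions, so the CTR ratio equals the click ratio.
        high, low = max(clicks_a, clicks_b), min(clicks_a, clicks_b)
        if high >= ratio * low:
            big_gaps += 1
    return big_gaps / counted if counted else 0.0


if __name__ == "__main__":
    print("300 impressions per clone:", aa_test_spread(0.02, 300, 2000))
    print("10,000 impressions per clone:", aa_test_spread(0.02, 10_000, 100))
```

With a 2% true CTR and 300 impressions per clone, a non-trivial fraction of simulated A/A tests show a 3x gap purely from noise; at 10,000 impressions per clone that gap essentially never appears.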

Comments

This might help.

Sample size calculator.

http://www.surveysystem.com/sscalc.htm
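For reference, calculators like that one typically use Cochran's formula for estimating a proportion, n = z² · p(1 - p) / E², optionally with a finite population correction. We haven't verified the exact formula that page uses, so treat this Python sketch as an assumption; `sample_size` is a hypothetical helper name, and `margin_of_error` is expressed as a proportion (0.005 means ±0.5 percentage points).

```python
import math
from statistics import NormalDist


def sample_size(margin_of_error, confidence=0.95, p=0.5, population=None):
    """Cochran's sample size for estimating a proportion p to within
    +/- margin_of_error at the given two-sided confidence level."""
    # z score for the two-sided confidence level (1.96 for 95%).
    z = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    n0 = z * z * p * (1 - p) / margin_of_error ** 2
    if population is not None:
        # Finite population correction shrinks n for small populations.
        n0 = n0 / (1 + (n0 - 1) / population)
    return math.ceil(n0)


if __name__ == "__main__":
    # Hypothetical: CTR expected near 2%, want +/- 0.5 points at 95%.
    print(sample_size(0.005, p=0.02))
```

With those hypothetical inputs the sketch asks for 3,012 impressions per ad, which shows how quickly "enough data" grows when the CTR itself is small.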

I use http://www.splittester.com/

There are definitely tricks that can help you significantly improve your CTR overnight. But I agree, statistical accuracy is important.

I usually use splittester too, BUT... plug in the info from Aaron's example, and my trusty tool says:

Guess I need to watch that 5% more closely and be more patient w/ my sample sizes. There goes another one of my shortcuts to thinking for myself.
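Split-testing tools like this usually report a confidence level for the difference between two CTRs. A common way to compute one is a pooled two-proportion z-test; we don't know which test splittester.com actually runs, so this Python sketch is an assumption. It reports 2Φ(|z|) - 1 as the two-sided confidence. `ctr_confidence` and the click counts in the example are hypothetical.

```python
import math
from statistics import NormalDist


def ctr_confidence(clicks_a, imps_a, clicks_b, imps_b):
    """Two-sided confidence that ads A and B have different CTRs,
    using a pooled two-proportion z-test (normal approximation)."""
    p_a = clicks_a / imps_a
    p_b = clicks_b / imps_b
    # Under the null hypothesis both ads share one CTR, estimated by pooling.
    pooled = (clicks_a + clicks_b) / (imps_a + imps_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / imps_a + 1 / imps_b))
    if se == 0:
        return 0.0  # no clicks (or all clicks): nothing to distinguish
    z = abs(p_a - p_b) / se
    # Confidence = 1 - two-sided p-value = 2 * Phi(|z|) - 1.
    return 2 * NormalDist().cdf(z) - 1


if __name__ == "__main__":
    # Hypothetical: 3 clicks / 100 impressions vs 1 click / 100 impressions.
    print(round(ctr_confidence(3, 100, 1, 100), 3))
```

Here a 3x CTR gap on 100 impressions each yields only about 69% confidence, well short of the usual 95% bar, and the normal approximation itself is shaky with so few clicks.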

Thanks... jerk

In the old days of statistics, the rule of thumb was that 30 was a good sample size.

To further clarify... were both set up at the same time with the same pricing for content and search?

The reason I ask is that I have campaigns with the same ads, but I happen to get a higher click-through rate on content targeting. And if I set up a new ad, it takes some time for content targeting to start.

Both were set up at the same time, with Google search delivery only... no content targeting.

I am using splittester too, but I do not base my decision on it alone. Sometimes I wait until the ads get 30 to 50 clicks before I decide to do anything.
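The "30" rule of thumb comes from the central limit theorem for averages. For a click-through rate, a common rough check before trusting a normal-approximation test is that each ad has at least about 10 clicks and 10 non-clicks (some texts use 5). A minimal Python sketch of that check; `ready_to_compare` and the `min_count` default are assumptions, not a standard.

```python
def ready_to_compare(clicks_a, imps_a, clicks_b, imps_b, min_count=10):
    """Rough check that both ads have enough clicks AND non-clicks
    for a normal-approximation CTR test to be reasonable."""
    for clicks, imps in ((clicks_a, imps_a), (clicks_b, imps_b)):
        non_clicks = imps - clicks
        if clicks < min_count or non_clicks < min_count:
            return False
    return True


if __name__ == "__main__":
    print(ready_to_compare(8, 400, 3, 400))      # too few clicks
    print(ready_to_compare(35, 1500, 30, 1500))  # enough for the test
```

Passing the check only means the approximation is reasonable; it says nothing about whether the difference between the ads is significant.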

You gotta remember that those ads were shown for different keyword queries. Your example would only prove something if you targeted these ads to a single keyphrase. Otherwise, the studies with minimal data are worthless, as you said.

Aaron,

You are right when you write "Numbers don't lie, but they don't always tell the truth, either."

However we will never be able to know what is accurate (with a capital "A")... In the example you give, for the period when the ads were served (since Google was not serving the same ad at the same time) the data is accurate. One ad performed better than the other for the period when it was shown (I'm guessing here that even though both ads have the exact same everything... there is something in the Google system that labels them as different ads).

But that aside, we will never be able to know what is accurate until Google controls all media and can tell us which ad performs better based on a common set of user behaviors in the research/purchase lifecycle before clicking on an ad.

So we actually will never know why one ad performs better than another... unless we know how many times someone has been exposed to your ad, how many times they have heard about your brand, how many other mediums they interacted with that reinforced your brand and what happened in their lives that made them say "Yes, I'll click now."

Better yet, what do you think are the ultimate information sources that an ad-serving network could have access to and combine to determine (almost) ultimate accuracy in CTR?

BTW: I'm very tired... in the morning I may read this and think "What the hell was I thinking when I wrote that rambling crap!"

Hi Aaron,

Totally agree; these sorts of small-scale tests are pretty much useless. Too often I have seen conclusions drawn from small sample sizes, or even worse, from a keyword group that includes several keywords.

Aaron, was there only one keyword in the keyword group?

J.

Ok Aaron,

Admit it, you used the magic pixie dust on ad one and nothing on ad 2. It's pretty obvious you don't want to share it, but do you really have to rub our noses in it?

That's awesome, Aaron. I would never have thought to test ads this way. Not sure how controlled the experiment was (Julian's point about keywords is an important one), but nonetheless, this type of testing should be done before coming to any specific conclusions.

I think it helps to turn off Google's Optimized delivery also. I get some funky results sometimes with it turned on, even when ad text is the same.

I'm pretty sure some people take ads that were displayed at different time periods and compare them as well. This is another thing that can screw up your data.

In one post I read, these people were convinced that leaving the words "up" and "on" uncapitalized doubled their clickthrough rate (or something close to this, can't remember the exact post). It's like come on.

They had an alright data set (better than the one you're listing above), but one ad had definitely been circulated more than the other.

I think if you're posting a new ad against an older ad, you might get some stuff going on there as well because it takes some time for Google to update quality scores. Don't you think?

betterppc.com is a great tool as well.

I also just made a comment on the Shoemoney blog about his AdWords test.

My first impressions on your test are:

1. The samples (both numbers of impression & clicks) are very small.

2. It takes time for ads to be distributed across Google partners' sites.

3. There are many factors that affect new ads.

The conclusion can't be based on these small samples and a short testing time.

Aaron, I think it's premature to draw any real conclusion from a total of only 16 clicks. I wonder if you are going to revisit this when you have collected a larger data sample.

"Same ad title. Same ad copy. Same display URL. Same keyword."

Did you use broad, phrase or exact matching for the keyword used?

How about negative keywords?

Keyword matching matters and can distort the outcome.

Google Optimizer will tell you when it thinks you have all the results you need for an accurate decision.

This week it took about 1,200 impressions of a test with 3 versions before Google finally told me version 2 had a 100% chance of beating the other 2.

My test had a clear winner. I could have told you the winner at 300 impressions, but mathematically I would not have been correct until enough tests had been run. What is the math Google Optimizer uses to determine a 100% winner? I don't know, but I bet it takes a boatload more than 1,200 impressions when the two competitors are extremely close. Luckily, in my case, there was a clear winner.
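The intuition that close competitors need far more data can be made concrete with the standard fixed-sample formula for comparing two proportions (two-sided significance level alpha, power 1 - beta). This is not necessarily what Google Optimizer does; it is a minimal Python sketch, and `impressions_per_ad` and the example rates are hypothetical.

```python
import math
from statistics import NormalDist


def impressions_per_ad(p1, p2, alpha=0.05, power=0.8):
    """Impressions needed per ad to detect CTR p1 vs p2 with a
    two-sided two-proportion test at level alpha and the given power."""
    if p1 == p2:
        raise ValueError("p1 and p2 must differ")
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_beta = NormalDist().inv_cdf(power)
    p_bar = (p1 + p2) / 2  # average rate under the null hypothesis
    numerator = (
        z_alpha * math.sqrt(2 * p_bar * (1 - p_bar))
        + z_beta * math.sqrt(p1 * (1 - p1) + p2 * (1 - p2))
    ) ** 2
    return math.ceil(numerator / (p1 - p2) ** 2)


if __name__ == "__main__":
    print(impressions_per_ad(0.02, 0.06))   # a clear winner
    print(impressions_per_ad(0.02, 0.025))  # two close competitors
```

With these defaults, telling 2% from 6% needs 376 impressions per ad, while telling 2% from 2.5% needs roughly 13,800, which fits the point that a close race takes far more than 1,200 impressions.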

Google's definition of "Chance to beat all"

The "chance to beat all" value is the probability that, as the experiment progresses, the given combination's mean conversion rate will beat that of all other combinations. When the probability is high, then the combination is a good candidate to adopt in your web page.
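Google's wording describes the probability that one combination's rate ends up highest. One common way to estimate such a probability (not necessarily Google's method) is Bayesian: give each combination a Beta posterior over its conversion rate and count, over many random draws, how often each one comes out on top. A minimal Python sketch assuming a uniform Beta(1, 1) prior; `chance_to_beat_all` and the example counts are hypothetical.

```python
import random


def chance_to_beat_all(results, draws=20000, seed=0):
    """Estimate, for each (conversions, trials) pair in `results`, the
    probability that its true rate is the highest of all combinations.

    Each rate gets a Beta(1 + conversions, 1 + trials - conversions)
    posterior (uniform prior); we sample all posteriors `draws` times
    and count how often each combination has the largest sample.
    """
    rng = random.Random(seed)
    wins = [0] * len(results)
    for _ in range(draws):
        samples = [rng.betavariate(1 + c, 1 + n - c) for c, n in results]
        wins[samples.index(max(samples))] += 1
    return [w / draws for w in wins]


if __name__ == "__main__":
    # Hypothetical three-way test with 400 visitors per version.
    print(chance_to_beat_all([(5, 400), (30, 400), (6, 400)]))
```

Because the estimate comes from a finite number of draws, a very likely winner can show up as 1.0, which is one way a "100%" figure can appear even though the true probability is slightly below certainty.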

With CTR, one factor might simply be who sees the ads at what time of day. So if you have two identical ads and one has a 3% higher CTR, perhaps it is because of WHO was doing the searching, not because there is something magical about one or the other. I think in certain groups of people there is some "ad blindness".

For example, if, say, 5-6 pm has business owners searching, maybe you get a higher CTR than if you have affiliates searching at 3 AM. I wish Google would implement something to track this sort of thing. College kids searching for MP3 are going to have very different results from a 14 y/o searching for MP3. Anyone know of any third-party tracking for this kind of thing?
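The audience-mix effect described above can be illustrated with a small worked example. Assume, hypothetically, that daytime searchers click at 4% and overnight searchers at 1%, and that two identical ads happen to be served in different proportions across those periods. Blended CTR is just total clicks over total impressions; `blended_ctr` is a hypothetical helper and all numbers are made up.

```python
def blended_ctr(segments):
    """Overall CTR from (impressions, segment_ctr) pairs:
    total expected clicks divided by total impressions."""
    total_imps = sum(imps for imps, _ in segments)
    total_clicks = sum(imps * ctr for imps, ctr in segments)
    return total_clicks / total_imps


if __name__ == "__main__":
    # Same per-segment CTRs for both ads; only the serving mix differs.
    ad_a = blended_ctr([(800, 0.04), (200, 0.01)])  # mostly daytime
    ad_b = blended_ctr([(200, 0.04), (800, 0.01)])  # mostly overnight
    print(ad_a, ad_b)
```

Ad A ends up at 3.4% and ad B at 1.6%, more than a 2x gap, even though each ad performs identically within every time segment.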

I agree... it sounds like using exact match in all testing is the best strategy. Do you agree?

If you were using anything other than exact, the keywords may not have been the same.

It was about 6 months ago, but I think that category was exact match or exact match + phrase match (no broad match).

Did you stop the test or did you accumulate more clicks and impressions? Did the results change?
