The Search Engine Marketing Glossary

Each definition on this page is an internal anchor that links to itself, so if you wanted to link to the PageRank definition on this page you would scroll down to PageRank and click on it. The address bar would then show http://www.seobook.com/glossary/#pagerank, which links directly to that definition.

Why?

We are often asked "what does this mean?" and we received many comments requesting an SEO glossary, so we decided to create this page. :)

Personal experiences make everyone biased, but the bias in any of the following definitions is one which aims to skew toward blunt & honest (rather than wrapping things in a coat of political correctness and circular cross-referenced obscurity). There was no groupthink or hidden agenda applied to this page, and any bias in it should be a known bias - mine. :) Here is a list of some of my known flaws and biases in my world view.

This page is licensed under a Creative Commons license. You can modify it, distribute it, sell it, and keep the rights to whatever you make. How you use any of the content from this page is up to you.

This work is licensed under a Creative Commons Attribution 2.5 License.

Glossary:

0 - 9

Some poorly developed content management systems return 200 status codes even when a file does not exist. The proper response for file not found is a 404.

This is the preferred method of redirecting for most pages or websites. If you are going to move an entire site to a new location you may want to test moving a file or folder first, and then if that ranks well you may want to proceed with moving the entire site. Depending on your site authority and crawl frequency it may take anywhere from a few days to a month or so for the 301 redirect to be picked up.

See also:

- W3C HTTP 1.1 Status Code Definitions

- On Apache servers you can redirect URLs in a .htaccess file or via the headers of some dynamic pages. Most web hosts run on Apache.

- On IIS servers you can redirect using ASP or ASP.net, or from within the internet manager.

As it relates to SEO, it is typically best to avoid using 302 redirects. Some search engines struggle with redirect handling. Due to poor processing of 302 redirects, some search engines have allowed competing businesses to hijack the listings of competitors.

Some content management systems send 404 status codes when documents do exist. Ensure files that exist do give a 200 status code and requests for files that do not exist give a 404 status code. You may also want to check with your host to see if you can set up a custom 404 error page which makes it easy for site visitors to

- view your most popular and / or most relevant navigational options

- report navigational problems within your site

Search engines request a robots.txt file to see what portions of your site they are allowed to crawl. Many browsers request a favicon.ico file when loading your site. While neither of these files is necessary, creating them will help keep your log files clean so you can focus on whatever other errors your site might have.
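The status code behavior described in this section (200 for files that exist, 301 for pages that have permanently moved, 404 for everything else) can be sketched in Python. This is only a minimal illustration, not how any particular server or content management system works. The `REDIRECTS` table, the `EXISTING` set, and the `respond` function are hypothetical names made up for the example.

```python
from http import HTTPStatus

# Hypothetical site data: pages that moved, and pages that currently exist.
REDIRECTS = {"/old-page.html": "/new-page.html"}
EXISTING = {"/", "/new-page.html", "/robots.txt", "/favicon.ico"}


def respond(path):
    """Return a (status, headers) pair for a requested path."""
    if path in REDIRECTS:
        # Permanent move: answer 301 and point at the new location.
        return HTTPStatus.MOVED_PERMANENTLY, {"Location": REDIRECTS[path]}
    if path in EXISTING:
        return HTTPStatus.OK, {}
    # The file does not exist, so the answer should be 404, never 200.
    return HTTPStatus.NOT_FOUND, {}


for requested in ("/old-page.html", "/robots.txt", "/missing.html"):
    status, headers = respond(requested)
    print(requested, int(status), headers)
```

Keeping the three cases in one place makes it easy to spot the mistakes mentioned above, such as a missing page that answers 200 or an existing page that answers 404.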

A

See also:

- Google AdSense heat map - shows ad clickthrough rate estimates based on ad positioning.

Example absolute link

<a href="http://seobook.com/folder/filename.html">Cool Stuff</a>

Example relative link

<a href="../folder/filename.html">Cool Stuff</a>
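A relative link only makes sense in combination with the address of the page it appears on. As a rough sketch, Python's standard `urllib.parse.urljoin` resolves a relative link the way a browser would. The page address `http://seobook.com/blog/post.html` is a made-up location for the page holding the link.

```python
from urllib.parse import urljoin

# Hypothetical address of the page that contains the link.
page_url = "http://seobook.com/blog/post.html"

relative_href = "../folder/filename.html"
absolute_href = "http://seobook.com/folder/filename.html"

# The relative link is resolved against the page it sits on.
resolved = urljoin(page_url, relative_href)
print(resolved)

# An absolute link resolves to itself no matter where it appears.
print(urljoin(page_url, absolute_href))
```

In this example both links end up pointing at the same file, but the relative one would point somewhere else if the page holding it were moved.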

See also:

- Google Inside Search: Page layout algorithm improvement

- Coalition for Better Ads - not-for-profit front group created by Google to promote Google's economic interests by demonetizing competing publishers and ad networks

- RankRanger: The Wrath of the May 2020 Core Update and the Lessons Learned - analysis of an update noting

- Chromium Blog: Protecting against resource-heavy ads in Chrome

Ad Retargeting (see retargeting)

While it has a few cool features (including dayparting and demographic based bidding) it is still quite nascent in nature compared to Google AdWords. Due to Microsoft's limited marketshare and program newness many terms are vastly underpriced and present a great arbitrage opportunity.

AdSense offers a highly scalable automated ad revenue stream which will help some publishers establish a baseline for the value of their ad inventory. In many cases AdSense will be underpriced, but that is the trade off for automating ad sales.

AdSense ad auction formats include

- cost per click - advertisers are only charged when ads are clicked on

- CPM - advertisers are charged a certain amount per ad impression. Advertisers can target sites based on keyword, category, or demographic information.

AdSense ad formats include

- text

- graphic

- animated graphics

- videos

In some cases I have seen ads which got a 2 or 3% click through rate (CTR), while sites that are optimized for maximum CTR (through aggressive ad integration) can obtain as high as a 50 or 60% CTR depending on

- how niche their site is

- how commercially oriented their site is

- the relevancy and depth of advertisers in their vertical
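For reference, click through rate is simply clicks divided by ad impressions. A minimal sketch of the arithmetic, with the function name `click_through_rate` and the sample numbers invented for illustration:

```python
def click_through_rate(clicks, impressions):
    """Return CTR as the percentage of impressions that were clicked."""
    if impressions <= 0:
        raise ValueError("impressions must be positive")
    return 100.0 * clicks / impressions


# Made-up numbers: 25 clicks on 1,000 impressions versus 550 clicks.
print(click_through_rate(25, 1000))
print(click_through_rate(550, 1000))
```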

It is also worth pointing out that if you are too aggressive in monetizing your site before it has built up adequate authority your site may never gain enough authority to become highly profitable.

Depending on your vertical your most efficient monetization model may be any of the following

- AdSense

- affiliate marketing

- direct ad sales

- selling your own products and services

- a mixture of the above

See also:

- Google AdSense program - sign up as an ad publisher

- Google AdSense heat map - shows ad clickthrough rate estimates based on ad positioning.

- Google AdWords - buy ads on Google search and / or contextually relevant web pages.

AdWords is an increasingly complex marketplace. One could write a 300 page book just covering AdWords. Rather than doing that here I thought it would be useful to link to many relevant resources.

See also:

- Google AdWords - sign up for an advertiser account

- Google Advertising Professional Program - program for qualifying as an AdWords expert

- Google AdWords Learning Center - text and multimedia educational modules. Contains quizzes related to each section.

- AdWords Keyword Tool - shows related keywords, advertiser competition, and relative search volume estimates.

- Google Traffic Estimator - estimates bid prices and search volumes for keywords.

- Free PPC tips [PDF] - my ebook offering free pay per click advice.

- Andrew Goodman's Google AdWords Handbook - costs roughly $75, but is well worth it

Ad Heavy Page Layout (see Top Heavy)

Most affiliates make next to nothing because they are not aggressive marketers, have no real focus, fall for wasting money on instant wealth programs that lead them to buying a bunch of unneeded garbage via others' affiliate links, and do not attempt to create any real value.

Some power affiliates make hundreds of thousands or millions of dollars per year because they are heavily focused on automation and/or tap large traffic streams. Typically niche affiliate sites make more per unit effort than overtly broad ones because they are easier to focus (and thus have a higher conversion rate).

Selling a conversion is typically harder than selling a click (like AdSense does, for instance). Search engines are increasingly looking to remove the noise that low quality thin affiliate sites add to the search results through the use of

- algorithms which detect thin affiliate sites and duplicate content;

- manual review; and,

- implementation of landing page quality scores on their paid ads.

See also:

- Commission Junction - probably the largest affiliate network

- Linkshare - another large affiliate network

- Performics - another large affiliate network

- Azoogle Ads - ad offer network focused on high margin / high profit verticals

- CPA Empire - similar to AzoogleAds

- Amazon Associates - Amazon's affiliate program

- Clickbank - an affiliate network for selling electronic products and information

Fresh content which is also cited on many other channels (like related blogs) will temporarily rank better than you might expect because many of the other channels which cite the content will cite it off their home page or a well trusted high PageRank page. After those sites publish more content and the reference page falls into their archives those links are typically from pages which do not have as much link authority as their home pages.

Some search engines may also try to classify sites to understand what type of sites they are, as in news sites or reference sites that do not need to be updated that often. They may also look at individual pages and try to classify them based on how frequently they change.

See also:

- Google Patent 20050071741: Information retrieval based on historical data - mentions that document age, link age, link bursts, and link churn may be used to help score the relevancy of a document.

Alexa is heavily biased toward sites that focus on marketing and webmaster communities. While it is not highly accurate, it is free.

Example usage

<img src="http://www.seobook.com/images/whammy.gif" height="140" width="120" alt="Press Your Luck Whammy." />

See also:

- Amazon.com - official site

See also:

- AMP.dev - official home of the project

Ad networks are a game of margins. Marketers who track user action will have a distinct advantage over those who do not.

See also:

- Google Analytics - Google's free analytics program

- Conversion Ruler - a simple and cheap web based analytic tool

- ClickTracks - downloadable and web based analytics software

Search engines assume that your page is authoritative for the words that people include in links pointing at your site. When links occur naturally they typically have a wide array of anchor text combinations. Too much similar anchor text may be considered a sign of manipulation, and thus discounted or filtered. When you are building links that you control, make sure you mix up your anchor text.

Example of anchor text:

<a href="http://www.seobook.com/">Search Engine Optimization Blog</a>

Outside of your core brand terms if you are targeting Google you probably do not want any more than 10% to 20% of your anchor text to be the same. You can use Backlink Analyzer to compare the anchor text profile of other top ranked competing sites.
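One way to check a link profile against the rough 10% to 20% guideline above is to count how often each anchor text appears. The sketch below assumes you already have a list of anchor texts (for example, exported from a backlink tool). The function name `overused_anchors`, the `max_share` default, and the sample data are hypothetical.

```python
from collections import Counter


def overused_anchors(anchors, brand_terms=(), max_share=0.20):
    """Return {anchor_text: share} for non-brand anchors above max_share."""
    normalized = [text.strip().lower() for text in anchors]
    total = len(normalized)
    if total == 0:
        return {}
    brand = {term.strip().lower() for term in brand_terms}
    counts = Counter(normalized)
    return {
        text: count / total
        for text, count in counts.items()
        # Core brand terms are exempt from the guideline.
        if text not in brand and count / total > max_share
    }


# Made-up link profile of ten inbound links.
sample = (
    ["SEO Book"] * 5
    + ["search engine optimization blog"] * 3
    + ["seo tips", "click here"]
)
print(overused_anchors(sample, brand_terms=["SEO Book"]))
```

Here the brand anchor makes up half of the profile but is ignored, while the repeated keyword-rich anchor is flagged because it accounts for more than 20% of the links.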

See also:

- Backlink Analyzer - free tool to analyze your link anchor text

While Google pitches Android as being an "open" operating system, they are only open in markets they are losing & once they establish dominance they shift from open to closed by adding many new restrictions on "partners."

See also:

- Wolf-Howl: AdSense Arbitrage: Tips, Tricks & Secrets

- [audio] Jeremy Shoemaker interviews Kris Jones and part 2

- Wikipedia: arbitrage

See also:

- Ask

- Ask Sponsored Listings - Ask syndicates AdWords ads, but also sells internal pay per click ads as well

- Ask Webmaster Help

Search engines constantly tweak their algorithms to try to balance relevancy algorithms based on topical authority and overall authority across the entire web. Sites may be considered topical authorities or general authorities. For example, Wikipedia and DMOZ are considered broad general authority sites. This site is a topical authority on SEO, but not a broad general authority.

Example potential topical authorities:

- the largest brands in your field

- the top blogger talking about your subject

- the Wikipedia or DMOZ page about your topic

See also:

- Mike Grehan on Topic Distillation [PDF]

- Jon Kleinberg's Authoritative sources in a hyperlinked environment [PDF]

- Jon Kleinberg's home page

- Hypersearching the Web

If you want to program internal bid management software you can get a developer token to use the Google AdWords API.

A few popular bid management tools are

- Atlas OnePoint (formerly known as GoToast)

- BidRank

- KeywordMax

B

Backlink (see Inbound Link)

It is generally easier to get links to informational websites than commercial sites. Some new sites might gain authority much quicker if they tried looking noncommercial and gaining influence before trying to monetize their market position.

In many ways text ads are successful because they are more relevant and look more like content, but with the recent surge in the popularity of text ads some have speculated that people may eventually become text ad blind as well.

Nick Denton stated:

Imagine a web in which Google and Overture text ads are everywhere. Not only beside search results, but next to every article and weblog post. Ubiquity breeds contempt. Text ads, coupled with content targeting, are more effective than graphic ads for many advertisers; but they too, like banners, will suffer reader burnout.

See also:

- Searchblog - blog about the intersection of search, media, and technology.

- The Search - John's book about the history and future of search.

- The Database of Intentions - post about how search engines store many of our thoughts

- Web 2.0 Conference - conference run by John Battelle.

- Battelle's Google speech

See also:

- The Keyword: Understanding searches better than ever before

- SearchEngineLand BERT FAQ

- Google AI Blog: Open Sourcing BERT: State-of-the-Art Pre-training for Natural Language Processing

Any media channel, publishing format, organization, or person is biased by

- how and why they were created and their own experiences

- the current set of social standards in which they exist

- other markets they operate in

- the need for self preservation

- how they interface with the world around them

- their capital, knowledge, status, or technological advantages and limitations

Search engines aim to be relevant to users, but they also need to be profitable. Since search engines sell commercial ads some of the largest search engines may bias their organic search results toward informational (ie: non-commercial) websites. Some search engines are also biased toward information which has been published online for a great deal of time and is heavily cited.

Search personalization biases our search results based on our own media consumption and searching habits.

Large news organizations tend to aim for widely acceptable neutrality rather than objectivity. Some of the most popular individual web authors / publishers tend to be quite biased in nature. Rather than bias hurting one's exposure

- The known / learned bias of a specific author may make their news more appealing than news from an organization that aimed to seem arbitrarily neutral.

- I believe biased channels typically have a larger readership than unbiased channels.

- Most people prefer to subscribe to media which matches their own biased worldview.

- If more people read what you write and passionately agree with it then they are more likely to link at it.

- Things which are biased in nature are typically easier to cite than things which are unbiased.

See also:

- Alejandro M. Diaz's Through the Google Goggles [PDF] - thesis paper on Google's biases

- A Thousand Years of Nonlinear History - looks at economic, biological, and linguistic history

- Manufacturing Consent - Noam Chomsky DVD and book about mainstream media bias toward business interests

- Comparison of the major search algorithms

- Wikipedia: Bias

Bid Management Software (see Automated Bid Management Software)

Search engines are not without flaws in their business models, but there is nothing immoral or illegal about testing search algorithms to understand how search engines work.

People who have extensively tested search algorithms are probably more competent and more knowledgeable search marketers than those who give themselves the arbitrary label of white hat SEOs while calling others black hat SEOs.

When making large investments in processes that are not entirely clear, trust is important. Rather than looking for reasons to not work with an SEO, it is best to look for signs of trust in a person you would like to work with.

See also:

- Black Hat SEO.com - parody site about black hat SEO

- White Hat SEO.com - parody site about white hat SEO

- Honest SEO - site offering tips on hiring an SEO

- SEO Black Hat - blog about black hat SEO techniques

Block level link analysis can be used to help determine if content is page specific or part of a navigational system. It also can help determine if a link is a natural editorial link, what other links that link should be associated with, and/or if it is an advertisement. Search engines generally do not want to count advertisements as votes.

Most blogs tend to be personal in nature. Blogs are generally quite authoritative with heavy link equity because they give people a reason to frequently come back to their site, read their content, and link to whatever they think is interesting.

The most popular blogging platforms are Wordpress, Blogger, Movable Type, and Typepad.

Automated blog spam:

Nice post!

by

Discreat Overnight Viagra Online Canadian Pharmacy Free Shipping

Manual blog spam:

I just wrote about this on my site. I don't know you, but I thought I would add no value to your site other than linking through to mine. Check it out!!!!!

by

cluebag manual spammer (usually with keywords as my name)

As time passes both manual and automated blog comment spam systems are evolving to look more like legitimate comments. I have seen some automated blog comment spam systems that have multiple fake personas that converse with one another.

It allows you to publish sites on a subdomain off of Blogspot.com, or to FTP content to your own domain. If you are serious about building a brand or making money online you should publish your content to your own domain because it can be hard to reclaim a website's link equity and age related trust if you have built years of link equity into a subdomain on someone else's website.

Blogger is probably the easiest blogging software tool to use, but it lacks some features present in other blog platforms.

Example use:

- <b>words</b>

- <strong>words</strong>

Either would appear as words.

Social bookmarking sites are often called tagging sites. Del.icio.us is the most popular social bookmarking site. Yahoo! MyWeb also allows you to tag results. Google allows you to share feeds and / or tag pages. They also have a program called Google Notebook which allows you to write mini guides of related links and information.

There are also a couple of meta news sites that allow you to tag interesting pages. If enough people vote for your story then your story gets featured on the homepage. Slashdot is a tech news site primarily driven by central editors. Digg created a site covering the same type of news, but is a bottom-up news site which allows readers to vote for what they think is interesting. Netscape cloned the Digg business model and content model. Sites like Digg and Netscape are easy sources of links if you can create content that would appeal to those audiences.

Many forms of vertical search, like Google Video or YouTube, allow you to tag content.

See also:

- Del.icio.us - Yahoo! owned social bookmarking site

- Yahoo! MyWeb - similar to Del.icio.us, but more integrated into Yahoo!

- Google Notebook - allows you to note documents

- Slashdot - tech news site where stories are approved by central editors

- Digg - decentralized news site

- Netscape - Digg clone

- Google Video - Google's video hosting, tagging, and search site

- YouTube - popular decentralized video site

Examples:

- A Google search for SEO Book will return results for SEO AND Book.

- A Google search for "SEO Book" will return results for the phrase SEO Book.

- A Google search for SEO Book -Jorge will return results containing SEO AND Book but NOT Jorge.

- A Google search for ~SEO -SEO will find results with words related to SEO that do not contain SEO.
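The AND, phrase, and NOT behavior in the examples above can be sketched as a small matcher. This is a toy illustration of the logic only, not how any search engine is implemented. The function name `matches` is made up, and the `~` related-words operator is not handled because it depends on data the sketch does not have.

```python
import re


def matches(query, text):
    """Return True if text satisfies a simple AND / "phrase" / -NOT query."""
    words = re.findall(r"\w+", text.lower())
    word_set = set(words)
    padded_text = " " + " ".join(words) + " "
    # Each token is either a quoted phrase or a single bare term.
    for phrase, term in re.findall(r'"([^"]+)"|(\S+)', query.lower()):
        if phrase:
            # Phrase: the words must appear together and in order.
            phrase_words = " ".join(re.findall(r"\w+", phrase))
            if " " + phrase_words + " " not in padded_text:
                return False
        elif term.startswith("-"):
            # NOT: the excluded word must be absent.
            if term[1:] in word_set:
                return False
        elif term not in word_set:
            # AND: every remaining term must be present.
            return False
    return True


print(matches('SEO Book', 'A book about SEO'))
print(matches('"SEO Book"', 'A book about SEO'))
print(matches('SEO Book -Jorge', 'SEO Book by Jorge'))
```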

Some search engines also allow you to search for other unique patterns or filtering ideas. Examples:

- A numerical range: 12...18 would search for numbers between 12 and 18.

- Recently updated: seo {frsh=100} would find recently updated documents. MSN search also lets you place more weight on local documents

- Related documents: related:www.threadwatch.org would find documents related to Threadwatch.

- Filetype: AdWords filetype:PDF would search for PDFs that mentioned AdWords.

- Domain Extension: SEO inurl:.edu

- IP Address: IP:64.111.97.133

See also:

- Search Engine Showdown Features Chart - Greg R. Notess's comparison of features at major search engines.

A brand is built through controlling customer expectations and the social interactions between customers. Building a brand is what allows businesses to move away from commodity based pricing and move toward higher margin value based pricing. Search engines may look at signals like repeat website visits & visits to a site based on keywords associated with a known entity or a navigational search query (like searching for a URL) and use them for relevancy signals in algorithms like Panda.

See also:

- Rob Frankel - branding expert who provides free branding question answers every Monday. He also offers Frankel's Laws of Big Time Branding™, blogs, and wrote the branding book titled The Revenge of Brand X.

Some affiliate marketing programs prevent affiliates from bidding on the core brand related keywords, while others actively encourage it. Either way can work depending on the business model, but it is important to ensure there is synergy between internal marketing and affiliate marketing programs.

Example breadcrumb navigation:

Home > SEO Tools > SEO for Firefox

Whatever page the user is on is unlinked, but the pages above it within the site structure are linked to, and organized starting with the home page, right on down through the site structure.
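The linking rule described above (every level linked except the current page) can be sketched as a small helper that builds the breadcrumb HTML. The function name `breadcrumb` and the URLs in the sample trail are hypothetical.

```python
from html import escape


def breadcrumb(trail):
    """Build breadcrumb HTML from (label, url) pairs, home page first."""
    parts = []
    last_index = len(trail) - 1
    for index, (label, url) in enumerate(trail):
        if index == last_index:
            # The page the user is on is shown as plain, unlinked text.
            parts.append(escape(label))
        else:
            parts.append('<a href="%s">%s</a>' % (escape(url, quote=True), escape(label)))
    return " &gt; ".join(parts)


# Made-up URLs for the example trail shown above.
trail = [
    ("Home", "/"),
    ("SEO Tools", "/tools/"),
    ("SEO for Firefox", "/tools/seo-for-firefox/"),
]
print(breadcrumb(trail))
```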

Links may be broken for a number of reasons, but four of the most common reasons are

- a website going offline

- linking to content which is temporary in nature (due to licensing structures or other reasons)

- moving a page's location

- changing a domain's content management system

Most large websites have some broken links, but if too many of a site's links are broken it may be an indication of outdated content, and it may provide website users with a poor user experience, both of which may cause search engines to rank a page as being less relevant.

Xenu Link Sleuth is a free software program which crawls websites to find broken links.

The most popular browsers are Microsoft's Internet Explorer, Mozilla's Firefox, Safari, and Opera.

The buying cycle may consist of the following stages

- Problem Discovery: prospect discovers a need or want.

- Search: after discovering a problem, the prospect looks for ways to solve the need or want. These searches may contain words which revolve around the core problem the prospect is trying to solve or words associated with their identity.

- Evaluate: may do comparison searches to compare different models, and also search for negative information like product sucks, etc.

- Decide: look for information which reinforces your view of the product or service you decided upon.

- Purchase: may search for shipping related information or other price related searches. Purchases may also occur offline.

- Reevaluate: some people leave feedback on their purchases. If a person is enthusiastic about your brand they may cut your marketing costs by providing free highly trusted word of mouth marketing.

See also:

- Waiting for Your Cat to Bark? - book by Bryan & Jeffrey Eisenberg about the buying cycle and Persuading Customers When They Ignore Marketing.

C

Some search engines provide links to cached versions of pages in their search results, and allow you to strip some of the formatting from cached copies of pages.

See also:

- Calacanis.com - Jason's blog

Webmasters should use consistent linking structures throughout their sites to ensure that they funnel the maximum amount of PageRank at the URLs they want indexed. When linking to the root level of a site or a folder index it is best to end the link location at a / instead of placing the index.html or default.asp filename in the URL.

Examples of URLs which may contain the same information in spite of being at different web addresses:

- http://www.seobook.com/

- http://www.seobook.com/index.shtml

- http://seobook.com/

- http://seobook.com/index.shtml

- http://www.seobook.com/?tracking-code
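To show how the addresses above can collapse into one canonical URL, here is a rough normalization sketch. It is an illustration under stated assumptions, not a rule any search engine is known to apply: the `INDEX_FILES` set, the `canonicalize` function, and the choice to drop every query string are all assumptions, and dropping query strings is only safe when they hold nothing but tracking codes.

```python
from urllib.parse import urlsplit, urlunsplit

# Assumed default document names; adjust to match your server.
INDEX_FILES = {"index.html", "index.shtml", "default.asp"}


def canonicalize(url, preferred_host="www.seobook.com"):
    """Map equivalent addresses of the same page onto a single URL."""
    parts = urlsplit(url)
    host = parts.netloc.lower()
    # Treat the bare domain and the www host as the same site.
    if "www." + host == preferred_host:
        host = preferred_host
    directory, _, filename = parts.path.rpartition("/")
    if filename.lower() in INDEX_FILES:
        # End folder index links at the trailing slash.
        path = directory + "/"
    else:
        path = parts.path or "/"
    # Assumption: query strings only carry tracking codes, so drop them.
    return urlunsplit((parts.scheme.lower(), host, path, "", ""))


variants = [
    "http://www.seobook.com/",
    "http://www.seobook.com/index.shtml",
    "http://seobook.com/",
    "http://seobook.com/index.shtml",
    "http://www.seobook.com/?tracking-code",
]
print({canonicalize(url) for url in variants})
```

All five example addresses reduce to a single URL, which is the version you would want every internal link to point at.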

Catalog (see Index)

Google ensured Microsoft's Internet Explorer was fined in Europe & then bundled Chrome in Adobe Flash security updates to install Chrome bundleware on hundreds of millions of computers. After Google gained a dominant share with their web browser through Android bundling, paid installs with security updates for other software & promotion of the browser across their properties, Microsoft eventually gave in and switched to building a web browser based on the Chromium core.

Cloaking has many legitimate uses which are within search guidelines. For example, changing user experience based on location is common on many popular websites.

See also:

- The Definitive Guide to Cloaking - Dan Kramer's guide to cloaking. I also interviewed Dan here.

- KloakIt - cheaply priced cloaking software

- Fantomaster - more expensive cloaking software

See also

- Google Touchgraph - interesting web application that shows the relationship between sites Google returns as being related to a site you enter.

Blog software programs are some of the most popular content management systems currently used on the web. Many content management systems have errors associated with them which make it hard for search engines to index content due to issues such as duplicate content.

Leaving enlightening and thoughtful comments on someone else's related website is one way to help get them to notice you.

See also:

- blog comment spam - the addition of low value or no value comments to other's websites

HTML comments in the source code of a document appear as <!-- your comment here -->. They can be viewed if someone views the source code of a document, but do not appear in the regular formatted HTML rendered version of a document.

In the past some SEOs would stuff keywords in comment tags to help increase the page keyword density, but search has evolved beyond that stage, and at this point using comments to stuff keywords into a page adds to your risk profile and presents little ranking upside potential.

As the number of product databases online increases and duplicate content filters are forced to get more aggressive the keys to getting your information indexed are to have a site with enough authority to be considered the most important document on that topic, or to have enough non compacted information (for example, user reviews) on your product level pages to make them be seen as unique documents.

For example, if a search engine understands a phrase to be related to another word or phrase it may return results relevant to that other word or phrase even if the words you searched for are not directly associated with a result. In addition, some search engines will place various types of vertical search results at the top of the search results based on implied query related intent or prior search patterns by you or other searchers.

See also:

- Google AdSense is the most popular contextual advertising program.

Most offline ads have generally been much harder to track than online ads. Some marketers use custom phone numbers or coupon codes to tie offline activity to online marketing.

Here are a few common examples of desired goals

- a product sale

- completing a lead form

- a phone call

- capturing an email

- filling out a survey

- getting a person to pay attention to you

- getting feedback

- having a site visitor share your website with a friend

- having a site visitor link at your site

Bid management, affiliate tracking, and analytics programs make it easy to track conversion sources.

See also:

- Google Conversion University - free conversion tracking information

- Google Website Optimizer - free multi variable testing product offered by Google.

See also:

- Google AdWords - Google's pay per click ad program which allows you to buy search and contextual ads.

- Google AdSense - Google's contextual ad program.

- Microsoft AdCenter - Microsoft's pay per click ad platform.

- Yahoo! Search Marketing - Yahoo!'s pay per click ad platform

Many people use CPM as a measure of how profitable a website is or has the potential of becoming.
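Since CPM is the cost per thousand ad impressions, a site's effective CPM can be worked out from its earnings and impressions. A minimal sketch of that arithmetic, with the function name `effective_cpm` and the sample figures invented for illustration:

```python
def effective_cpm(earnings, impressions):
    """Return earnings per 1,000 ad impressions."""
    if impressions <= 0:
        raise ValueError("impressions must be positive")
    return earnings * 1000 / impressions


# Made-up example: $50 earned from 20,000 ad impressions.
print(effective_cpm(50.0, 20000))
```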

Since longer searches tend to be more targeted, it is important to try to get most or all of a site indexed such that the deeper pages have the ability to rank for relevant long tail keywords. A large site needs adequate link equity to get deeply indexed. Another thing which may prevent a site from being fully indexed is duplicate content issues.

Sites which are well trusted or frequently updated may be crawled more frequently than sites with low trust scores and limited link authority. Sites with highly artificial link authority scores (ie: mostly low quality spammy links) or sites which are heavy in duplicate content or near duplicate content (such as affiliate feed sites) may be crawled less frequently than sites with unique content which are well integrated into the web.

See also:

- Google's Matt Cutts video on Google Crawling Patterns

- Matt Cutts post Indexing Timeline - mentions sites with unnatural link profiles may not be crawled as frequently or deeply

Note: Using external CSS files makes it easy to change the design of many pages by editing a single file. You can link to an external CSS file using code similar to the following in the head of your HTML documents

<link rel="stylesheet" href="http://www.seobook.com/style.css" type="text/css" />

See also

- W3C: CSS - official guidelines for CSS

- CSS Zen Garden - examples of various CSS layouts

- Glish.com - examples of various CSS layouts, links to other CSS resources

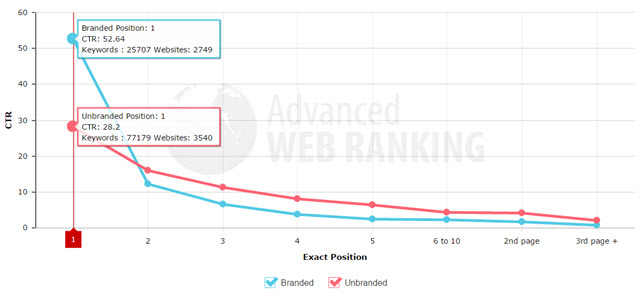

A search engine can determine if a particular search query is navigational (branded) versus informational or transactional by analyzing the relative CTR of different listings on the search result page & the CTR of people who have repeatedly searched for a particular keyword term. A navigational search tends to have many clicks on the top organic listing, while the CTR curve is often far flatter on informational or transactional searches.
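As a toy illustration of the idea above, the share of clicks going to the top organic listing gives a rough sense of how steep or flat a CTR curve is. The function names, the 0.6 threshold, and the click counts below are all hypothetical. Real search engines presumably use far richer signals.

```python
def top_listing_share(clicks_by_position):
    """Return the fraction of all clicks that went to the first listing."""
    total = sum(clicks_by_position)
    if total == 0:
        return 0.0
    return clicks_by_position[0] / total


def looks_navigational(clicks_by_position, threshold=0.6):
    """Guess that a query is navigational when the top result dominates."""
    return top_listing_share(clicks_by_position) >= threshold


# Made-up click counts for listings ranked 1 through 5.
branded_query = [800, 60, 40, 30, 20]
informational_query = [300, 200, 150, 120, 100]

print(looks_navigational(branded_query))
print(looks_navigational(informational_query))
```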

See also:

- Consumer Ad Awareness in Search Results

- AWR CTR curve

- A Taxonomy of Web Search [PDF]

- Determining the Informational, Navigational & Transactional Intent of Web Queries [PDF]

- Automatic Query Type Identification Based on Click Through Information [PDF]

D

Most large high quality websites have at least a few dead links in them, but the ratio of good links to dead links can be seen as a sign of information quality.

When links grow naturally, most high quality websites typically have many links pointing at interior pages. When you request links from other websites it makes sense to request a link from their most targeted relevant page to your most targeted relevant page. Some webmasters even create content based on easy linking opportunities they think up.

Dedicated servers tend to be more reliable than shared (or virtual) servers. Dedicated servers usually run from $100 to $500 a month. Virtual servers typically run from $5 to $50 per month.

A high deep link ratio is typically a sign of a legitimate natural link profile.
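A deep link ratio can be estimated from a list of the URLs that inbound links point at. The sketch below assumes the ratio means links to interior pages divided by all inbound links, which is my reading of the term rather than a formal definition. The function name `deep_link_ratio` and the sample URLs are hypothetical.

```python
from urllib.parse import urlsplit


def deep_link_ratio(inbound_link_targets):
    """Return the share of inbound links that point past the home page."""
    if not inbound_link_targets:
        return 0.0
    deep = sum(
        1
        for url in inbound_link_targets
        # An empty path or "/" means the link points at the home page.
        if urlsplit(url).path not in ("", "/")
    )
    return deep / len(inbound_link_targets)


# Made-up link targets: two home page links and two deep links.
targets = [
    "http://www.seobook.com/",
    "http://seobook.com",
    "http://www.seobook.com/glossary/",
    "http://www.seobook.com/archives/",
]
print(deep_link_ratio(targets))
```

Note that a home page linked as index.html would be counted as a deep link by this simple check, so the targets should be canonicalized first.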

De-indexing may be due to any of the following:

- Pages on new websites (or sites with limited link authority relative to their size) may be temporarily de-indexed until the search engine does a deep spidering and re-cache of the web.

- During some updates search engines readjust crawl priorities.

- You need a significant number of high quality links to get a large website well indexed and keep it well indexed.

- Duplicate content filters, inbound and outbound link quality, or other information quality related issues may also relate to re-adjusted crawl priorities.

- Pages which have changed location and are not properly redirected, or pages which are down when a search engine tries to crawl them may be temporarily de-indexed.

- Search Spam:

  - If a website tripped an automatic spam filter it may return to the search index anywhere from a few days to a few months after the problem has been fixed.

  - If a website is editorially removed by a human you may need to contact the search engine directly to request reinclusion.

Some internet marketing platforms, such as AdCenter and AdWords, allow you to target ads at websites or searchers who fit amongst a specific demographic. Some common demographic data points are gender, age, income, education, location, etc.

See also:

- Nick Denton.org - official blog, where Nick often talks about business and his various blogs.

High quality directories typically prefer that the description describe what the site is about rather than being overtly promotional in nature. Search engines typically

- use a description from a trusted directory (such as DMOZ or the Yahoo! Directory) for homepages of sites listed in those directories

- use the page meta description (especially if it is relevant to the search query and has the words from the search query in it)

- attempt to extract a description from the page content which is relevant for the particular search query and ranking page (this is called a snippet)

- or some combination of the above

Some directories cater to specific niche topics, while others are more comprehensive in nature. Major search engines likely place significant weight on links from DMOZ and the Yahoo! Directory. Smaller and less established general directories likely pull less weight. If a directory does not exercise editorial control over listings, search engines are unlikely to trust its links at all.

Recovering from manual link penalties will often require removing some of the lower quality inbound links & disavowing other low quality links. For automated link penalties, like Penguin, using the disavow tool should be sufficient for penalty recovery; however, Google still has to crawl the pages to apply the disavow to the links. It still may make sense to remove some lower quality links to diminish any future risks of manual penalties. With the rise of negative SEO, publishers in spammy industries may be forced to proactively use the disavow tool.

Document Freshness (see fresh content)

AOL shut down DMOZ on March 14, 2017. Some other sites aim to offer a continuation of the service.

- Curlie - an effort by some former DMOZ editors to create a next version of the directory which is actively maintained.

- ODP.org - a static snapshot of the directory as it appeared when it was shut down.

Some webmasters cloak thousands of doorway pages on trusted domains, and rake in a boatload of cash until they are caught and de-listed. If the page would have a unique purpose outside of search then search engines are generally fine with it, but if the page only exists because search engines exist then search engines are more likely to frown on the behavior.

Search engines do not want to index multiple versions of similar content. For example, printer friendly pages may be search engine unfriendly duplicates. Also, many automated content generation techniques rely on recycling content, so some search engines are somewhat strict in filtering out content they deem to be similar or nearly duplicate in nature.
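To give a feel for how near-duplicate content can be spotted, here is a small sketch that compares two texts by their overlapping word triples (often called shingles) using Jaccard similarity. This is a common textbook technique, not a description of what any particular search engine runs, and the shingle size of 3 is an arbitrary choice.

```python
def shingles(text, size=3):
    """Return the set of overlapping word n-grams ("shingles") in text."""
    words = text.lower().split()
    if len(words) < size:
        return {tuple(words)} if words else set()
    return {tuple(words[i:i + size]) for i in range(len(words) - size + 1)}


def jaccard_similarity(a, b, size=3):
    """Jaccard similarity of two texts' shingle sets, from 0.0 to 1.0."""
    sa, sb = shingles(a, size), shingles(b, size)
    if not sa and not sb:
        return 1.0
    return len(sa & sb) / len(sa | sb)


page = "search engines do not want to index multiple versions of similar content"
printer_friendly = "search engines do not want to index multiple versions of similar content"
other = "upload an image named favicon.ico in the root of your site"

print(jaccard_similarity(page, printer_friendly))  # 1.0, an exact duplicate
print(jaccard_similarity(page, other))             # 0.0, nothing in common
```

A printer friendly copy of a page scores at or near 1.0, which is why those pages tend to get filtered.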

See also:

- Duplicate Content Detection - video where Matt Cutts talks about the process of duplicate content detection

- Identifying and filtering near-duplicate documents

- Search Engine Patents On Duplicated Content and Re-Ranking Methods

- Stuntdubl: How to Remedy Duplicate Content

Some search queries might require significant time for a user to complete their information goals while other queries might be things which are quickly answered by a landing page, thus dwell time in isolation may not be a great relevancy signal. However search engines can also look at other engagement metrics like repeat visits, branded searches, relative CTR & if users clicking on a particular listing have a high POGO rate (by subsequently clicking on yet another different search result) to get an idea of a user's satisfaction with a particular website & fold these metrics into an algorithm like Panda.

In the past search engines were less aggressive at indexing dynamic content than they currently are. While they have greatly improved their ability to index dynamic content it is still preferable to use URL rewriting to help make dynamic content look static in nature.

E

Many paid links, such as those from quality directories, still count as signs of votes as long as they are also associated with editorial quality standards. If they are from sites without editorial control, like link farms, they are not likely to help you rank well. Using an algorithm similar to TrustRank, some search engines may place more trust on well known sites with strong editorial guidelines.

Please note that it is more important that copy reads well to humans than any boost you may think you will get by tweaking it for bots. If every occurrence of a keyword on a page is in emphasis that will make the page hard to read, convert poorly, and may look weird to search engines and users alike.

<em>emphasis</em> would appear as emphasis

Search engines may analyze end user behavior to help refine and improve their rankings. Sites which get a high CTR and have a high proportion of repeat visits from brand related searches may get a ranking boost in algorithms like Panda.

Brands are a popular form of entities, but many other forms of information like songs or movies are also known as entities. Information about entities may be shown in knowledge graph results.

If you are buying pay per click ads it is important to send visitors to the most appropriate and targeted page associated with the keyword they searched for. If you are doing link building it is important to point links at your most appropriate page when possible such that

- if anyone clicks the link they are sent to the most appropriate and relevant page

- you help search engines understand what the pages on your site are associated with

Some search marketers lacking in creativity tend to describe services sold by others as being unethical while their own services are ethical. Any particular technique is generally not associated with ethics, but is either effective or ineffective.

The only ethics issues associated with SEO are generally business ethics related issues. Two of the bigger frauds are

- Not disclosing risks: Some SEOs may use high risk techniques when they are not needed. Some may make that situation even worse by not disclosing potential risks to clients.

- Taking money & doing nothing: Since selling SEO services has almost no start up costs many of the people selling services may not actually know how to competently provide them. Some shady people claim to be SEOs and bilk money out of unsuspecting small businesses.

As long as the client is aware of potential risks there is nothing unethical about being aggressive.

In the past Google updated their index roughly once a month. Those updates were named Google Dances, but since Google shifted to a constantly updating index Google no longer does what was traditionally called a Google Dance.

See also:

- Matt Cutts Google Terminology Video - Matt talks about the history of Google Updates and the shift from Google Dances to everflux.

Some people believe in link hoarding, but linking out to other related resources is a good way to help search engines understand what your site is about. If you link out to lots of low quality sites or primarily rely on low quality reciprocal links some search engines may not rank your site very well. Search engines are more likely to trust high quality editorial links (both to and from your site).

F

Upload an image named favicon.ico in the root of your site to have your site associated with a favicon.

See also:

- HTML Kit - generate a favicon from a picture

- Favicon.co.uk - create a favicon online painting one pixel at a time.

Favorites (see bookmarks)

See also:

- Bloglines - popular web based feed reader

- Google Reader - popular web based feed reader

- My Yahoo! - allows you to subscribe to feed updates

- FeedDemon - desktop based feed reader

For example, if a site publishes significant duplicate content it may get a reduced crawl priority and get filtered out of the search results. Some search engines also have filters based on link quality, link growth rate, and anchor text. Some pages are also penalized for spamming.

Search engines tend to struggle indexing and ranking flash websites because flash typically contains so little relevant content. If you use flash ensure:

- you embed flash files within HTML pages

- you use a noembed element to describe what is in the flash

- you publish your flash content in multiple separate files such that you can embed appropriate flash files in relevant pages

Forward Links (see Outbound Links)

Given the popularity of server side includes, content management systems, and dynamic languages there really is no legitimate reason to use frames to build a content site today.

Many SEOs talk up fresh content, but fresh content does not generally mean re-editing old content. It more often refers to creating new content. The primary advantages to fresh content are:

- Maintain and grow mindshare: If you keep giving people a reason to pay attention to you more and more people will pay attention to you, and link to your site.

- Faster idea spreading: If many people pay attention to your site, when you come out with good ideas they will spread quickly.

- Growing archives: If you are a content producer then owning more content means you have more chances to rank. If you keep building additional fresh content eventually that gives you a large catalog of relevant content.

- Frequent crawling: Frequently updated websites are more likely to be crawled frequently.

- QDF: Google's query deserves freshness algorithm may boost the rankings of recently published documents for search queries where they believe users are looking for recent information.

The big risk of creating lots of "fresh" content for the sake of it is that many low cost content sources will have poor engagement metrics, which in turn will lead to a risk of the site being penalized by Panda. A good litmus test on this front is: if you didn't own your website would you still regularly visit it & read the new content published to it?

Freshness (see fresh content)

Froogle was an early name for the Google Shopping search tool. (see Google Shopping)

Many content management systems (such as blogging platforms) include FTP capabilities. Web development software such as Dreamweaver also comes with FTP capabilities. There are also a number of free or cheap FTP programs such as Cute FTP, Core FTP, and Leech FTP.

Fuzzy search technology is similar to stemming technology, with the exception that fuzzy search corrects misspellings at the user's end and stemming searches for other versions of the same core word within the index.

G

See also:

- Gladwell.com

- Gladwell's blog

- The Tipping Point - book about how ideas spread via a network of influencers (Connectors, Mavens, and Salesmen)

- Gladwell speech

See also:

- Seth's blog - Seth talks about marketing

- Purple Cow - Probably Seth's most popular book. It is about how to be remarkable. Links are citations or remarks. This book is highly recommended for any SEO.

- All Marketers Are Liars - Book about creating and marketing authentic brand related stories in a low trust world.

- The Big Red Fez - Small quick book about usability errors common to many websites.

- Google speech - see Seth's speech at Google.

- Squidoo - community driven topical lens site created by Seth Godin

See also

- Google corporate history

- Google labs - new products Google is testing

- Google papers - research papers by Googlers

Google has a shared crawl cache between their various spiders, including vertical search spiders and spiders associated with ad targeting.

Google AdSense (see AdSense)

Google AdWords (see AdWords)

Google Base may also help Google better understand what types of information are commercial in nature, and how they should structure different vertical search products.

See also:

- Google search: miserable failure - shows pages people tried ranking for that search query

Typically it is easier to bowl new sites out of the results. Older established sites are much harder to knock out of the search results.

Major search indexes are constantly updating. Google refers to this continuous refresh as everflux.

The second meaning of Google Dance is a yearly party at Google's corporate headquarters which Google holds for search engine marketers. This party coincides with the San Jose Search Engine Strategies conference.

See also:

- Matt Cutts Google Terminology Video - Matt talks about the history of Google Updates and the shift from Google Dances to everflux.

Keyword research tool provided by Google which estimates the competition for a keyword, recommends related keywords, and will tell you what keywords Google thinks are relevant to your site or a page on your site.

See also:

- Google Keyword Tool - tool offering all the above mentioned features

Google Search Console (see Google Webmaster Tools)

Please note that the best way to submit your site to search engines and to keep it in their search indexes is to build high quality editorial links.

See also:

- Google Webmaster Central - access to Google Sitemaps and other webmaster related tools.

If you do not submit a bid price the tool will return an estimated bid price necessary to rank #1 for 85% of Google's queries for a particular keyword.

While some aspects of the guidelines might be rather clear, other aspects are blurry, and based on inferences which may be incorrect like: "Don't deceive your users." Many would argue (and indeed have argued) that Google's ad labeling within their own search results is deceptive, Google has run ads for illegal steroids & other shady offers, etc. The ultimate goal of the Webmaster Guidelines is to minimize the ROI of SEO & discourage active investment into SEO.

For background on how arbitrary and uneven enforcement actions are compare this to this, then read this.

See also:

- Google Webmaster Guidelines

- Google's Advertisement Labeling in 2014

- Consumer Ad Awareness in Search Results

- FTC: letter to search engines [PDF]

- How a Career Con Man Led a Federal Sting That Cost Google $500 Million

While some of the Google Webmaster Tools may seem useful, it is worth noting Google uses webmaster registration data to profile & penalize other websites owned by the same webmaster. It is worth proceeding with caution when registering with Google, especially if your website is tied to a business model Google both hates & has cloned in their search results - like hotel affiliates.

Google renamed Google Webmaster Tools as Google Search Console in 2015.

H

Heading elements go from H1 to H6 with the lower numbered headings being most important. You should only use a single H1 element on each page, and may want to use multiple other heading elements to structure a document. An H1 element source would look like:

<h1>Your Topic</h1>

Heading elements may be styled using CSS. Many content management systems place the same content in the main page heading and the page title, although in many cases it may be preferable to mix them up if possible.
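If you want to check a page against the single H1 suggestion, a sketch like the following counts heading elements with Python's standard library HTML parser. The HeadingCounter class and heading_counts function are names I made up for this example.

```python
from html.parser import HTMLParser


class HeadingCounter(HTMLParser):
    """Count h1 through h6 start tags in an HTML document."""

    def __init__(self):
        super().__init__()
        self.counts = {f"h{n}": 0 for n in range(1, 7)}

    def handle_starttag(self, tag, attrs):
        # HTMLParser lowercases tag names before calling this method.
        if tag in self.counts:
            self.counts[tag] += 1


def heading_counts(html):
    """Return a dict such as {"h1": 1, "h2": 2, ...} for the given markup."""
    parser = HeadingCounter()
    parser.feed(html)
    parser.close()
    return parser.counts


sample = "<h1>Your Topic</h1><h2>Part one</h2><p>Text</p><h2>Part two</h2>"
counts = heading_counts(sample)
print(counts["h1"], counts["h2"])  # 1 2
```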

The title of an article or story.

While some sites may get away with it for a while, generally the risk to reward ratio is inadequate for most legitimate sites to consider using hidden text.

See also:

- Jon Kleinberg's Authoritative Sources in a Hyperlinked Environment [PDF]

As far as SEO goes, a home page is typically going to be one of the easier pages to rank for some of your more competitive terms, largely because it is easy to build links at a home page. You should ensure your homepage stays focused and reinforces your brand though, and do not assume that most of your visitors will come to your site via the home page. If your site is well structured many pages on your site will likely be far more popular and rank better than your home page for relevant queries.

Host (see Server)

As a note of caution, make sure you copy your current .htaccess file before editing it, and do not edit it on a site that you can't afford to have go down unless you know what you are doing.

Some newer web pages are also formatted in XHTML.

Topical hubs are sites which link to well trusted sites within their topical community. A topical authority is a page which is referenced from many topical hub sites. A topical hub is a page which references many authorities.

See also:

- Mike Grehan on Topic Distillation [PDF]

- Jon Kleinberg's Authoritative sources in a hyperlinked environment [PDF]

- Jon Kleinberg's home page

Code examples:

- default version of a document

<link rel="alternate" href="http://example.com/" hreflang="x-default" />

- German language content which applies to all regions

<link rel="alternate" href="http://example.com/" hreflang="de" />

- English language inside the UK

<link rel="alternate" href="http://example.com/" hreflang="en-GB" />

I

IDF = log ( total documents in database / documents containing the term )
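A quick sketch of the formula in code. The formula above does not say which log base to use, so this assumes the natural log; the collection sizes are invented for illustration.

```python
import math


def idf(total_documents, documents_with_term):
    """Inverse document frequency: log(total documents / documents containing the term)."""
    if documents_with_term <= 0:
        raise ValueError("term must appear in at least one document")
    return math.log(total_documents / documents_with_term)


# Hypothetical collection sizes, chosen only for illustration.
print(idf(1_000_000, 1_000_000))  # 0.0, a term in every document carries no weight
print(idf(1_000_000, 100))        # a rare term scores much higher
```

The rarer a term is across the collection, the higher its IDF, which is why common words add little to relevancy scoring.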

Most search engines allow you to see a sample of links pointing to a document by searching using the link: function. For example, using link:www.seobook.com would show pages linking to the homepage of this site (both internal links and inbound links). Due to canonical URL issues www.site.com and site.com may show different linkage data. Google typically shows a much smaller sample of linkage data than competing engines do, but Google still knows of and counts many of the links that do not show up when you use their link: function.

When search engines search they search via reverse indexes by words and return results based on matching relevancy vectors. Stemming and semantic analysis allow search engines to return near matches. Index may also refer to the root of a folder on a web server.
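Here is a toy reverse index to show the basic idea: each word maps to the documents which contain it, so a query only has to look up its own words rather than scan every document. It is a bare sketch with no stemming, ranking, or relevancy vectors, and the sample documents are made up.

```python
from collections import defaultdict


def build_reverse_index(documents):
    """Map each word to the set of document ids that contain it."""
    index = defaultdict(set)
    for doc_id, text in documents.items():
        for word in text.lower().split():
            index[word].add(doc_id)
    return index


def search(index, query):
    """Return ids of documents containing every word in the query."""
    words = query.lower().split()
    if not words:
        return set()
    results = set(index.get(words[0], set()))
    for word in words[1:]:
        results &= index.get(word, set())
    return results


# Hypothetical documents keyed by id.
docs = {1: "seo book glossary", 2: "link building glossary", 3: "seo link bait"}
index = build_reverse_index(docs)

print(search(index, "seo glossary"))  # {1}
print(search(index, "link"))          # {2, 3}
```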

It is preferable to use descriptive internal linking to make it easy for search engines to understand what your website is about. Use consistent navigational anchor text for each section of your site, emphasizing other pages within that section. Place links to relevant related pages within the content area of your site to help further show the relationship between pages and improve the usability of your website.

Good information architecture considers both how humans and search spiders access a website. Information architecture suggestions:

- focus each page on a specific topic

- use descriptive page titles and meta descriptions which describe the content of the page

- use clean (few or no variables) descriptive file names and folder names

- use headings to help break up text and semantically structure a document

- use breadcrumb navigation to show page relationships

- use descriptive link anchor text

- link to related information from within the content area of your web pages

- improve conversion rates by making it easy for people to take desired actions

- avoid feeding search engines duplicate or near-duplicate content

Internal Navigation (see Navigation)

Inverted File (see Reverse Index)

Many SEOs refer to unique C class IP addresses. Every site is hosted on a numerical address like aa.bb.cc.dd. In some cases many sites are hosted on the same IP address. It is believed by many SEOs that if links come from different IP ranges with a different number somewhere in the aa.bb.cc part then the link may count more than links from the same local range and host.
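To count how many distinct ranges a set of linking hosts comes from, you can group addresses by their aa.bb.cc part. This is only a sketch of that grouping for IPv4 addresses; the addresses below come from ranges reserved for documentation, and nothing here says how much weight (if any) a search engine gives to this.

```python
def c_class(ip):
    """Return the first three octets (the aa.bb.cc part) of an IPv4 address."""
    octets = ip.split(".")
    if len(octets) != 4 or not all(o.isdigit() and 0 <= int(o) <= 255 for o in octets):
        raise ValueError(f"not a dotted IPv4 address: {ip!r}")
    return ".".join(octets[:3])


def unique_c_classes(ips):
    """Return the set of distinct aa.bb.cc ranges among the given addresses."""
    return {c_class(ip) for ip in ips}


# Hypothetical IP addresses of sites linking to you.
linking_hosts = ["192.0.2.10", "192.0.2.77", "198.51.100.4", "203.0.113.9"]
print(len(unique_c_classes(linking_hosts)))  # 3 distinct ranges among 4 hosts
```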

IP delivery (see cloaking)

Italics (see emphasis)

J

Search engines do not index most content in JavaScript. In AJAX, JavaScript has been combined with other technologies to make web pages even more interactive.

K

Long tail and brand related keywords are typically worth more than shorter and vague keywords because they typically occur later in the buying cycle and are associated with a greater level of implied intent.

When people use keyword stuffed copy it tends to read mechanically (and thus does not convert well and is not link worthy), plus some pages that are crafted with just the core keyword in mind often lack semantically related words and modifiers from the related vocabulary (and that causes the pages to rank poorly as well).

See also:

- The Keyword Density of Non Sense

- Keyword Density Analysis Tool

- Search Engine Friendly Copywriting - What Does 'Write Naturally' Mean for SEO?

See also:

- MSN Search Funnels - shows keywords people search for before or after they search for another keyword

Example keyword discovery methods:

- using keyword research tools

- looking at analytics data or your server logs

- looking at page copy on competing sites

- reading customer feedback

- placing a search box on your site and seeing what people are looking for

- talking to customers to ask how and why they found and chose your business

Short list of the most popular keyword research tools:

- SEO Book Keyword Research Tool - free keyword tool cross references all of my favorite keyword research tools. In addition to linking to traditional keyword research tools, it also links to tools such as Google Suggest, Buzz related tools, vertical databases, social bookmarking and tagging sites, and latent semantic indexing related tools.

- Bing Ad Intelligence - Excel plugin offering keyword data from Microsoft. Requires an active Bing Ads advertiser account.

- Google AdWords Keyword Planner - powered from Google search data, but requires an active AdWords advertiser account.

- Wordtracker - paid, powered from Dogpile and MetaCrawler. Due to small sample size their keyword database may be easy to spam.

- More keyword tools

- Overture Term Suggestion - free, powered from Yahoo! search data. Heavily biased toward over representing commercial queries, combines singular and plural versions of a keyword into a single data point. This tool was originally located at http://inventory.overture.com/d/searchinventory/suggestion/ but was taken offline years ago.

Please note that most keyword research tools used alone are going to be highly inaccurate at giving exact quantitative search volumes. The tools are better for qualitative measurements. To test the exact volume for a keyword it may make sense to set up a test Google AdWords campaign.

When people use keyword stuffed copy it tends to read mechanically (and thus does not convert well and is not link worthy), plus some pages that are crafted with just the core keyword in mind often lack semantically related words and modifiers from the related vocabulary (and that causes the pages to rank poorly as well).

Keyword Suggestion Tools (see Keyword Research Tools)

See also:

- Jon Kleinberg's Authoritative sources in a hyperlinked environment [PDF]

- Jon Kleinberg's home page

- Hypersearching the Web

The goal of the knowledge graph is largely three-fold:

- Answer user questions quickly without requiring them to leave the search results, particularly for easy to answer questions about known entities.

- Displace the organic search result set by moving it further down the page, which in turn may cause a lift in the clickthrough rates on the ads shown above the knowledge graph.

- Some knowledge graph listings (like hotel search, book search, song search, lyric search) also include links to other Google properties or other forms of ads within them, further boosting the monetization of the search result page.

L

When Google AdWords launched, affiliates and arbitrage players made up a large portion of their ad market, but as more mainstream companies have spent on search marketing, Google has taken many measures to try to keep their ads relevant.

Most major search engines consider links as a vote of trust.

A few general link building tips:

- build conceptually unique linkworthy high quality content

- create viral marketing ideas that want to spread and make people talk about you

- mix your anchor text

- get deep links

- try to build at least a few quality links before actively obtaining any low quality links

- register your site in relevant high quality directories such as DMOZ, the Yahoo! Directory, and Business.com

- when possible try to focus your efforts mainly on getting high quality editorial links

- create link bait

- try to get bloggers to mention you on their blogs

- It takes a while to catch up with the competition, but if you work at it long enough and hard enough eventually you can enjoy a self-reinforcing market position

See also:

- 101 Ways to Build Link Popularity in 2006 - 101 ways you should and should not build links

- Filthy Linking Rich [PDF] - Mike Grehan article about how top rankings are self reinforcing

When links occur naturally they generally develop over time. In some cases it may make sense that popular viral articles receive many links quickly, but in those cases there are typically other signs of quality as well, such as:

- increased usage data

- increase in brand related search queries

- traffic from the link sources to the site being linked at

- many of the new links coming from new pages on trusted domains

Link Disavow (see Disavow)

Log files do not typically show as much data as analytics programs would, and if they do, it is generally not in a format that is as useful beyond seeing the top few stats.

Generally link hoarding is a bad idea for the following reasons:

- many authority sites were at one point hub sites that freely linked out to other relevant resources

- if you are unwilling to link out to other sites people are going to be less likely to link to your site

- outbound links to relevant resources may improve your credibility and boost your overall relevancy scores

"Of course, folks never know when we're going to adjust our scoring. It's pretty easy to spot domains that are hoarding PageRank; that can be just another factor in scoring. If you work really hard to boost your authority-like score while trying to minimize your hub-like score, that sets your site apart from most domains. Just something to bear in mind." - Quote from Google's Matt Cutts

See also:

- Why Paris Hilton Is Famous (Or Understanding Value In A Post-Madonna World) - article about how being a platform (ie: someone who freely links out) makes it easier to become an authority.

For competitive search queries link quality counts much more than link quantity. Google typically shows a smaller sample of known linkage data than the other engines do, even though Google still counts many of the links they do not show when you do a link: search.

Links may be broken for a number of reasons, but four of the most common reasons are:

- a website going offline

- linking to content which is temporary in nature (due to licensing structures or other reasons)

- moving a page's location

- changing a domain's content management system

Most large websites have some broken links, but if too many of a site's links are broken it may be an indication of outdated content, and it may provide website users with a poor user experience, both of which may cause search engines to rank a page as being less relevant.

See also:

- Xenu Link Sleuth is a free software program which crawls websites to find broken links.

Pages or sites which receive a huge spike of new inbound links in a short duration may hit automated filters and/or be flagged for manual editorial review by search engineers.

How does the long tail apply to keywords? Long Tail keywords are more precise and specific, thus have a higher value. As of writing this definition in the middle of October 2006 my leading keywords for this month are as follows:

| #reqs | search term |
|---|---|
| 1504 | seo book |
| 512 | seobook |
| 501 | seo |
| 214 | google auctions |
| 116 | link bait |
| 95 | aaron wall |
| 94 | gmail uk |
| 89 | search engine optimization |
| 86 | trustrank |
| 78 | adsense tracker |
| 73 | latent semantic indexing |
| 71 | seo books |
| 69 | john t reed |
| 67 | dear sir |
| 67 | book.com |
| 64 | link harvester |
| 64 | google adwords coupon |
| 58 | seobook.com |
| 55 | adwords coupon |
| 15056 | [not listed: 9,584 search terms] |

Notice how the nearly 10,000 unlisted terms account for roughly 10 times as much traffic as I got from my core brand related term (and this site only has a couple thousand pages and has a rather strong brand).
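The arithmetic behind that claim can be checked with a few lines of code. The figures are copied from the table above; the variable names are just for this example.

```python
# Request counts for the 19 listed search terms, copied from the table above.
listed = [1504, 512, 501, 214, 116, 95, 94, 89, 86, 78,
          73, 71, 69, 67, 67, 64, 64, 58, 55]
unlisted = 15056  # requests spread across the 9,584 unlisted search terms

total = sum(listed) + unlisted
ratio_to_top_term = unlisted / listed[0]
unlisted_share = 100 * unlisted / total

print(round(ratio_to_top_term, 1))  # 10.0 times the traffic of "seo book"
print(round(unlisted_share, 1))     # 79.5 percent of all requests
```

So the unlisted long tail terms drove roughly four fifths of the search requests that month, even though no single one of them made the list.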

See also:

- The Long Tail - official blog

- The Long Tail - the book

See also:

- Quintura Search - free LSI type keyword research tool.

- Patterns in Unstructured Data - free paper describing how LSI works

- SEO Book articles on LSI: #1 & #2 (Google may not be using LSI, but they are certainly using technologies with similar functions and purpose.)

- Johnon Go Words - article about how adding certain relevant words to a page can drastically improve its relevancy for other keywords

M

Sites which had a manual penalty would typically have a warning show in Google Webmaster Tools, whereas sites which have an automated penalty like Panda or Penguin would not.

See also:

- Another step to rewarding high-quality sites

- Understanding the Google Penguin Algorithm

- Disavow & Link Removal: Understanding Google

- Pandas, Penguins & Popsicles

See also:

- Inktomi Spam Database Left Open to Public - article about Inktomi's spam database from 2001

- Search Bistro - links to Google's General Guidelines on Random Query Evaluation [PDF] and Google's Spam Guide for Raters

- Google Image Labeler - example of how humans can be used to review content

See also:

- Mechanical Turk

- Google Image Labeler - image labeling game

- Human Computation Speech Video at Google

See also:

- Techmeme - meme tracker which shows technology ideas that are currently spreading on popular technology blogs

A good meta description tag should:

- be relevant and unique to the page;

- reinforce the page title; and

- focus on including offers and secondary keywords and phrases to help add context to the page title.

Relevant meta description tags may appear in search results as part of the page description below the page title.

The code for a meta description tag looks like this

<meta name="Description" content="Your meta description here." />

See also:

- Free meta tag generator - offers a free formatting tool and advice on creating meta description tags.

The code for a meta keyword tag looks like this

<meta name="Keywords" content="keyword phrase, another keyword, yep another, maybe one more ">

Many people spammed meta keyword tags and searchers typically never see the tag, so most search engines do not place much (if any) weight on it. Many SEO professionals no longer use meta keywords tags.

See also:

- Free meta tag generator - offers a free formatting tool and advice on creating meta description tags.

A meta refresh looks like this

<meta http-equiv="refresh" content="10;url=http://www.site.com/folder/page.htm">

In most cases it is preferable to use a 301 or 302 redirect rather than a meta refresh.
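When auditing a site for meta refreshes that could be replaced with server-side redirects, it can help to pull the delay and the target URL out of the tag's content value. The following is a minimal sketch that assumes the common "seconds;url=target" layout shown in the example tag; `parse_meta_refresh` is a hypothetical helper name, not part of any library.

```python
def parse_meta_refresh(content):
    """Split a meta refresh content value into (delay_seconds, url_or_None)."""
    # Everything before the first semicolon is the delay in seconds.
    delay_part, _, rest = content.partition(";")
    delay = float(delay_part.strip())
    rest = rest.strip()
    url = None
    # The target, if present, follows a case-insensitive "url=" prefix.
    if rest.lower().startswith("url="):
        url = rest[4:].strip().strip("'\"")
    return delay, url or None


delay, url = parse_meta_refresh("10;url=http://www.site.com/folder/page.htm")
print(delay, url)
```

A content value with no URL part, such as "5", simply reloads the same page, so the helper returns `None` for the target in that case.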

See also:

- Myriad Search - an ad free meta search engine

- The page title is highly important.

- The meta description tag is somewhat important.

- The meta keywords tag is not that important.

Sites with strong mindshare, top rankings, or a strong memorable brand are far more likely to be linked at than sites which are less memorable and have less search exposure. The link quality of mindshare related links most likely exceeds the quality of the average link on the web. If you sell non-commodities, personal recommendations also typically carry far greater weight than search rankings alone.

See also:

- Filthy Linking Rich [PDF] - Mike Grehan article about how top rankings are self reinforcing

Generally search engines prefer not to index duplicate content. One exception: if you are a hosting company, it might make sense to offer free hosting or a free mirror site to a popular open source software project in order to build significant link equity.

Movable Type is typically much harder to install than WordPress is.

See also:

See also:

Microsoft replaced MSN search with Bing, which was launched on June 3, 2009.

See also:

N

Natural Link (see Editorial Link)

Natural Search (see Organic Search Results)

It is best to use regular HTML navigation rather than coding your navigation in JavaScript, Flash, or some other type of navigation which search engines may not be able to easily index.

Over time Google shifts many link building strategies from being considered white hat to gray hat to black hat. A competitor (or a person engaging in reputation management) can point a bunch of low-quality links with aggressive anchor text at a page in order to try to get the page filtered from the search results. If these new links cause a manual penalty, then the webmaster who gets penalized may not only have to disavow the new spam links, but they may have to try to remove or disavow links which were in place for 5 or 10 years already which later became "black hat" ex-post-facto. There are also strategies to engage in negative SEO without using links.

See also:

Search is a broad field, but as you drill down each niche consists of many smaller niches. An example of drilling down to a niche market:

- search

- search marketing, privacy considerations, legal issues, history of, future of, different types of vertical search, etc.

- search engine optimization, search engine advertising

- link building, keyword research, reputation monitoring and management, viral marketing, SEO copywriting, Google AdWords, information architecture, etc.

Generally it is easier to compete in small, new, or underdeveloped niches than trying to dominate large verticals. As your brand and authority grow you can go after bigger markets.

The code to use nofollow on a link looks like this:

<a href="http://www.seobook.com" rel="nofollow">anchor text</a>

Nofollow can also be used in a robots meta tag to prevent a search engine from counting any outbound links on a page. This code would look like this:

<META NAME="ROBOTS" CONTENT="INDEX, NOFOLLOW">

Google's Matt Cutts also pushes webmasters to use nofollow on any paid links, but since Google is the world's largest link broker, their advice on how other people should buy or sell links should be taken with a grain of salt. Please note that it is generally not advised to practice link hoarding as that may look quite unnatural. Outbound links may also boost your relevancy scores in some search engines.

In March 2020 Google began treating the nofollow attribute on a link as a hint rather than a directive.
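To see which outbound links on a page carry nofollow, either on the link itself or through a page-level robots meta tag, the page can be scanned with Python's standard `html.parser` module. This is only a sketch: the `LinkCollector` class and `classify_links` function are hypothetical names, and how any particular search engine actually treats these signals is not something the code can show.

```python
from html.parser import HTMLParser


class LinkCollector(HTMLParser):
    """Collect links and note link-level and page-level nofollow."""

    def __init__(self):
        super().__init__()
        self.page_nofollow = False
        self.links = []  # list of (href, link_has_nofollow)

    def handle_starttag(self, tag, attrs):
        # HTMLParser lowercases tag and attribute names; values keep their case.
        attrs = dict(attrs)
        if tag == "meta" and (attrs.get("name") or "").lower() == "robots":
            content = attrs.get("content") or ""
            directives = [part.strip().lower() for part in content.split(",")]
            if "nofollow" in directives:
                self.page_nofollow = True
        elif tag == "a" and attrs.get("href"):
            # rel is a space-separated token list, e.g. rel="nofollow noopener".
            rel_tokens = (attrs.get("rel") or "").lower().split()
            self.links.append((attrs["href"], "nofollow" in rel_tokens))


def classify_links(html):
    """Return (href, is_nofollow) pairs for every link in the markup."""
    parser = LinkCollector()
    parser.feed(html)
    return [(href, flag or parser.page_nofollow) for href, flag in parser.links]


sample = (
    '<a href="http://www.seobook.com" rel="nofollow">anchor text</a>'
    '<a href="http://example.com/">another link</a>'
)
for href, is_nofollow in classify_links(sample):
    print(href, is_nofollow)
```

The page-level flag is applied after the whole document is parsed, so a robots meta tag is honored even if it appears after some links in the markup.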

Not Provided (see Keyword (Not Provided))

O

See also:

Open Directory Project, The (see DMOZ)

On the web open source is a great strategy for quickly building immense exposure and mindshare.

See also:

Most clicks on search results are on the organic search results. Some studies have shown that 60% to 80% or more of clicks are on the organic search results.

Some webmasters believe in link hoarding, but linking out to useful relevant related documents is an easy way to help search engines understand what your website is about. If you reference other resources it also helps you build credibility and leverage the work of others without having to do everything yourself. Some webmasters track where their traffic comes from, so if you link to related websites they may be more likely to link back to your site.

See also:

- Live Search: LinkFromDomain:SEOBook.com - shows pages that my site links at.

See also:

See also:

P

Co-founder of Google.

Since PageRank is widely bartered, Google's relevancy algorithms had to move away from relying on PageRank and place more emphasis on trusted links via algorithms such as TrustRank.

The PageRank formula is:

PR(A) = (1-d) + d (PR(T1)/C(T1) + ... + PR(Tn)/C(Tn))

PR = PageRank

d = damping factor (~0.85)

C(T) = number of outbound links on page T

PR(T1)/C(T1) = PageRank of page T1 divided by the total number of links on page T1 (transferred PageRank)

In text: for any given page A, the PageRank PR(A) equals one minus the damping factor, plus the damping factor multiplied by the sum of the partial PageRank passed from each page pointing at it.
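The formula can be applied repeatedly to a small link graph until the scores settle. The sketch below is a toy illustration of that iteration, not a description of how Google computes PageRank in production; the function name `pagerank`, the three-page graph, the starting score of 1.0, and the iteration count are all assumptions chosen for the example.

```python
def pagerank(links, d=0.85, iterations=50):
    """Iterate PR(A) = (1 - d) + d * sum(PR(T) / C(T)) over pages T linking to A.

    `links` maps each page to the pages it links to.
    """
    # De-duplicate targets so C(T) counts each outbound link once.
    outlinks = {src: set(targets) for src, targets in links.items()}
    pages = set(outlinks)
    for targets in outlinks.values():
        pages.update(targets)

    pr = {page: 1.0 for page in pages}  # arbitrary starting score
    for _ in range(iterations):
        new_pr = {}
        for page in pages:
            # Sum the share of PageRank passed by every page that links here.
            inbound = sum(
                pr[src] / len(targets)
                for src, targets in outlinks.items()
                if page in targets
            )
            new_pr[page] = (1 - d) + d * inbound
        pr = new_pr
    return pr


# Hypothetical graph: A links to B and C, B links to C, C links back to A.
graph = {"A": ["B", "C"], "B": ["C"], "C": ["A"]}
ranks = pagerank(graph)
for page in sorted(ranks, key=ranks.get, reverse=True):
    print(page, round(ranks[page], 3))
```

In this toy graph page C ends up with the highest score because it receives all of B's vote plus half of A's, while B scores lowest because its only inbound link is half of A's vote.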

See also:

- The Anatomy of a Large-Scale Hypertextual Web Search Engine

- The PageRank Citation Ranking: Bringing Order to the Web

Page Title (see Title)

See also:

- Directories such as the Yahoo! Directory and Business.com allow websites to be listed for a flat yearly cost.

- Yahoo! Search allows webmasters to pay for inclusion for a flat review fee and a category based cost per click.

Paid Link (see Text Link Ads)

Sites which have broad, shallow content (like eHow) along with ad heavy layouts, few repeat visits, and high bounce rates from search visitors are likely to get penalized. Highly trusted & well known brands (like Amazon.com) are likely to receive a ranking boost. In addition to the article databases & other content farms, many smaller ecommerce sites were torched by Panda.

Sites which had a manual penalty would typically have a warning show in Google Webmaster Tools, whereas sites which have an automated penalty like Panda or Penguin would not.

See also:

- Google: More guidance on building high-quality sites

- Questioning Amit Singhal's Questions

- The Moral Concept: Panda List

- SEObyTheSea: The Panda Patent

Publishers publishing contextual ads are typically paid per ad click. Affiliate marketing programs pay affiliates for conversions - leads, downloads, or sales.

If a site is penalized algorithmically, it may start ranking again some time after the cause of the penalty is fixed. If a site is penalized manually, the penalty may last an exceptionally long time or require contacting the search engine with a reinclusion request to remedy.

Some sites are also filtered for various reasons.

See also:

- Google -30 rank penalty - an example of a penalty

When Google launched the Penguin algorithm they obfuscated the emphasis on links by also updating some on-page keyword stuffing classifiers at the same time. Initially they also failed to name the Penguin update & simply called it a spam update, only later naming it after plenty of blowback due to false positives. In many cases they run updates on top of one another or in close proximity to obfuscate which update caused an issue for a particular site. Sometimes Google would put out a wave of link warnings and manual penalties around the time Penguin updated & other times Penguin and Panda would run right on top of one another.

Sites which were hit by later versions of Penguin could typically recover on the next periodic Penguin update after disavowing low quality links inside Google Webmaster Tools, but sites which were hit by the first version of Penguin had a much harder time recovering. Sites which had a manual penalty would typically have a warning show in Google Webmaster Tools, whereas sites which have an automated penalty like Panda or Penguin would not.

See also:

- Another step to rewarding high-quality sites

- Understanding the Google Penguin Algorithm

- Disavow & Link Removal: Understanding Google

- Pandas, Penguins & Popsicles

See also:

See also:

See also:

A high POGO rate can be seen as a poor engagement metric, which in turn can flag a site to be ranked lower using an algorithm like Panda.

See also:

- AdWords - Google's PPC ad platform

- AdCenter - Microsoft's PPC ad platform

- Yahoo! Search Marketing - Yahoo!'s PPC ad platform

Search spam and the complexity of language challenge the precision of search engines.

A page which has words near one another may be deemed more likely to satisfy a search query containing both terms. If keyword phrases are repeated an excessive number of times, and both words sit close together in every occurrence, it may also be a sign of unnatural (and thus potentially low quality) content.
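One simple way to put a number on proximity is to count how many word positions separate the closest occurrences of two terms. The sketch below does that with a basic tokenizer; the function name `min_word_distance` and the sample sentence are hypothetical, and real search engines almost certainly use more sophisticated measures than this.

```python
import re


def min_word_distance(text, term_a, term_b):
    """Return the smallest gap in word positions between two terms, or None."""
    words = re.findall(r"[a-z0-9']+", text.lower())
    term_a, term_b = term_a.lower(), term_b.lower()
    last_a = last_b = best = None
    for i, word in enumerate(words):
        if word == term_a:
            last_a = i
        elif word == term_b:
            last_b = i
        else:
            continue
        # Compare the most recent sighting of each term once both have appeared.
        if last_a is not None and last_b is not None:
            gap = abs(last_a - last_b)
            if best is None or gap < best:
                best = gap
    return best


copy = "cheap flights to Paris and cheap hotels in Paris"
print(min_word_distance(copy, "cheap", "paris"))
```

A gap of 1 means the two terms are adjacent; `None` means at least one of the terms does not appear in the text.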

Q

Newly published content may be seen as fresh content, but older content may also be seen as fresh if it has been recently updated, has a big spike in readership, and/or has a large spike in its link velocity (the rate of growth of inbound links).

See also:

There are a variety of ways to define what a quality link is, but the following are characteristics of a high quality link:

- Trusted Source: If a link is from a page or website which seems like it is trustworthy then it is more likely to count more than a link from an obscure, rarely used, and rarely cited website. See TrustRank for one example of a way to find highly trusted websites.

- Hard to Get: The harder a link is to acquire the more likely a search engine will be to want to trust it and the more work a competitor will need to do to try to gain that link.

- Aged: Some search engines may trust links from older resources or links that have existed for a length of time more than they trust brand new links or links from newer resources.

- Co-citation: Pages that link at competing sites which also link to your site make it easy for search engines to understand what community your website belongs to. See Hilltop for an example of an algorithm which looks for co-citation from expert sources.

- Related: Links from related pages or related websites may count more than links from unrelated sites.

- In Content: Links which are in the content area of a page are typically going to be more likely to be editorial links than links that are not included within the editorial portion of a page.

While a link with appropriate anchor text may help you rank even better than a link which lacks it, it is worth noting that for competitive queries Google is more likely to place weight on a high quality link where the anchor text does not match than to trust low quality links where the anchor text matches.

Query refinement is both a manual and an automated process. If searchers do not find their search results relevant, they may search again. Search engines may also automatically refine queries using the following techniques:

- Google OneBox: promotes a vertical search database near the top of the search result. For example, if image search is relevant to your search query images may be placed near the top of the search results.

- Spell Correction: offers a did you mean link with the correct spelling near the top of the results.

- Inline Suggest: offers related search results in the search results. Some engines also suggest a variety of related search queries.

Some search toolbars also aim to help searchers auto complete their search queries by offering a list of most popular queries which match the starting letters that a searcher enters into the search box.
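The auto complete behavior described above can be sketched as a prefix match over a table of query popularity. The function name `suggest` and the query counts below are made up for illustration; an actual toolbar would draw on real query logs and likely a faster data structure such as a trie.

```python
def suggest(prefix, query_counts, limit=5):
    """Return the most popular queries that start with the typed prefix."""
    prefix = prefix.lower()
    matches = [q for q in query_counts if q.lower().startswith(prefix)]
    # Most popular first; ties are broken alphabetically for stable output.
    matches.sort(key=lambda q: (-query_counts[q], q))
    return matches[:limit]


# Hypothetical popularity counts for a handful of queries.
popular = {"seo": 900, "search engine": 700, "seo book": 500, "sem": 300}
print(suggest("se", popular, limit=3))
```

As the searcher types more letters the candidate list narrows, so typing "seo" in this example would drop "search engine" and "sem" from the suggestions.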

R